The core problem here is more fundamental: the servers in the room are taking in a certain amount of electricity, doing work with it, and giving you a (computational) result...as well as shedding waste heat.

Your problem is this: say, as an example, each server sheds 200 BTU/hr while operating (just to pick a number). You have 6 servers at 200 BTU/hr max each, plus networking gear...you need NOTABLY over 1200 BTU/hr of cooling to keep up. You WILL lose efficiency somewhere (dust, venting, whatever), and you WILL have heat sources you didn't factor in, like fluorescent lights and so on.
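To make that arithmetic concrete, here is a rough sketch of the sizing math. The 200-per-server figure is the same made-up number as above, and the 25% margin and the function names are my own assumptions for illustration, not a sizing standard. The one hard fact in here is the conversion: 1 watt of electrical load ends up as roughly 3.412 BTU/hr of heat.

```python
# Rough cooling-load estimate. All inputs are illustrative placeholders.

WATTS_TO_BTU_PER_HR = 3.412  # 1 W of electrical draw is about 3.412 BTU/hr of heat


def watts_to_btu_hr(watts):
    """Convert an electrical load in watts to heat output in BTU/hr."""
    return watts * WATTS_TO_BTU_PER_HR


def required_cooling_btu_hr(server_count, btu_hr_per_server,
                            other_loads_btu_hr=0.0, margin=0.25):
    """Return a cooling capacity to aim for, in BTU/hr.

    other_loads_btu_hr covers things like network gear and lights.
    margin is an assumed safety factor for dust, poor venting, and
    whatever else was not counted.
    """
    heat_load = server_count * btu_hr_per_server + other_loads_btu_hr
    return heat_load * (1.0 + margin)


if __name__ == "__main__":
    # The example from the text: 6 servers at 200 BTU/hr each.
    print(required_cooling_btu_hr(6, 200))
```

If you have real power-draw numbers (from a UPS readout or the PSU ratings), run them through `watts_to_btu_hr` first instead of guessing the per-server figure.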

The air conditioner only cools to 81F because that's where its (likely badly overloaded) cooling system reaches a new equilibrium with the hotter environment.

If this cooling overload is what I suspect, adding another server MAY bump it only 5 more degrees, say, but adding a second would likely be the end of things. It would reach a runaway point, then the less-efficient electronics would shed even more heat as they ran harder...

At this point, you have a Situation like MY company did (in a server room with some 300 servers as I recall!)...the servers started dropping offline as they reached thermal maximums and tripped shutdowns, with the majority of the rest crashing. When we ran in to check the room, the 'mass failure' was in fact the room being at 120F. Tracking the CPU and ambient temperatures had never been 'a thing' for us, since "we had enough cooling"...we thought. We had recently added 25 more units, and that was more than it could survive.

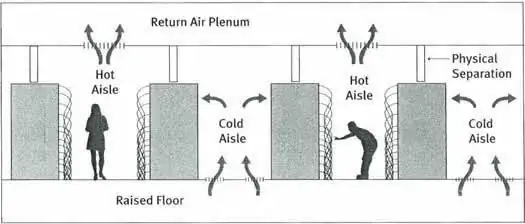

Spacing might change the efficiency of heat removal in the servers themselves, but the room is hot simply because heat isn't being removed from the environment as a whole. More efficiently moving heat from A to B (computer to room), without having a way for B to shed more to C (room to outside/building) in turn, is ultimately self-defeating.

TL;DR variation: The heat generated by converting electricity into webcomics and network shares must be adequately removed by the cooling unit, which it is only barely managing to do right now. Add more air conditioning, or yes, remove a server or two.

In a pinch, turn on power management/CPU throttling on the servers and let the CPUs idle down when they're not fully loaded. Turn off monitors, turn off the overhead lights...also check the A/C's filter and coils for dust, which would make it run less efficiently. You may also wish to track the CPU core temps on each server; 81F in that room may mean 140F on the CPU, or who knows what. It's not good, though.
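For the temperature tracking, here is a minimal sketch, assuming the servers run Linux and expose sensors under `/sys/class/thermal` (the original setup's OS isn't stated, so treat that as a guess). The kernel reports each zone's `temp` file in thousandths of a degree Celsius; the function names are mine, and which zone maps to the CPU varies by machine.

```python
from pathlib import Path


def celsius_to_fahrenheit(deg_c):
    """Convert degrees Celsius to degrees Fahrenheit."""
    return deg_c * 9.0 / 5.0 + 32.0


def read_thermal_zones(base="/sys/class/thermal"):
    """Return {zone_name: temperature_in_F} for each readable thermal zone.

    Assumes the Linux sysfs layout: <base>/thermal_zone*/temp holding an
    integer in millidegrees Celsius. Unreadable zones are skipped.
    """
    readings = {}
    for zone in sorted(Path(base).glob("thermal_zone*")):
        try:
            millideg_c = int((zone / "temp").read_text().strip())
        except (OSError, ValueError):
            continue  # sensor missing, unreadable, or not a number
        readings[zone.name] = celsius_to_fahrenheit(millideg_c / 1000.0)
    return readings


if __name__ == "__main__":
    for name, temp_f in read_thermal_zones().items():
        print(f"{name}: {temp_f:.1f}F")
```

Run it from cron every few minutes and log the output, and you'll at least see a climb coming before the servers start tripping their thermal shutdowns.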

(edited to clarify)