I have an Amazon OpsWorks stack running HAProxy (balance = source) and several node.js instances running socket.io. It seems HAProxy determines the max session limit for a given instance based on the memory limits of that instance, which is fine, but my application can often expect clients to be utilising two pages, both connected to a socket, for upwards of 4 hours.

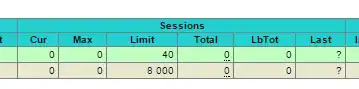

With the max session limit at 40 or 180, I'd only be able to have 20 or 90 concurrent clients (at two connections each) until one disconnects. If the limit is reached, other clients are placed in a queue until a slot becomes free, which, given the nature of the site, is unlikely to happen for quite some time. That means the site would only work for a small minority of users.
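To make the arithmetic I'm worried about concrete, here is a minimal sketch. The helper name `maxConcurrentClients` and the constant are hypothetical, and it assumes every client holds exactly two long-lived connections (one per page).

```javascript
// Hypothetical helper: how many clients fit under a per-server session limit
// when every client holds several long-lived socket connections.
function maxConcurrentClients(sessionLimit, connectionsPerClient) {
  return Math.floor(sessionLimit / connectionsPerClient);
}

// Assumption: two pages per client, each with its own socket connection.
const CONNECTIONS_PER_CLIENT = 2;

console.log(maxConcurrentClients(40, CONNECTIONS_PER_CLIENT)); // 20
```

Any client beyond that number would have to wait for an existing connection to close.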

What's the best way of getting around this? I've read several posts where they have a backend of 4,000 - 30,000 and a max session limit of just 30 per server, but how do they achieve this? Is there a setting in HAProxy, or is it more likely that they continuously disconnect and reconnect the client through the application itself?

Edit

To shed some more light on the application: it is a PHP application that uses sockets for real-time events. The sockets are handled by socket.io, with the server created using express. This server.js file communicates with an Amazon ElastiCache Redis server (which, to my understanding, socket.io 1.0 uses to handle all of this on the backend).

On the client side, users connect to the socket server and, once connected, emit a join event in order to join a room unique to them. A user will then load a second page, which again emits a join event and joins that same unique room. This allows them to emit and receive various events over the course of their session, which, again, can last upwards of 4 hours.

HAProxy routes the user to the same server based upon their IP hash (balance source) - the rest of the options are kept to the OpsWorks default - see the config file below.
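As I understand it, `balance source` hashes the client IP and maps it onto the set of running servers, so the same IP keeps landing on the same node. The sketch below is only my mental model: the hash is a simple stand-in rather than HAProxy's actual function, server weights are ignored, and the backend names are hypothetical.

```javascript
// Illustrative only: HAProxy's real hash function differs in detail.
// Pack the four octets of an IPv4 address into one unsigned 32-bit integer.
function hashIp(ip) {
  return ip
    .split('.')
    .reduce((acc, octet) => ((acc << 8) | Number(octet)) >>> 0, 0);
}

// Map the hash onto the list of available servers (weights ignored).
function pickServer(ip, servers) {
  return servers[hashIp(ip) % servers.length];
}

const servers = ['node-a', 'node-b', 'node-c']; // hypothetical backend names

// The same client IP always maps to the same server while the list is unchanged.
console.log(pickServer('203.0.113.7', servers) === pickServer('203.0.113.7', servers)); // true
```

This also means both of a user's pages end up on the same node, so each user takes two session slots on a single server.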

I guess what I need to know is if Cur sessions hits 40, and these connections are long-lived (i.e. they don't get readily disconnected), what will happen to those in the queue? It's no good if they are left waiting 4 hours obviously.
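My reading of `timeout queue 120s` in the config is that a queued request waits at most 120 seconds for a free slot and is then rejected, but I'd like that confirmed. Below is a rough model of what I think happens. It is my assumption, not HAProxy's actual scheduler: `simulateQueue` is a hypothetical function, queued clients are served first-come first-served, and a client that gets a slot never gives it back within the simulated period.

```javascript
// slotFreeTimes: seconds from now at which each busy slot becomes free.
// arrivalTimes:  seconds from now at which each new client starts queueing.
function simulateQueue(slotFreeTimes, arrivalTimes, queueTimeoutSec) {
  const freeTimes = [...slotFreeTimes].sort((a, b) => a - b);
  return arrivalTimes.map((arrival) => {
    if (freeTimes.length > 0) {
      const wait = Math.max(0, freeTimes[0] - arrival);
      if (wait <= queueTimeoutSec) {
        freeTimes.shift(); // the queued client takes the slot and keeps it
        return { arrival, outcome: 'served', waitedSec: wait };
      }
    }
    // No slot frees up in time: the client gives up after the queue timeout.
    return { arrival, outcome: 'timed out', waitedSec: queueTimeoutSec };
  });
}

// One slot frees after 60 s; the others are held by 4-hour sessions.
const results = simulateQueue([60, 14400, 14400], [0, 10], 120);
console.log(results);
```

In this model the first queued client gets the slot that frees up after 60 seconds, while the second gives up after 120 seconds because every other slot is held by a 4-hour session.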

--

HAProxy.cfg

    global
      log 127.0.0.1 local0
      log 127.0.0.1 local1 notice
      #log loghost local0 info
      maxconn 80000
      #debug
      #quiet
      user haproxy
      group haproxy
      stats socket /tmp/haproxy.sock

    defaults
      log global
      mode http
      option httplog
      option dontlognull
      retries 3
      option redispatch
      maxconn 80000
      timeout client 60s # Client and server timeout must match the longest
      timeout server 60s # time we may wait for a response from the server.
      timeout queue 120s # Don't queue requests too long if saturated.
      timeout connect 10s # There's no reason to change this one.
      timeout http-request 30s # A complete request may never take that long.
      option httpclose # disable keepalive (HAProxy does not yet support the HTTP keep-alive mode)
      option abortonclose # enable early dropping of aborted requests from pending queue
      option httpchk # enable HTTP protocol to check on servers health
      stats auth strexm:OYk8834nkPOOaKstq48b
      stats uri /haproxy?stats

    # Set up application listeners here.
    listen application 0.0.0.0:80
      # configure a fake backend as long as there are no real ones
      # this way HAProxy will not fail on a config check
      balance source
      server localhost 127.0.0.1:8080 weight 1 maxconn 5 check

Server.js

    var express = require('express');
    var app = express();
    var server = require('http').Server(app);
    var io = require('socket.io')(server);
    var redis = require('socket.io-redis');

    io.adapter(redis({ host: ###, port: 6379 }));

    server.listen(80); // opsworks node.js server requires port 80

    app.get('/', function (req, res) {
        res.sendfile('./index.html');
    });

    io.sockets.on('connection', function (socket) {
        socket.on('join', function(room) {
            socket.join(room);
        });

        socket.on('alert', function (room) {
            socket.in(room).emit('alert_dashboard');
        });

        socket.on('event', function(data) {
            socket.in(data.room).emit('event_dashboard', data);
        });
    });

Client

    var socket = io.connect('http://haproxy_server_ip:80');

    socket.on('connect', function() {
        socket.emit('join', room id #);
    });