I have a small VPS setup with nginx. I want to squeeze as much performance as possible out of it, so I've been experimenting with optimization and load testing.

I'm using Blitz.io to do load testing by GETing a small static text file, and running into an odd issue where the server appears to be sending TCP resets once the number of simultaneous connections reaches roughly 2000. I know this is a very large amount, but from using htop the server still has plenty to spare in CPU time and memory, so I would like to figure out the source of this issue to see if I can push it even further.

I am running Ubuntu 14.04 LTS (64-bit) on a 2GB Linode VPS.

I don't have enough reputation to post this graph directly, so here's a link to the Blitz.io graph:

Here are things I've done to try and figure out the source of the issue:

- The nginx config value

worker_rlimit_nofileis set to 8192 - have

nofileset to 64000 for both hard and soft limits forrootandwww-datauser (what nginx runs as) in/etc/security/limits.conf there are no indications anything is going wrong in

/var/log/nginx.d/error.log(typically, if you are running into file descriptor limits, nginx will print error messages saying so)I have ufw setup, but no rate limiting rules. The ufw log indicates nothing is being blocked and I have tried disabling ufw with the same result.

- There are no indicative errors in

/var/log/kern.log - There are no indicative errors in

/var/log/syslog I have added the following values to

/etc/sysctl.confand loaded them withsysctl -pwith no effect:net.ipv4.tcp_max_syn_backlog = 1024 net.core.somaxconn = 1024 net.core.netdev_max_backlog = 2000

Any ideas?

EDIT: I did a new test, ramping to 3000 connections on a very small file (only 3 bytes). Here's the Blitz.io graph:

Again, according to Blitz all of these errors are "TCP Connection reset" errors.

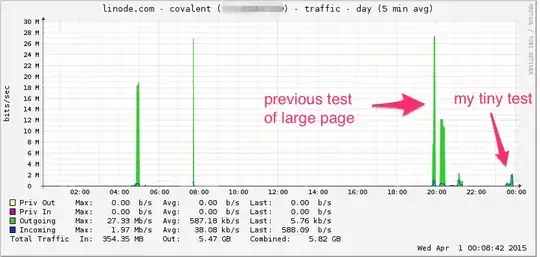

Here's the Linode bandwidth graph. Keep in mind this is a 5 minute average so it's low pass filtered a bit (instantaneous bandwidth is probably much higher), but still, this is nothing:

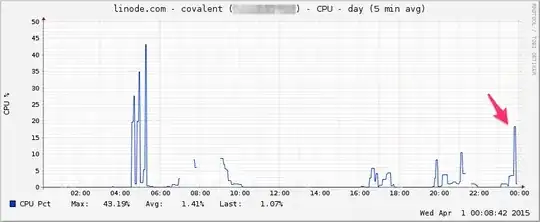

CPU:

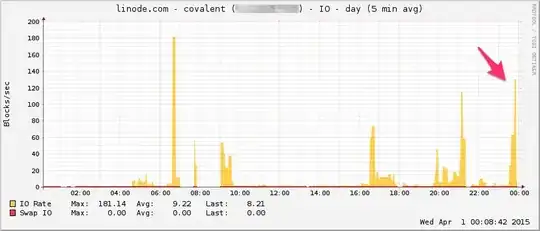

I/O:

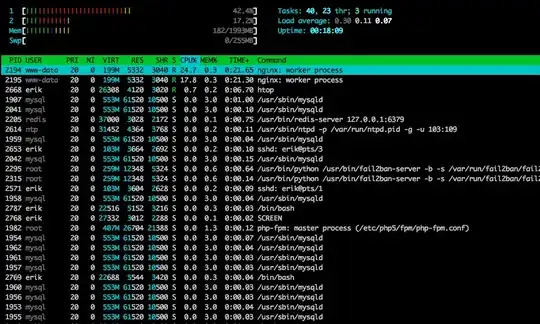

Here's htop near the end of the test:

I also captured some of the traffic using tcpdump on a different (but similar-looking) test, starting the capture when the errors started coming in:

sudo tcpdump -nSi eth0 -w /tmp/loadtest.pcap -s0 port 80

Here's the file if anyone wants to take a look at it (~20MB): https://drive.google.com/file/d/0B1NXWZBKQN6ETmg2SEFOZUsxV28/view?usp=sharing

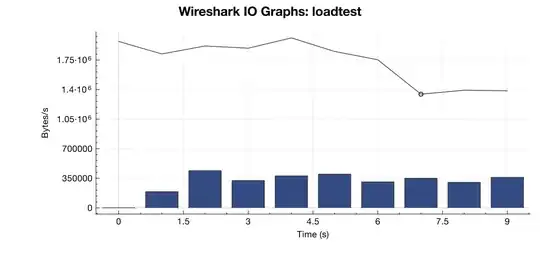

Here's a bandwidth graph from Wireshark:

(Line is all packets, blue bars are TCP errors)

(Line is all packets, blue bars are TCP errors)

From my interpretation of the capture (and I'm no expert), it looks like the TCP RST flags are coming from the load testing source, not the server. So, assuming that something isn't wrong on the load testing service's side, is it safe to assume that this is the result of some sort of network management or DDOS mitigation between the load testing service and my server?

Thanks!