I am using a base image and creating many VMs from it. I want to know which format is better for the base image: qcow2 or raw. Also, is there any advantage to using a base image with per-VM overlays instead of cloning the whole disk for each VM? Speed may be one factor, but in terms of efficiency, is there any drawback to creating VMs from a shared base image?

Edit 1:

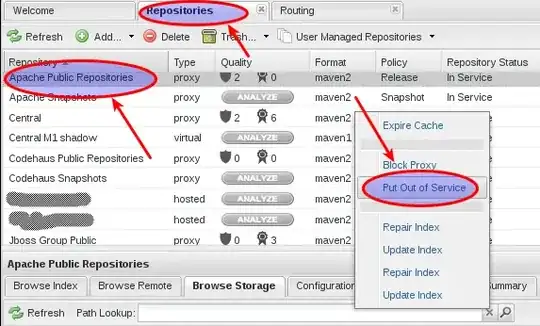

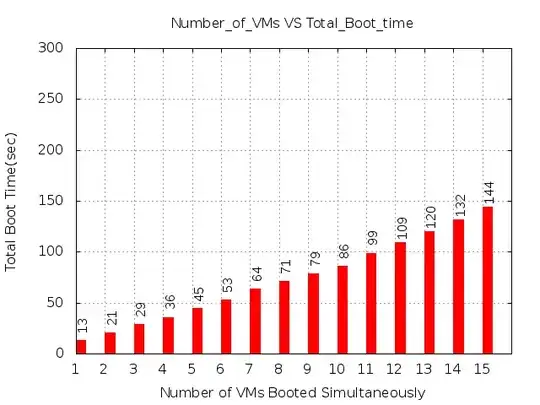

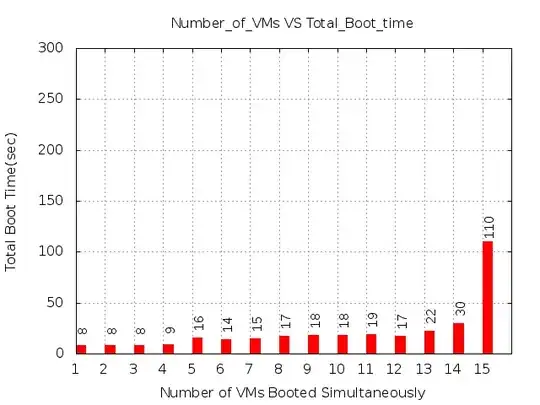

I ran some experiments and got the graphs above.

The first graph is for a qcow2 base image with a qcow2 overlay per VM. The second is for a raw base image with a qcow2 overlay per VM. In the third case each VM gets its own full raw disk image. Surprisingly, the last case is much more efficient than the other two.
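For reference, a minimal sketch of how the overlay and full-copy setups can be created with `qemu-img`. The file names and the helper `qemu_img_commands` are placeholders chosen for illustration, not the actual paths or scripts used in the experiment; the function only builds the command lines and does not run them.

```python
def qemu_img_commands(base="base.img", overlay="vm1-overlay.qcow2",
                      full_copy="vm1.raw", base_fmt="qcow2"):
    """Return qemu-img argument lists for an overlay and for a full raw copy.

    All file names are hypothetical placeholders.
    """
    # Overlay: a thin qcow2 file that stores only the blocks that differ
    # from the backing (base) image, whose format is given with -F.
    make_overlay = ["qemu-img", "create", "-f", "qcow2",
                    "-b", base, "-F", base_fmt, overlay]
    # Full copy: the VM gets its own complete raw image.
    make_copy = ["qemu-img", "convert", "-f", base_fmt, "-O", "raw",
                 base, full_copy]
    return make_overlay, make_copy


if __name__ == "__main__":
    # Cases 1 and 2: qcow2 overlay on a qcow2 base, then on a raw base.
    for fmt in ("qcow2", "raw"):
        overlay_cmd, _ = qemu_img_commands(base_fmt=fmt)
        print(" ".join(overlay_cmd))
    # Case 3: an individual raw image per VM.
    _, copy_cmd = qemu_img_commands(base_fmt="raw")
    print(" ".join(copy_cmd))
```

Each list can be passed to `subprocess.run` to actually create the image.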

Experimental setup: guest OS in base image: Ubuntu Server 14.04 64-bit; host OS: Ubuntu 12.04 64-bit; RAM: 8 GB; processor: Intel® Core™ i5-4440 CPU @ 3.10GHz × 4; disk: 500 GB.

X-axis: number of VMs booted simultaneously, starting from 1 and incremented up to 15.

Y-axis: total time to boot "x" machines.

From the graphs, it seems that giving each VM a full disk image is much more efficient than the other two methods.
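The measurement behind these axes can be sketched roughly as follows. This is only an illustration of "total time to boot x machines at once": `fake_boot` is a hypothetical stand-in for whatever starts a VM and waits until it is up, which is not shown here.

```python
import threading
import time


def time_concurrent(n, task):
    """Start n copies of task at once; return seconds until all have finished."""
    threads = [threading.Thread(target=task, args=(i,)) for i in range(n)]
    start = time.monotonic()
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return time.monotonic() - start


def fake_boot(vm_index):
    # Placeholder: a real run would start VM number vm_index and block
    # until the guest has finished booting.
    time.sleep(0.01)


if __name__ == "__main__":
    # x-axis: 1..15 VMs; y-axis: total wall-clock time for that batch.
    for x in range(1, 16):
        print(x, round(time_concurrent(x, fake_boot), 3))
```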

Edit 2:

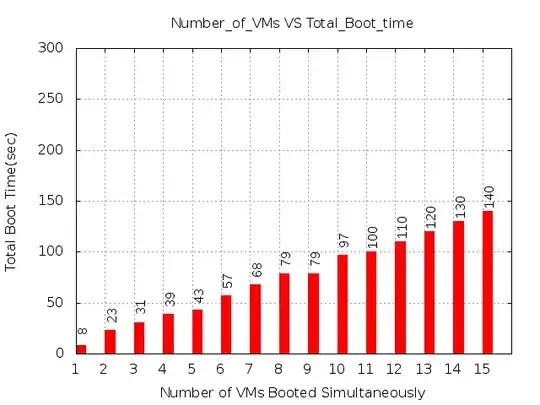

This graph is for the case where each VM gets its own raw image, measured after flushing the cache. It is almost the same as the graph for the raw base image with qcow2 overlays.
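One common way to flush the host page cache on Linux between runs is to sync and then write `3` to `/proc/sys/vm/drop_caches` (this needs root). The sketch below assumes that mechanism; the helper name `drop_page_cache` is made up for illustration.

```python
import os


def drop_page_cache(control_file="/proc/sys/vm/drop_caches"):
    """Ask the Linux kernel to drop clean page cache, dentries and inodes."""
    # Write dirty pages to disk first; only clean pages can be dropped.
    os.sync()
    # 1 = page cache, 2 = dentries and inodes, 3 = both.
    with open(control_file, "w") as f:
        f.write("3\n")
```

Calling `drop_page_cache()` before each batch of boots keeps a previous run from serving disk reads out of RAM.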

Thanks.