1. Create a new EBS volume and attach it to the instance.

Create a new EBS volume. For example, if the original root volume is 20G and you want to shrink it to 8G, create a new 8G EBS volume. Make sure it is in the same Availability Zone as the instance, because an EBS volume can only be attached to an instance in its own Availability Zone. Then attach it to the instance whose root partition you want to shrink.
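If you prefer the AWS CLI to the console, this step can be sketched as below. It is a minimal sketch, assuming a configured AWS CLI with EC2 permissions; the function name create_and_attach, the gp3 volume type and the default device name /dev/sdf are illustrative assumptions, not part of the original procedure.

```shell
# Hypothetical helper (not from the original article): create an EBS volume
# in the given Availability Zone and attach it to an instance.
# Usage: create_and_attach SIZE_GIB AVAILABILITY_ZONE INSTANCE_ID [DEVICE]
create_and_attach() {
    local size=$1 az=$2 instance_id=$3 device=${4:-/dev/sdf} volume_id

    # Create the volume; --query/--output make the CLI print only its ID.
    volume_id=$(aws ec2 create-volume \
        --size "$size" \
        --availability-zone "$az" \
        --volume-type gp3 \
        --query VolumeId --output text) || return 1

    # Block until the volume is ready to be attached.
    aws ec2 wait volume-available --volume-ids "$volume_id" || return 1

    # Attach it as a secondary device (assumed device name; adjust as needed).
    aws ec2 attach-volume \
        --volume-id "$volume_id" \
        --instance-id "$instance_id" \
        --device "$device" >/dev/null || return 1

    echo "$volume_id"
}
```

For example, create_and_attach 8 us-east-1a i-0123456789abcdef0 prints the ID of the new volume (the Availability Zone and instance ID here are placeholders).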

2. Partition, format, and synchronize files on the newly created EBS volume.

(1) Check the partition layout

First, run sudo parted -l to check the partition layout of the original volume:

```
[root@ip-172-31-16-92 conf.d]# sudo parted -l
Model: NVMe Device (nvme)
Disk /dev/nvme0n1: 20G
Sector size (logical/physical): 512B/512B
Partition Table: gpt
Disk Flags:

Number  Start   End      Size    File system  Name  Flags
 1      1049kB  2097kB   1049kB               bbp   bios_grub
 2      2097kB  20480MB  24G     xfs          root
```

This 20G root volume has two partitions, one named bbp and the other named root. The bbp partition has no file system but carries the bios_grub flag, which indicates that the system boots with GRUB in BIOS (legacy) mode. The output also shows that the volume uses a GPT partition table.

The bios_grub flag marks a BIOS boot partition: on a GPT disk booted in BIOS mode, GRUB stores its core image there, because GPT leaves no free gap after the MBR for it. For more details, see:

https://en.wikipedia.org/wiki/BIOS_boot_partition

https://www.cnblogs.com/f-ck-need-u/p/7084627.html

The BIOS boot partition is only about 1MB. The partition to focus on is root, which stores all the files of the original system.

So the plan is to copy the files from this partition to a smaller partition on the new EBS volume.
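Because the files must fit on the smaller volume, it is worth checking the used space first. A minimal sketch, assuming GNU df and util-linux lsblk; fits_on_disk is a hypothetical name, and the 10% headroom is an arbitrary margin, not a figure from the original article.

```shell
# Hypothetical helper: succeed only if the data used on MOUNTPOINT fits on
# DISK with some headroom left for file system metadata.
# Usage: fits_on_disk MOUNTPOINT DISK
fits_on_disk() {
    local used size
    # Bytes in use on the source file system (GNU df; last line is the value).
    used=$(df -B1 --output=used "$1" | tail -n 1 | tr -d ' ')
    # Raw size of the target disk in bytes (-d: disk only, -n: no header).
    size=$(lsblk -b -d -n -o SIZE "$2" | tr -d ' ')
    [ -n "$used" ] && [ -n "$size" ] || return 1
    # Require roughly 10% headroom: used * 10/9 must stay below the disk size.
    [ $((used * 10 / 9)) -lt "$size" ]
}
```

For example, once the new volume is attached, fits_on_disk / /dev/nvme1n1 && echo "enough space" confirms that the copy can fit.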

(2) Use parted to partition the new EBS volume, then format it.

Use lsblk to list the block devices:

```
NAME        MAJ:MIN RM SIZE RO TYPE MOUNTPOINT
nvme0n1     259:0    0  20G  0 disk
├─nvme0n1p1 259:1    0   1M  0 part
└─nvme0n1p2 259:2    0  20G  0 part /
nvme1n1     270:0    0   8G  0 disk
```

The new EBS volume is the device nvme1n1 (8G, with no partitions yet), and we need to partition it.
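On Nitro-based instances such as this one, which expose EBS volumes as NVMe devices, the NVMe name (nvme1n1) does not necessarily follow the device name you chose when attaching the volume. For EBS volumes, the NVMe serial number is the volume ID without its hyphen, so a sketch like the following can confirm which device is the new volume. It assumes util-linux lsblk with the SERIAL column; find_ebs_device is a hypothetical name.

```shell
# Hypothetical helper: print the /dev path of the NVMe disk that backs a given
# EBS volume ID (for example vol-0123456789abcdef0).
# Usage: find_ebs_device VOLUME_ID
find_ebs_device() {
    local serial
    # EBS exposes the volume ID without its hyphen as the NVMe serial number.
    serial=$(printf '%s' "$1" | sed 's/-//g')
    # List whole disks with their serial numbers and print the matching one.
    lsblk -d -n -o NAME,SERIAL | awk -v s="$serial" '$2 == s { print "/dev/" $1 }'
}
```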

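```
~# parted /dev/nvme1n1
GNU Parted 3.2
Using /dev/nvme1n1
Welcome to GNU Parted! Type 'help' to view a list of commands.
(parted) mklabel gpt
(parted) mkpart bbp 1MiB 2MiB
(parted) set 1 bios_grub on
(parted) mkpart root xfs 2MiB 100%
(parted) quit
```

What each command does:

mklabel gpt creates a GPT partition table, like the one on the original volume. GPT itself only occupies the first 34 sectors (plus a backup copy at the end of the disk), but partitions conventionally start at 1MiB for alignment, just like on the original volume, whose first partition starts at 1049kB (1MiB).

mkpart bbp 1MiB 2MiB creates the 1MiB BIOS boot partition, named bbp, from 1MiB to 2MiB.

set 1 bios_grub on marks partition 1 as the BIOS boot partition.

mkpart root xfs 2MiB 100% gives the remaining space, from 2MiB to the end of the disk, to the root partition. The xfs argument only sets the partition type; the file system itself is created with mkfs.xfs below.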
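The same layout can also be created non-interactively with parted's script mode (-s), which avoids the prompts if you repeat the procedure. A sketch, assuming the disk passed in is the new, empty volume; partition_new_root is a hypothetical name. mklabel destroys any existing partition table, so double-check the device name before running it.

```shell
# Hypothetical helper: recreate the layout from the session above on DISK:
# a 1 MiB BIOS boot partition named bbp, then a root partition filling the rest.
# WARNING: mklabel replaces the partition table, destroying data on DISK.
# Usage: partition_new_root DISK
partition_new_root() {
    parted -s "$1" \
        mklabel gpt \
        mkpart bbp 1MiB 2MiB \
        set 1 bios_grub on \
        mkpart root xfs 2MiB 100%
}
```

For example, partition_new_root /dev/nvme1n1.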

After partitioning, run lsblk again:

```
NAME        MAJ:MIN RM SIZE RO TYPE MOUNTPOINT
nvme0n1     259:0    0  20G  0 disk
├─nvme0n1p1 259:1    0   1M  0 part
└─nvme0n1p2 259:2    0  20G  0 part /
nvme1n1     270:0    0   8G  0 disk
├─nvme1n1p1 270:1    0   1M  0 part
└─nvme1n1p2 270:2    0   8G  0 part
```

You can see that there are two more partitions, nvme1n1p1 and nvme1n1p2, where nvme1n1p2 is our new root partition.

Use the following command to format the partition:

```
mkfs.xfs /dev/nvme1n1p2
```

After formatting, mount the partition, for example at /mnt/myroot:

```
mkdir -p /mnt/myroot
mount /dev/nvme1n1p2 /mnt/myroot
```

(3) Use rsync to copy all content to the root partition of the new volume.

```
sudo rsync -axv / /mnt/myroot/
```

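Note that the -x (--one-file-system) option is very important. We are copying the root directory of the running instance, and without -x rsync would also descend into every file system mounted below /, including /mnt/myroot itself, so it would try to copy the new volume into itself (as well as the contents of /proc, /sys and /dev). With -x, rsync stays on the root file system and only creates empty directories for those mount points. Excluding the mount points with --exclude also works.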

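Unlike cp, which copies every file again on each run, rsync only transfers files that are missing or have changed at the destination. If the copy is interrupted, or you re-run it to pick up recent changes, only the differences are transferred, which saves a lot of time.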
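If you want to preserve more metadata than -a covers, a variant of the copy is sketched below. This is an assumption, not the command used in the original article: -A, -X and -H additionally preserve ACLs, extended attributes (for example SELinux labels) and hard links, which -a alone does not, and a second pass with --delete picks up changes made during the first pass and removes files that were deleted from the source in the meantime. sync_root is a hypothetical name.

```shell
# Hypothetical helper: copy the running root file system to TARGET_DIR.
# Usage: sync_root TARGET_DIR
sync_root() {
    local target=$1
    # -a archive mode, plus -A (ACLs), -X (extended attributes) and
    # -H (hard links); -x keeps rsync on the root file system.
    rsync -aAXHx / "$target/" || return 1
    # Second pass: only transfers files changed since the first pass, and
    # --delete removes files from the target that no longer exist in /.
    rsync -aAXHx --delete / "$target/"
}
```

For example, run sync_root /mnt/myroot as root.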

Grab a coffee and wait for the synchronization to finish.

3. Replace the UUIDs in the boot configuration files.

Because the root file system now lives on a new volume and was created by mkfs.xfs, its UUID has changed, so we need to replace the old UUID in the boot configuration files.

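The following two files on the new volume need to be modified. The paths are relative to the new root file system, so while it is mounted they live under /mnt/myroot:

/boot/grub2/grub.cfg (or /boot/grub/grub.cfg on some distributions)

/etc/fstab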

To find out which UUIDs to change, list the block device attributes with blkid:

```
[root@ip-172-31-16-92 boot]# sudo blkid
/dev/nvme0n1p2: LABEL="/" UUID="add39d87-732e-4e76-9ad7-40a00dbb04e5" TYPE="xfs" PARTLABEL="Linux" PARTUUID="47de1259-f7c2-470b-b49b-5e054f378a95"
/dev/nvme1n1p2: UUID="566a022f-4cda-4a8a-8319-29344c538da9" TYPE="xfs" PARTLABEL="root" PARTUUID="581a7135-b164-4e9a-8ac4-a8a17db65bef"
/dev/nvme0n1: PTUUID="33e98a7e-ccdf-4af7-8a35-da18e704cdd4" PTTYPE="gpt"
/dev/nvme0n1p1: PARTLABEL="BIOS Boot Partition" PARTUUID="430fb5f4-e6d9-4c53-b89f-117c8989b982"
/dev/nvme1n1: PTUUID="0dc70bf8-b8a8-405c-93e1-71c3b8a887c7" PTTYPE="gpt"
/dev/nvme1n1p1: PARTLABEL="bbp" PARTUUID="82075e65-ae7c-4a90-90a1-ea1a82a52f93"
```

The root partition of the old 20G volume (/dev/nvme0n1p2) has UUID add39d87-732e-4e76-9ad7-40a00dbb04e5, and the root partition of the new 8G volume (/dev/nvme1n1p2) has UUID 566a022f-4cda-4a8a-8319-29344c538da9. Use sed to replace the old UUID with the new one:

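```
# -i edits each file in place; without it, sed only prints the result.
# The files are the copies on the new volume, mounted at /mnt/myroot.
sed -i 's/add39d87-732e-4e76-9ad7-40a00dbb04e5/566a022f-4cda-4a8a-8319-29344c538da9/g' /mnt/myroot/boot/grub2/grub.cfg
sed -i 's/add39d87-732e-4e76-9ad7-40a00dbb04e5/566a022f-4cda-4a8a-8319-29344c538da9/g' /mnt/myroot/etc/fstab
```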
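Instead of copying UUIDs by hand, the lookup and the replacement can be scripted. A minimal sketch, assuming util-linux blkid and GNU sed; fs_uuid and replace_uuid are hypothetical names, and replace_uuid keeps a .bak copy of each edited file.

```shell
# Hypothetical helper: print the file system UUID of a partition.
# Usage: fs_uuid DEVICE
fs_uuid() {
    blkid -s UUID -o value "$1"
}

# Hypothetical helper: replace OLD_UUID with NEW_UUID in every FILE, in place,
# keeping a backup of each original file with a .bak suffix.
# Usage: replace_uuid OLD_UUID NEW_UUID FILE...
replace_uuid() {
    local old=$1 new=$2
    shift 2
    # UUIDs contain only hex digits and hyphens, so they are safe in a sed pattern.
    sed -i.bak "s/$old/$new/g" "$@"
}
```

For example: replace_uuid "$(fs_uuid /dev/nvme0n1p2)" "$(fs_uuid /dev/nvme1n1p2)" /mnt/myroot/boot/grub2/grub.cfg /mnt/myroot/etc/fstab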

Instead of editing grub.cfg with sed, you could also regenerate it with grub2-mkconfig and reinstall the boot loader with grub2-install (grub-mkconfig and grub-install on some distributions); sed is used here just for convenience.
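Keep in mind that rsync copies files only: the MBR and the bbp partition of the new disk do not contain any GRUB boot code yet, and a BIOS-booted instance needs that code to start from the new volume. A minimal sketch of installing it from the running system, assuming GRUB 2 under the grub2-install name used on Amazon Linux and RHEL-like systems (grub-install elsewhere); install_grub is a hypothetical name.

```shell
# Hypothetical helper: install GRUB's BIOS boot code on DISK, pointing it at
# the /boot directory of the new root file system mounted at ROOT_MNT.
# Usage: install_grub ROOT_MNT DISK
install_grub() {
    local root_mnt=$1 disk=$2
    # --target=i386-pc selects BIOS (legacy) boot; on a GPT disk GRUB embeds
    # its core image in the partition flagged bios_grub.
    # --boot-directory makes GRUB use the new volume's /boot, not the running one.
    grub2-install --target=i386-pc --boot-directory="$root_mnt/boot" "$disk"
}
```

For example, run install_grub /mnt/myroot /dev/nvme1n1 before unmounting the new volume.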

4. Detach both volumes, then re-attach the new small volume.

First, unmount the new EBS volume:

```
umount /mnt/myroot/
```

If umount reports that the target is busy, run fuser -mv /mnt/myroot to see which processes are using it. In my case it was bash, because the shell's working directory was still inside /mnt/myroot. Run cd to return to the home directory, then run the umount command again.
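The unmount and the busy check can be combined in a small function. A sketch; unmount_new_root is a hypothetical name. It has to run in your interactive shell (for example after sourcing it), because its cd / is meant to move that shell out of the mount point.

```shell
# Hypothetical helper: unmount DIR, and if it is busy, list the processes
# that still use it.
# Usage: unmount_new_root DIR
unmount_new_root() {
    # Move this shell out of DIR first, the cause of "target is busy" in the case above.
    cd / || return 1
    if ! umount "$1"; then
        # -m: processes using the mounted file system, -v: verbose output.
        fuser -mv "$1"
        return 1
    fi
}
```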

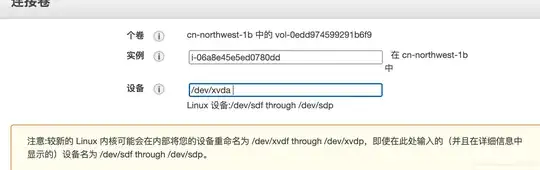

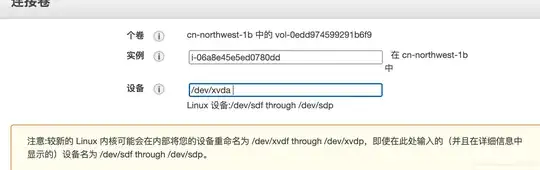

Then stop the instance, detach both volumes, and re-attach the new volume as the root device by entering the root device name, /dev/xvda, as shown below:

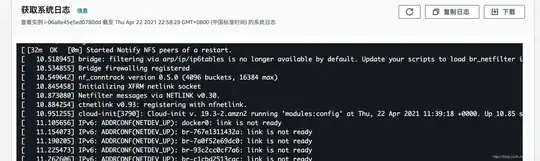

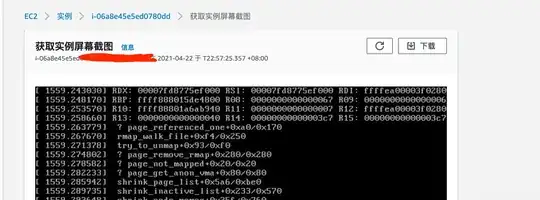
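The console steps above can also be done with the AWS CLI. A minimal sketch, assuming a configured AWS CLI; swap_root_volume is a hypothetical name, and the default root device name /dev/xvda comes from this article. The name must match the instance's root device name, which aws ec2 describe-instances reports as RootDeviceName.

```shell
# Hypothetical helper: stop the instance, replace its root volume with
# NEW_VOLUME_ID, and start it again.
# Usage: swap_root_volume INSTANCE_ID OLD_VOLUME_ID NEW_VOLUME_ID [ROOT_DEVICE]
swap_root_volume() {
    local instance=$1 old=$2 new=$3 root_dev=${4:-/dev/xvda}

    # The root volume can only be detached while the instance is stopped.
    aws ec2 stop-instances --instance-ids "$instance" >/dev/null || return 1
    aws ec2 wait instance-stopped --instance-ids "$instance" || return 1

    # Detach both volumes and wait until they are available again.
    aws ec2 detach-volume --volume-id "$old" >/dev/null || return 1
    aws ec2 detach-volume --volume-id "$new" >/dev/null || return 1
    aws ec2 wait volume-available --volume-ids "$old" "$new" || return 1

    # Attach the new volume under the root device name and wait for it.
    aws ec2 attach-volume --volume-id "$new" --instance-id "$instance" \
        --device "$root_dev" >/dev/null || return 1
    aws ec2 wait volume-in-use --volume-ids "$new" || return 1

    # Boot from the new, smaller root volume.
    aws ec2 start-instances --instance-ids "$instance" >/dev/null
}
```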

Then start the instance. If you cannot connect over SSH, you can debug with the following methods:

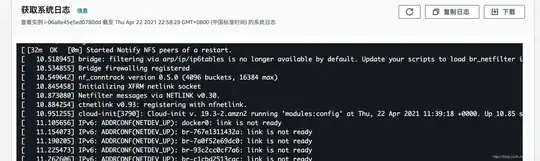

1. Get system log

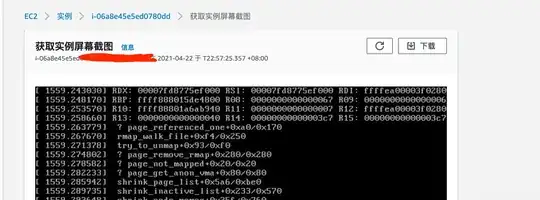

2. Get screenshot
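Both diagnostics are also available from the AWS CLI. A minimal sketch; collect_boot_diagnostics and the output file names are hypothetical. get-console-output returns the system log, and get-console-screenshot returns the screenshot as base64-encoded JPEG data.

```shell
# Hypothetical helper: save the console output (system log) and a console
# screenshot of an instance into OUTPUT_DIR for troubleshooting.
# Usage: collect_boot_diagnostics INSTANCE_ID [OUTPUT_DIR]
collect_boot_diagnostics() {
    local instance=$1 dir=${2:-.}
    # The system log is returned in the Output field.
    aws ec2 get-console-output --instance-id "$instance" \
        --query Output --output text > "$dir/console.log" || return 1
    # The screenshot is returned as base64-encoded JPEG data in ImageData.
    aws ec2 get-console-screenshot --instance-id "$instance" \
        --query ImageData --output text | base64 -d > "$dir/screenshot.jpg"
}
```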

References:

1. https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/TroubleshootingInstances.html#InitialSteps

2. https://www.daniloaz.com/en/partitioning-and-resizing-the-ebs-root-volume-of-an-aws-ec2-instance/

3. https://medium.com/@m.yunan.helmy/decrease-the-size-of-ebs-volume-in-your-ec2-instance-ea326e951bce