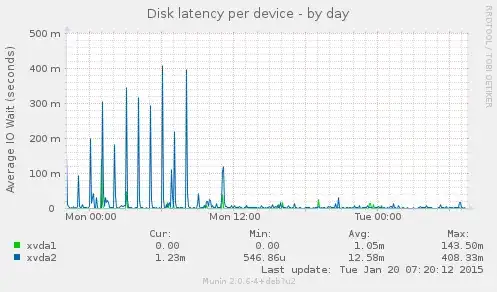

I have a server (Debian 6 LTS) with a 3Ware 9650 SE RAID controller. It has two arrays, one RAID1 and one RAID6, and it runs Xen 4.0 with about 18 DomUs. The problem is that IO tasks starve each other very easily. It happens when one DomU generates a lot of IO, blocking the others for minutes at a time, but it also just happened while running dd.

To move a DomU off a busy RAID array, I used dd. While doing that, not only did Nagios report other VMs as unresponsive, I also got this notice on the Dom0:

    [2015-01-14 00:38:07] INFO: task kdmflush:1683 blocked for more than 120 seconds.
    [2015-01-14 00:38:07] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.
    [2015-01-14 00:38:07] kdmflush D 0000000000000002 0 1683 2 0x00000000
    [2015-01-14 00:38:07] ffff88001fd37810 0000000000000246 ffff88001f742a00 ffff8800126c4680
    [2015-01-14 00:38:07] ffff88000217e400 00000000aae72d72 000000000000f9e0 ffff88000e65bfd8
    [2015-01-14 00:38:07] 00000000000157c0 00000000000157c0 ffff880002291530 ffff880002291828
    [2015-01-14 00:38:07] Call Trace:
    [2015-01-14 00:38:07] [<ffffffff8106ce4e>] ? timekeeping_get_ns+0xe/0x2e
    [2015-01-14 00:38:07] [<ffffffff8130deb2>] ? io_schedule+0x73/0xb7
    [2015-01-14 00:38:07] [<ffffffffa0175bd6>] ? dm_wait_for_completion+0xf5/0x12a [dm_mod]
    [2015-01-14 00:38:07] [<ffffffff8104b52e>] ? default_wake_function+0x0/0x9
    [2015-01-14 00:38:07] [<ffffffffa01768c3>] ? dm_flush+0x1b/0x59 [dm_mod]
    [2015-01-14 00:38:07] [<ffffffffa01769b9>] ? dm_wq_work+0xb8/0x167 [dm_mod]
    [2015-01-14 00:38:07] [<ffffffff81062cfb>] ? worker_thread+0x188/0x21d
    [2015-01-14 00:38:07] [<ffffffffa0176901>] ? dm_wq_work+0x0/0x167 [dm_mod]
    [2015-01-14 00:38:07] [<ffffffff81066336>] ? autoremove_wake_function+0x0/0x2e
    [2015-01-14 00:38:07] [<ffffffff81062b73>] ? worker_thread+0x0/0x21d
    [2015-01-14 00:38:07] [<ffffffff81066069>] ? kthread+0x79/0x81
    [2015-01-14 00:38:07] [<ffffffff81012baa>] ? child_rip+0xa/0x20
    [2015-01-14 00:38:07] [<ffffffff81011d61>] ? int_ret_from_sys_call+0x7/0x1b
    [2015-01-14 00:38:07] [<ffffffff8101251d>] ? retint_restore_args+0x5/0x6
    [2015-01-14 00:38:07] [<ffffffff81012ba0>] ? child_rip+0x0/0x20
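
To line these notices up with the times Nagios flagged the VMs, the hung-task lines can be pulled out of the kernel log. The following is only a rough sketch: the function name `find_hung_tasks` and the regular expression are my own, and it assumes the message wording matches the notice quoted above.

```python
import re

# Matches the kernel's hung-task notice as it appears in the log above.
HUNG_TASK = re.compile(
    r"INFO: task (?P<name>.+):(?P<pid>\d+) blocked for more than (?P<secs>\d+) seconds"
)


def find_hung_tasks(lines):
    """Return a (task name, pid, timeout in seconds) tuple per hung-task notice."""
    hits = []
    for line in lines:
        match = HUNG_TASK.search(line)
        if match:
            hits.append(
                (match.group("name"), int(match.group("pid")), int(match.group("secs")))
            )
    return hits


sample = [
    "[2015-01-14 00:38:07] INFO: task kdmflush:1683 blocked for more than 120 seconds.",
    "[2015-01-14 00:38:07] Call Trace:",
]
print(find_hung_tasks(sample))
```

Feeding it the lines of the Dom0 kernel log would give one tuple per blocked task, which can then be compared against the monitoring timestamps.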

I tried both the deadline and CFQ schedulers. With CFQ, setting the blkback backend processes to realtime IO priority does not make a DomU any more responsive.
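
For reference, this is roughly how the active scheduler of a block device can be checked. The kernel lists the available schedulers in `/sys/block/<device>/queue/scheduler` and puts the active one in square brackets. This is a minimal sketch: the helper names `parse_schedulers` and `active_scheduler` are made up, and a device name such as `sda` is only a placeholder for whatever the 3Ware units show up as.

```python
from pathlib import Path


def parse_schedulers(text):
    """Split a sysfs scheduler line into (active, available).

    The active scheduler is the entry in square brackets,
    for example "noop deadline [cfq]".
    """
    active = None
    available = []
    for name in text.split():
        if name.startswith("[") and name.endswith("]"):
            name = name[1:-1]
            active = name
        available.append(name)
    return active, available


def active_scheduler(device, sysfs_root="/sys/block"):
    """Read the active scheduler for a block device (device name is a placeholder)."""
    path = Path(sysfs_root) / device / "queue" / "scheduler"
    return parse_schedulers(path.read_text())[0]


print(parse_schedulers("noop deadline [cfq]"))
```

Running `active_scheduler` for each array's device is a quick way to confirm that a scheduler change actually applied to both arrays before comparing behaviour.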

I gave the Dom0 a sched-credit weight of 10000, since it needs a higher weight to serve all the IO of the DomUs (and in my case doesn't do much else). But whatever I set that to, it should not influence the dd command and the kdmflush task it blocked, since all of that runs in the Dom0.

This is the tw_cli output. A disk failed recently, hence the INITIALIZING status. That is unrelated, because the problem has existed for a long time:

    Unit  UnitType  Status         %RCmpl  %V/I/M  Stripe  Size(GB)  Cache  AVrfy
    ------------------------------------------------------------------------------
    u0    RAID-6    INITIALIZING   -       89%(A)  256K    5587.9    RiW    ON
    u2    RAID-1    OK             -       -       -       1862.63   RiW    ON

    VPort  Status  Unit  Size     Type  Phy  Encl-Slot  Model
    ------------------------------------------------------------------------------
    p1     OK      u0    1.82 TB  SATA  1    -          WDC WD2000FYYZ-01UL
    p2     OK      u0    1.82 TB  SATA  2    -          ST32000542AS
    p3     OK      u0    1.82 TB  SATA  3    -          WDC WD2002FYPS-02W3
    p4     OK      u0    1.82 TB  SATA  4    -          ST32000542AS
    p5     OK      u0    1.82 TB  SATA  5    -          WDC WD2003FYYS-02W0
    p6     OK      u2    1.82 TB  SATA  6    -          WDC WD2002FYPS-02W3
    p7     OK      u2    1.82 TB  SATA  7    -          WDC WD2002FYPS-02W3

    Name  OnlineState  BBUReady  Status  Volt  Temp  Hours  LastCapTest
    ---------------------------------------------------------------------------
    bbu   On           Yes       OK      OK    OK    0      xx-xxx-xxxx

I find this really bizarre and annoying. I have a feeling this is a quirk of the RAID controller, since other machines with software RAID perform much better.

I hope someone can enlighten me.