Disclaimer: No offense, but this is a really bad idea. I do not recommend that anyone do this in real life.

But if you give a bored IT guy a lab, funny things will happen!

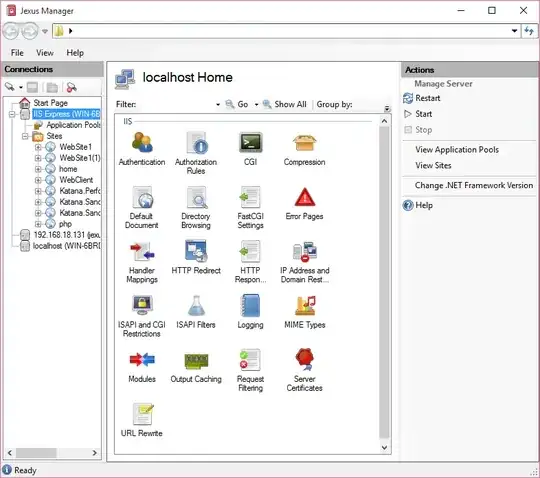

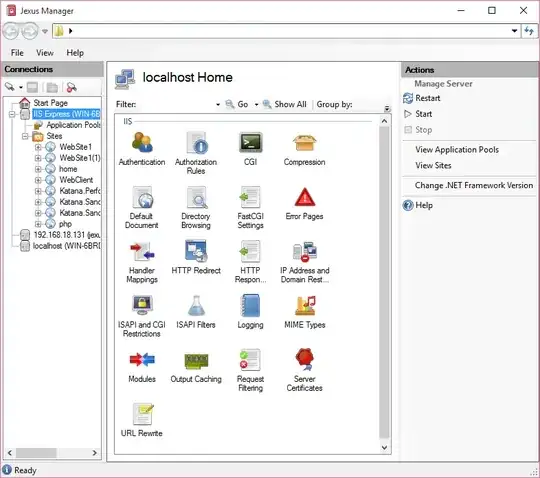

For this experiment, I used a Microsoft DNS server running on Server 2012 R2. Because of the complications of hosting a DNS zone in Active Directory, I created a new primary zone named testing.com that is not AD-integrated.

Using this script:

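    # Adds one A record per address to the name "testing" in the zone testing.com.
    # Each octet loops from 1 through 255, so addresses containing a 0 octet are skipped.
    $Count = 1
    for ($x = 1; $x -lt 256; $x++)
    {
        for ($y = 1; $y -lt 256; $y++)
        {
            for ($z = 1; $z -lt 256; $z++)
            {
                # Progress output: the address being added and the running total
                Write-Host "1.$x.$y.$z`t( $Count )"
                $Count++
                dnscmd . /RecordAdd testing.com testing A 1.$x.$y.$z
            }
        }
    }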

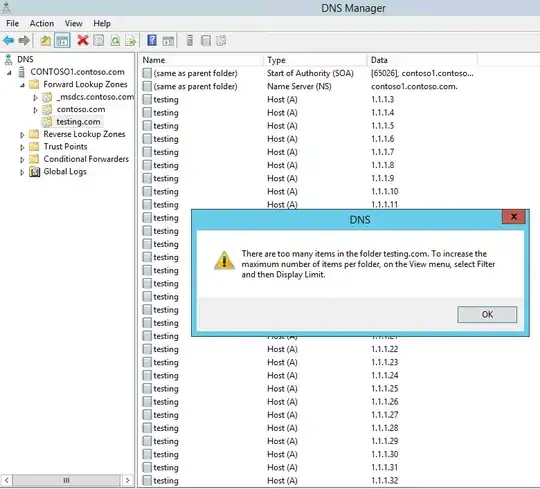

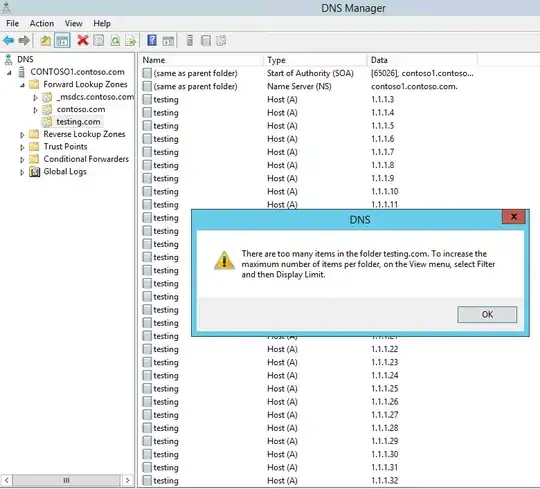

I proceeded to create, without error, 65025 host records for the name testing.testing.com., one for every IPv4 address from 1.1.1.1 to 1.1.255.255 that has no zero octet (255 × 255 = 65025).

Then I wanted to make sure that I could break through 65536 (2^16) total A records without error, and I could. I assume I could have gone all the way to 16581375 (1.1.1.1 to 1.255.255.255), but I didn't want to sit here and watch this script run all night.
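The counts above follow directly from the loop bounds. As a rough sketch (the function name `Get-NthAddress` is made up for illustration and is not part of the script I ran), this maps the script's `$Count` value back to the address being added at that point:

```powershell
# Hypothetical helper: maps the script's running $Count to the address added on that iteration.
# Assumes the same loop bounds as the script: each of x, y, z runs from 1 through 255.
function Get-NthAddress {
    param([int]$Count)
    $i = $Count - 1            # zero-based position in the loop order
    $z = $i % 255              # the innermost loop changes fastest
    $i = ($i - $z) / 255
    $y = $i % 255
    $x = ($i - $y) / 255
    "1.$($x + 1).$($y + 1).$($z + 1)"
}

$perOctet = 255
$perOctet * $perOctet               # records in one full pass of the two inner loops
$perOctet * $perOctet * $perOctet   # records if all three loops run to completion
Get-NthAddress -Count 65025         # last address of the first pass
Get-NthAddress -Count 65537         # first address past the 65536 mark
```

The two products come out to 65025 and 16581375, and the two lookups return 1.1.255.255 and 1.2.3.2, so the script only has to get a little way into the 1.2.x.x range to pass 65536.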

So I think it's safe to say that there's no practical limit to the number of A records you can add to a zone for the same name with different IPs on your server.

But will it actually work from a client's perspective?

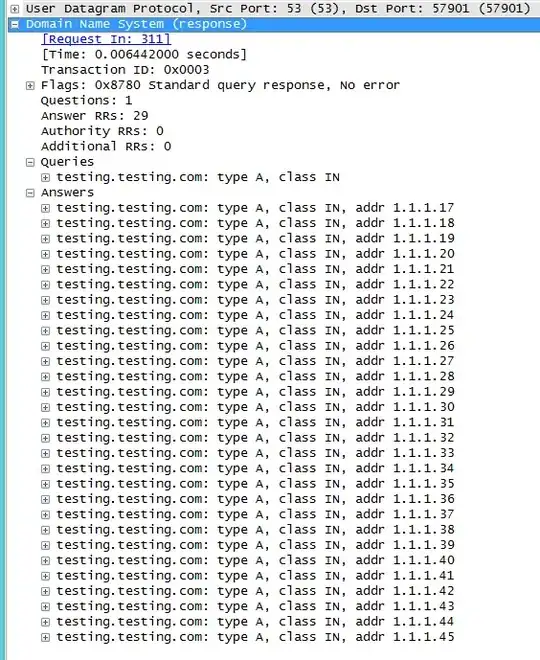

Here is what I get from my client as viewed by Wireshark:

(Open the image in a new browser tab for full size.)

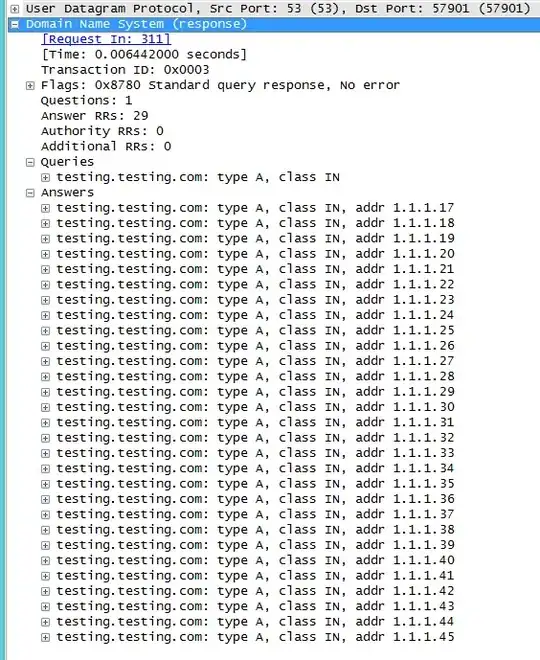

(Open the image in a new browser tab for full size.)

As you can see, when I use nslookup or ping from my client, it automatically issues two queries: one over UDP and one over TCP. Without EDNS0, a DNS response over UDP is limited to 512 bytes, so once the answer exceeds that limit (at roughly 20-30 IP addresses), the server sends back a truncated response and the client retries over TCP. But even with TCP, I only get a very small subset of the A records for testing.testing.com: 1000 records were returned per TCP query. The list of A records rotates by 1 with each successive query, exactly how you would expect round-robin DNS to work. At that rate, it would take tens of thousands of queries to round-robin through all of the records I created, and millions to get through a full set of 16581375.
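To put a number on the "20-30 IP addresses" estimate, here is a back-of-the-envelope sketch based on the RFC 1035 wire format. It assumes no EDNS0, owner names in the answers compressed down to a 2-byte pointer, and no authority or additional records in the response. `Get-QuestionSize` is a made-up helper name.

```powershell
# Rough DNS message-size arithmetic based on the RFC 1035 wire format.
# Assumptions: no EDNS0, answer names compressed to a 2-byte pointer,
# and no authority or additional records in the response.
function Get-QuestionSize {
    param([string]$Name)
    # QNAME: one length byte per label plus the label itself, then a terminating zero byte
    $qname = 1
    foreach ($label in $Name.TrimEnd('.').Split('.')) { $qname += 1 + $label.Length }
    $qname + 4                      # plus QTYPE (2 bytes) and QCLASS (2 bytes)
}

$header   = 12                      # fixed DNS header
$question = Get-QuestionSize -Name 'testing.testing.com'
$aRecord  = 2 + 2 + 2 + 4 + 2 + 4   # name pointer, type, class, TTL, RDLENGTH, IPv4 address

# How many A records fit in a 512-byte UDP response
$maxUdpRecords = [int][math]::Floor((512 - $header - $question) / $aRecord)

# Approximate size of a 1000-record response over TCP (DNS message only)
$tcpResponseBytes = $header + $question + 1000 * $aRecord

"A records that fit in 512 bytes: $maxUdpRecords"
"Bytes in a 1000-record response: $tcpResponseBytes"
```

Under those assumptions, 29 records fit in a UDP response, which lines up with the estimate above, and a 1000-record answer is 16037 bytes for the DNS message alone, before any TCP/IP overhead.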

I don't see how this is going to help you make your massively scalable, resilient social media network, but there's your answer nevertheless.

Edit: In your follow-up comment, you ask why I think this is generally a bad idea.

Let's say I am an average internet user, and I would like to connect to your service. I type www.bozho.biz into my web browser. The DNS client on my computer gets back 1000 records. Well, bad luck, the first 30 records in the list are non-responsive because the list of A records isn't kept up to date, or maybe there's a large-scale outage affecting a chunk of the internet. Let's say my web browser has a time-out of 5 seconds per IP before it moves on and tries the next one. So now I am sitting here staring at a spinning hourglass for 2 and a half minutes waiting for your site to load. Ain't nobody got time for that. And I'm just assuming that my web browser or whatever application I use to access your service is even going to attempt more than the first 4 or 5 IP addresses. It probably won't.
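The waiting-time arithmetic can be sketched as below. This is a toy model, not how any particular browser behaves: `Get-ConnectDelay` and its parameters are hypothetical, and `$MaxAttempts` simply stands in for "gives up after the first few addresses".

```powershell
# Hypothetical model of a client that tries addresses in order with a fixed timeout.
# $MaxAttempts is an assumption standing in for "gives up after the first few addresses".
function Get-ConnectDelay {
    param(
        [int]$DeadRecords,       # unresponsive addresses at the front of the list
        [int]$TimeoutSeconds,    # time spent waiting on each dead address
        [int]$MaxAttempts        # number of addresses the client is willing to try
    )
    if ($DeadRecords -ge $MaxAttempts) {
        return $null             # client gives up before reaching a live address
    }
    $DeadRecords * $TimeoutSeconds   # seconds wasted before the first live address
}

Get-ConnectDelay -DeadRecords 30 -TimeoutSeconds 5 -MaxAttempts 1000   # patient client
Get-ConnectDelay -DeadRecords 30 -TimeoutSeconds 5 -MaxAttempts 5      # client that stops early
```

The patient client waits 150 seconds, the two and a half minutes from the example. The client that stops after 5 attempts gets nothing back and never connects at all.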

If you used automatic scavenging and allowed non-validated or anonymous updates to the DNS zone in the hopes of keeping the list of A records fresh... just imagine how insecure that would be! Even if you engineered some system where the clients needed a client TLS certificate that they got from you beforehand in order to update the zone, one compromised client anywhere on the planet is going to start a botnet and destroy your service. Traditional DNS is precariously insecure as it is, without crowd-sourcing it.

Humongous bandwidth usage and waste. If every DNS query requires 32 kilobytes or more of bandwidth, that's not going to scale well at all.
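For a sense of scale, here is a quick estimate using the 32 KB per-query figure. The daily query volume is a made-up number purely for illustration.

```powershell
# Rough traffic estimate. The 32 KB per-query figure comes from the text above;
# the daily query volume is a made-up number for illustration only.
$bytesPerQuery = [long]32KB
$queriesPerDay = 1000000
$bytesPerDay   = $bytesPerQuery * $queriesPerDay
"{0:N1} GB per day" -f ($bytesPerDay / 1GB)
```

With those inputs that is roughly 30 GB a day of DNS traffic alone, before a single byte of actual content is served.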

DNS round-robin is no substitute for proper load balancing. It provides no way to recover from one node going down or becoming unavailable in the middle of things. Are you going to instruct your users to run ipconfig /flushdns if the node they were connected to goes down? These sorts of issues have already been solved by things like GSLB and Anycast.

Etc.
