I am developing a simple REST-style web application consisting of two basic modules.

Module#1: server exposing REST web services, stateless, deployed in Tomcat

Module#2: REST client

Currently there is one Tomcat instance with Module#1 deployed.

I would like to scale it horizontally by running a second Tomcat on a second machine. I'm a complete novice when it comes to load balancing and clustering, which is why I need help. There's no need for session replication or failover.

How should I approach it?

I did some research, and these are the possible approaches that I see:

1. No cluster, no third party proxy.

I run a second Tomcat on the second machine. Since I control both the client and the server, I can implement a very basic algorithm on the client side that randomly picks a host before each API call. There would be no need to configure a cluster or to set up a third-party proxy. Are there any potential pitfalls? Is this a correct approach?
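To make this approach concrete, here is roughly what I have in mind on the client side. It is only a sketch under my own assumptions: the class name `RandomHostSelector`, the host names, and the `/api/items` path are placeholders I made up, and it assumes Java 11 or later.

```java
import java.net.URI;
import java.util.List;
import java.util.concurrent.ThreadLocalRandom;

// Hypothetical helper: picks one of the known Tomcat hosts at random per call.
final class RandomHostSelector {
    private final List<URI> hosts;

    RandomHostSelector(List<URI> hosts) {
        if (hosts == null || hosts.isEmpty()) {
            throw new IllegalArgumentException("at least one host is required");
        }
        // Defensive, immutable copy so the list cannot change underneath us.
        this.hosts = List.copyOf(hosts);
    }

    // Returns a uniformly random base URI from the configured hosts.
    URI pick() {
        int index = ThreadLocalRandom.current().nextInt(hosts.size());
        return hosts.get(index);
    }

    // Builds the full request URI for an absolute path such as "/api/items".
    URI resolve(String path) {
        return pick().resolve(path);
    }

    public static void main(String[] args) {
        // Placeholder host names; the real ones would come from configuration.
        RandomHostSelector selector = new RandomHostSelector(List.of(
                URI.create("http://tomcat-1.example:8080"),
                URI.create("http://tomcat-2.example:8080")));

        // Each call may target a different Tomcat instance.
        for (int i = 0; i < 5; i++) {
            System.out.println(selector.resolve("/api/items"));
        }
    }
}
```

The client would call `resolve(...)` before every request and pass the resulting URI to whatever HTTP client it already uses. As written, the sketch does no health checking, so a request sent to a host that is down would simply fail.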

2. Tomcat cluster

When it comes to Tomcat cluster configuration, does it mean that there are two Tomcats running on separate machines, and their configuration says that they form a cluster? Do I need a separate library or tool for that? Is Tomcat alone enough? Will I have two processes running, as in the first approach?

3. Tomcat load balancer

What are the differences between a Tomcat cluster and a Tomcat load balancer? Again, do I need a separate library or tool for that? Is Tomcat alone enough?

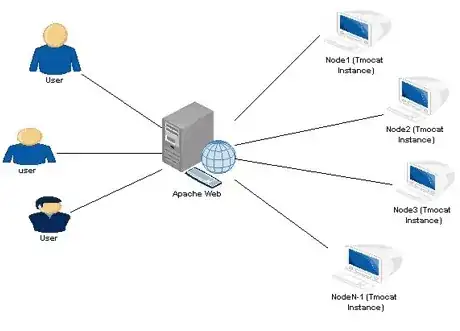

4. Third-party proxy

I found some information about tools like HAProxy. Does it mean that all calls go through it, and the proxy decides which host to choose? Does it mean that, apart from the two Tomcat processes, there will be a third process running separately? On which machine does this proxy run, assuming that I have two Tomcats on two separate machines?

Which one should I choose? Am I misunderstanding something? Links to articles and answers are appreciated.