A server was recently transported from location A to B, a long journey that took six months. Things have gone wrong due to nothing being labeled prior to shipment. Yes, I know --- it was done by others, but I am paying the price.

I must salvage the data and need help!

The system booted fine previously, but now it won't boot (not even a grub rescue -- the BIOS simply does nothing, and I've tried selecting each individual member of the array).

So I booted from a Debian 7 ISO on a USB stick and went to work in rescue mode, so far with little success.

The first thing I noticed was that the array was degraded and rebuilding onto a spare. This seems to be because one of the original array members is missing.

First, details of the array in its current state, after I booted in rescue mode:

# mdadm --detail /dev/md127

/dev/md127:

Version : 1.2

Creation Time : Wed Nov 7 16:06:02 2012

Raid Level : raid6

Array Size : 26370335232 (25148.71 GiB 27003.22 GB)

Used Dev Size : 2930037248 (2794.30 GiB 3000.36 GB)

Raid Devices : 11

Total Devices : 11

Persistence : Superblock is persistent

Update Time : Sat Sep 13 01:55:51 2014

State : clean, degraded, recovering

Active Devices : 10

Working Devices : 11

Failed Devices : 0

Spare Devices : 1

Layout : left-symmetric

Chunk Size : 512K

Rebuild Status : 45% complete

Name : media:0

UUID : b1c40379:914e5d18:dddb893b:4dc5a28f

Events : 2216394

Number   Major   Minor   RaidDevice   State
   0       8       82        0        active sync        /dev/sdf2
   1       8       97        1        active sync        /dev/sdg1
   2       8      129        2        active sync        /dev/sdi1
   3       8       33        3        active sync        /dev/sdc1
   4       8      161        4        active sync        /dev/sdk1
  12       8      192        5        spare rebuilding   /dev/sdm
   6       8      145        6        active sync        /dev/sdj1
   7       8       49        7        active sync        /dev/sdd1
   8       8       65        8        active sync        /dev/sde1
  10       8      224        9        active sync        /dev/sdo
  11       8      208       10        active sync        /dev/sdn
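As a sanity check on the sizes reported here: RAID 6 gives up two members' worth of space to parity, so the usable size should be (members - 2) x Used Dev Size. The sketch below just does that arithmetic with the KiB figures from the --detail output; the function name `raid6_size_kib` is made up for illustration.

```shell
# Usable RAID 6 capacity in KiB: two members' worth of space holds parity.
# Arguments: number of raid devices, per-device used size in KiB.
raid6_size_kib() {
    devices=$1
    used_dev_kib=$2
    echo $(( (devices - 2) * used_dev_kib ))
}

raid6_size_kib 11 2930037248   # prints 26370335232, the Array Size shown above
raid6_size_kib 10 2930037248   # prints 23440297984, the size of a 10-member layout
```

The second figure matters further down: any superblock that still reports "Raid Devices : 10" with an Array Size of 23440297984 describes the array as it was before it had 11 members, which suggests a stale member. Note that --examine reports Used Dev Size in 512-byte sectors (5860074496), which is the same 2930037248 KiB.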

Now let's try to install grub to /dev/md127.

# grub-install /dev/md127

error: found two disks with the index 9 for RAID md/0.

error: found two disks with the index 9 for RAID md/0.

error: superfluous RAID member (10 found).

error: superfluous RAID member (10 found).

error: found two disks with the index 9 for RAID md/0.

error: found two disks with the index 9 for RAID md/0.

error: superfluous RAID member (10 found).

error: superfluous RAID member (10 found).

error: found two disks with the index 9 for RAID md/0.

error: found two disks with the index 9 for RAID md/0.

error: superfluous RAID member (10 found).

error: superfluous RAID member (10 found).

error: found two disks with the index 9 for RAID md/0.

error: found two disks with the index 9 for RAID md/0.

error: superfluous RAID member (10 found).

error: superfluous RAID member (10 found).

error: found two disks with the index 9 for RAID md/0.

error: found two disks with the index 9 for RAID md/0.

error: superfluous RAID member (10 found).

error: superfluous RAID member (10 found).

Segmentation fault

Yikes, that's not good. What is the deal with these "found two disks with the index" and "superfluous RAID member" errors? Well, it turns out the disks were mixed up, and a few extra disks were installed into the system because it was unclear whether they were RAID members or not.

What happens if we try to install to an individual disk? It seems /dev/sdc is the first member (lowest):

# grub-install /dev/sdc

error: found two disks with the index 9 for RAID md/0.

error: found two disks with the index 9 for RAID md/0.

error: superfluous RAID member (10 found).

error: superfluous RAID member (10 found).

error: found two disks with the index 9 for RAID md/0.

error: found two disks with the index 9 for RAID md/0.

error: superfluous RAID member (10 found).

error: superfluous RAID member (10 found).

error: found two disks with the index 9 for RAID md/0.

error: found two disks with the index 9 for RAID md/0.

error: superfluous RAID member (10 found).

error: superfluous RAID member (10 found).

error: found two disks with the index 9 for RAID md/0.

error: found two disks with the index 9 for RAID md/0.

error: superfluous RAID member (10 found).

error: superfluous RAID member (10 found).

error: found two disks with the index 9 for RAID md/0.

error: found two disks with the index 9 for RAID md/0.

error: superfluous RAID member (10 found).

error: superfluous RAID member (10 found).

/usr/sbin/grub-setup: warn: This GPT partition label has no BIOS Boot Partition; embedding won't be possible!.

/usr/sbin/grub-setup: error: embedding is not possible, but this is required for cross-disk install.

OK, now I am starting to get nervous. I also tried other member disks, such as the last disk sdm:

# grub-install /dev/sdm

error: found two disks with the index 9 for RAID md/0.

error: found two disks with the index 9 for RAID md/0.

error: superfluous RAID member (10 found).

error: superfluous RAID member (10 found).

error: found two disks with the index 9 for RAID md/0.

error: found two disks with the index 9 for RAID md/0.

error: superfluous RAID member (10 found).

error: superfluous RAID member (10 found).

error: found two disks with the index 9 for RAID md/0.

error: found two disks with the index 9 for RAID md/0.

error: superfluous RAID member (10 found).

error: superfluous RAID member (10 found).

error: found two disks with the index 9 for RAID md/0.

error: found two disks with the index 9 for RAID md/0.

error: superfluous RAID member (10 found).

error: superfluous RAID member (10 found).

error: found two disks with the index 9 for RAID md/0.

error: found two disks with the index 9 for RAID md/0.

error: superfluous RAID member (10 found).

error: superfluous RAID member (10 found).

/usr/sbin/grub-setup: error: unable to identify a filesystem in hd12; safety check can't be performed.

Now we get a different error: unable to identify a filesystem. The filesystem on this mdadm array, BTW, is XFS, and it is functioning fine (thankfully).

# mdadm --examine /dev/sd?

/dev/sda:

MBR Magic : aa55

Partition[0] : 15633380 sectors at 13340 (type 0c)

/dev/sdb:

Magic : a92b4efc

Version : 1.2

Feature Map : 0x0

Array UUID : b1c40379:914e5d18:dddb893b:4dc5a28f

Name : media:0

Creation Time : Wed Nov 7 16:06:02 2012

Raid Level : raid6

Raid Devices : 10

Avail Dev Size : 5860271024 (2794.40 GiB 3000.46 GB)

Array Size : 23440297984 (22354.41 GiB 24002.87 GB)

Used Dev Size : 5860074496 (2794.30 GiB 3000.36 GB)

Data Offset : 262144 sectors

Super Offset : 8 sectors

State : clean

Device UUID : 8042b1e3:d9e305aa:f53be8b4:b74cc247

Update Time : Mon Nov 18 18:05:25 2013

Checksum : 5762ae4a - correct

Events : 2197822

Layout : left-symmetric

Chunk Size : 512K

Device Role : Active device 9

Array State : AAAAAAAAAA ('A' == active, '.' == missing)

/dev/sdc:

MBR Magic : aa55

Partition[0] : 4294967295 sectors at 1 (type ee)

/dev/sdd:

MBR Magic : aa55

Partition[0] : 4294967295 sectors at 1 (type ee)

/dev/sde:

MBR Magic : aa55

Partition[0] : 4294967295 sectors at 1 (type ee)

/dev/sdf:

MBR Magic : aa55

Partition[0] : 4294967295 sectors at 1 (type ee)

/dev/sdg:

MBR Magic : aa55

Partition[0] : 4294967295 sectors at 1 (type ee)

/dev/sdh:

MBR Magic : aa55

Partition[0] : 4294967295 sectors at 1 (type ee)

/dev/sdi:

MBR Magic : aa55

Partition[0] : 4294967295 sectors at 1 (type ee)

/dev/sdj:

MBR Magic : aa55

Partition[0] : 4294967295 sectors at 1 (type ee)

/dev/sdk:

MBR Magic : aa55

Partition[0] : 4294967295 sectors at 1 (type ee)

mdadm: No md superblock detected on /dev/sdl.

/dev/sdm:

Magic : a92b4efc

Version : 1.2

Feature Map : 0x2

Array UUID : b1c40379:914e5d18:dddb893b:4dc5a28f

Name : media:0

Creation Time : Wed Nov 7 16:06:02 2012

Raid Level : raid6

Raid Devices : 11

Avail Dev Size : 5860271024 (2794.40 GiB 3000.46 GB)

Array Size : 26370335232 (25148.71 GiB 27003.22 GB)

Used Dev Size : 5860074496 (2794.30 GiB 3000.36 GB)

Data Offset : 262144 sectors

Super Offset : 8 sectors

Recovery Offset : 2563782648 sectors

State : clean

Device UUID : c436476d:6e6dbc43:de4e9c83:d697fbf7

Update Time : Sat Sep 13 02:03:42 2014

Checksum : db87180b - correct

Events : 2216444

Layout : left-symmetric

Chunk Size : 512K

Device Role : Active device 5

Array State : AAAAAAAAAAA ('A' == active, '.' == missing)

/dev/sdn:

Magic : a92b4efc

Version : 1.2

Feature Map : 0x0

Array UUID : b1c40379:914e5d18:dddb893b:4dc5a28f

Name : media:0

Creation Time : Wed Nov 7 16:06:02 2012

Raid Level : raid6

Raid Devices : 11

Avail Dev Size : 5860271024 (2794.40 GiB 3000.46 GB)

Array Size : 26370335232 (25148.71 GiB 27003.22 GB)

Used Dev Size : 5860074496 (2794.30 GiB 3000.36 GB)

Data Offset : 262144 sectors

Super Offset : 8 sectors

State : clean

Device UUID : 235097a3:7c8a32b8:f1c73a25:9c149239

Update Time : Sat Sep 13 02:03:42 2014

Checksum : d0b20c55 - correct

Events : 2216444

Layout : left-symmetric

Chunk Size : 512K

Device Role : Active device 10

Array State : AAAAAAAAAAA ('A' == active, '.' == missing)

/dev/sdo:

Magic : a92b4efc

Version : 1.2

Feature Map : 0x0

Array UUID : b1c40379:914e5d18:dddb893b:4dc5a28f

Name : media:0

Creation Time : Wed Nov 7 16:06:02 2012

Raid Level : raid6

Raid Devices : 11

Avail Dev Size : 5860271024 (2794.40 GiB 3000.46 GB)

Array Size : 26370335232 (25148.71 GiB 27003.22 GB)

Used Dev Size : 5860074496 (2794.30 GiB 3000.36 GB)

Data Offset : 262144 sectors

Super Offset : 8 sectors

State : clean

Device UUID : f382773b:08814775:542a5a1e:d2515115

Update Time : Sat Sep 13 02:03:42 2014

Checksum : fa85d548 - correct

Events : 2216444

Layout : left-symmetric

Chunk Size : 512K

Device Role : Active device 9

Array State : AAAAAAAAAAA ('A' == active, '.' == missing)

Before posting this question, I had run the above mdadm --examine command and found that /dev/sdl and /dev/sdo both showed "Active device 9". However, /dev/sdl was not in use by the array and had an old update time. I saved the output:

/dev/sdl:

Magic : a92b4efc

Version : 1.2

Feature Map : 0x0

Array UUID : b1c40379:914e5d18:dddb893b:4dc5a28f

Name : media:0

Creation Time : Wed Nov 7 16:06:02 2012

Raid Level : raid6

Raid Devices : 10

Avail Dev Size : 5860271024 (2794.40 GiB 3000.46 GB)

Array Size : 23440297984 (22354.41 GiB 24002.87 GB)

Used Dev Size : 5860074496 (2794.30 GiB 3000.36 GB)

Data Offset : 262144 sectors

Super Offset : 8 sectors

State : clean

Device UUID : 8e3499b6:b3baae34:af56fde9:f5d7bc87

Update Time : Fri Nov 15 15:52:20 2013

Checksum : 29fed1f5 - correct

Events : 2183610

Layout : left-symmetric

Chunk Size : 512K

Device Role : Active device 9

Array State : AAAAAAAAAA ('A' == active, '.' == missing)

Before posting this question, I issued mdadm --zero-superblock /dev/sdl, which was successful. That disk no longer shows as 'active device 9', so there is now only one 'device 9' member in the mdadm --examine output.

However, grub-install still complains of "found two disks with the index 9".

I could really use some help here. I've spent the last 12 hours googling to solve this, to no avail. Naturally, there is no backup of this data, so it's crucial to salvage the array.
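One way to hunt for any remaining stale member is to boil the --examine output down to one line per device, so that duplicate roles and old event counts stand out. This is only a sketch: `summarize_examine` is a name invented here, and it assumes the field labels look exactly like the output pasted above.

```shell
# Read `mdadm --examine` text on stdin and print one line per md member:
# device, role, event count, last update time.
# Devices without an md superblock (no "Device Role" line) are skipped.
summarize_examine() {
    awk '
        function emit() {
            if (role != "")
                print dev, "role=" role, "events=" events, "updated=" updated
            role = events = updated = ""
        }
        /^\/dev\//      { emit(); dev = $1; sub(/:$/, "", dev) }
        /Device Role :/ { role = $NF }
        /^ *Events :/   { events = $NF }
        /Update Time :/ { sub(/^.*Update Time : /, ""); updated = $0 }
        END             { emit() }
    '
}

# Possible usage (needs root; includes partitions as well as whole disks):
#   mdadm --examine /dev/sd? /dev/sd?[0-9] 2>/dev/null | summarize_examine | sort -k2,2
```

Sorting on the second column puts members that claim the same role next to each other. Including the partitions in the glob may matter here, because several active members (for example /dev/sdf2 and /dev/sdg1) are partitions, and `mdadm --examine /dev/sd?` on its own only looks at whole disks.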

EDIT TO ADD

I noticed a third 'active device 9' and zeroed out its superblock, which eliminated the "two disks with the index 9" error. Then I examined everything very carefully and found an extra disk that was an old member. I zeroed out that one as well, which eliminated the "superfluous RAID member" error.

Now grub-install doesn't report those errors.

However, it now reports segmentation fault.

# grub-install --recheck /dev/md0

Segmentation fault

So then I installed an old 750GB drive that was never part of any array, connecting it directly to a motherboard SATA port and bypassing the LSI 9201 controllers. I used parted to delete everything on it, then set up a bios_grub partition.

Model: ATA ST3750330AS (scsi)

Disk /dev/sda: 750GB

Sector size (logical/physical): 512B/512B

Partition Table: gpt

Number  Start   End     Size    File system  Name     Flags
 1      1049kB  2097kB  1049kB               primary  bios_grub

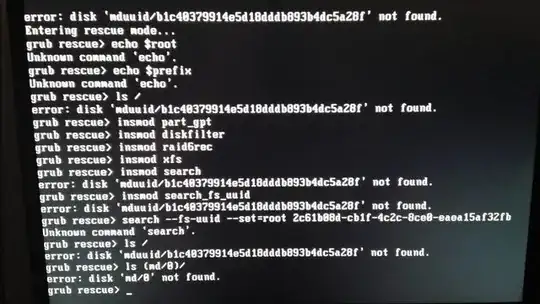

I then installed grub to that device (sda), rebooted, and selected the device in the BIOS. GRUB dropped into rescue mode, reporting "no such disk".

I am not sure what to do next and could use some help! I also want to mention that after a reboot Debian rescue is showing /dev/md/0 and not /dev/md127.

EDIT 2

I'm still working on this. To correct the Segmentation fault, I'm planning to make the partition layout identical on every physical disk.

So, it looks something like this:

mdadm --manage /dev/md0 --fail /dev/disk

mdadm --manage /dev/md0 --remove /dev/disk

dd if=/dev/zero of=/dev/disk

sgdisk -R /dev/dest /dev/source

sgdisk -G /dev/dest

mdadm --manage /dev/md0 --add /dev/disk

I am using the following partition schema:

# parted /dev/sdc print

Model: ATA WDC WD30EFRX-68A (scsi)

Disk /dev/sdc: 3001GB

Sector size (logical/physical): 512B/4096B

Partition Table: gpt

Number  Start   End     Size    File system  Name       Flags
 1      1049kB  2097kB  1049kB               bios_grub  bios_grub
 2      2097kB  3001GB  3001GB               raid       raid

This means one disk at a time I am removing each member, running the above procedure, then re-adding it and allowing it to sync. This is a large RAID 6 array, each sync takes almost a day, so this will be a long process. But I want to get everything back healthy and perfect, and I think this is my best bet to try and eliminate the segmentation fault.
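The per-disk cycle can be wrapped in a small dry-run helper so each command can be reviewed before anything destructive happens. This is a sketch under assumptions, not a tested recovery procedure: the function names, the `RUN=1` switch, the `bs=1M` on dd, and re-adding partition 2 (the raid partition in the schema above) rather than the whole disk are all choices made for illustration. The `mdadm --wait` at the end blocks until the resync finishes.

```shell
# Print each command by default; execute it only when RUN=1 is set.
run() {
    if [ "${RUN:-0}" = "1" ]; then
        "$@"
    else
        echo "+ $*"
    fi
}

# recycle_member ARRAY OLD_MEMBER DISK TEMPLATE_DISK
#   OLD_MEMBER    current member device (a partition or a whole disk)
#   DISK          the physical disk that OLD_MEMBER lives on
#   TEMPLATE_DISK a disk that already has the desired GPT layout
recycle_member() {
    array=$1; old_member=$2; disk=$3; template=$4
    run mdadm --manage "$array" --fail "$old_member"
    run mdadm --manage "$array" --remove "$old_member"
    run dd if=/dev/zero of="$disk" bs=1M        # wipes the entire disk; slow on 3TB
    run sgdisk -R "$disk" "$template"           # copy the template's table onto DISK
    run sgdisk -G "$disk"                       # give the copy fresh GUIDs
    run mdadm --manage "$array" --add "${disk}2"  # assumption: partition 2 is the raid partition
    run mdadm --wait "$array"                   # return once recovery has finished
}

# Dry run with hypothetical device names:
#   recycle_member /dev/md0 /dev/sdX1 /dev/sdX /dev/sdY
```

Since the array is RAID 6 and one slot is already rebuilding, it seems safest to let each `--wait` complete before touching the next disk, so that no more than one member is out of the array at a time.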

If anyone has advice please let me know.

EDIT 3

As I replace each disk, I am installing grub to it to make sure that works. Below are the two error messages I get on the current member disks (before they are replaced), which I believe are the reason for the Segmentation fault:

# grub-install /dev/sdl

/usr/sbin/grub-setup: warn: This GPT partition label has no BIOS Boot Partition; embedding won't be possible!.

/usr/sbin/grub-setup: error: embedding is not possible, but this is required for cross-disk install.

# grub-install /dev/sdn

/usr/sbin/grub-setup: error: unable to identify a filesystem in hd13; safety check can't be performed.

Of course, that is repeated many times over on all the old member disks in the array, but it's always one of those two errors. Once I've performed the steps listed above to remove a disk, set up new partitions, and re-add it to the array, grub does install correctly.
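To see in advance which disks will hit the "no BIOS Boot Partition" error, the parted output can be checked for a partition carrying the bios_grub flag. A minimal sketch, assuming parted's print format matches what is pasted above; `has_bios_grub` is a hypothetical helper name.

```shell
# Read `parted <disk> print` output on stdin.
# Exit status 0 if some partition row mentions bios_grub, 1 otherwise.
has_bios_grub() {
    awk '$1 ~ /^[0-9]+$/ && /bios_grub/ { found = 1 } END { exit !found }'
}

# Possible usage (needs root):
#   for d in /dev/sd?; do
#       parted -s "$d" print 2>/dev/null | has_bios_grub || echo "$d: no bios_grub partition"
#   done
```

Whole-disk members with no partition table at all (such as /dev/sdm, /dev/sdn and /dev/sdo in the --detail output) will also be reported by this loop; those appear to be the ones that give the "unable to identify a filesystem" error instead.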

Now it's just a matter of time. I'm updating this article as I go, hoping it will help others, and that in a few days when all disks are replaced I can report success!

EDIT 4

These operations were suggested by a friend. They did not work, and I still need help!

I could really use some assistance from anyone/everyone to help me get GRUB working on this box.

Anyone have other suggestions and fixes?

EDIT 5

Grub bug report: