Unfortunately it looks like we may not get to the bottom of what the application was, but to get some value from this incident, I wanted to create a reference answer. This is VMware and virtual layer management centric. A lot of admins are in segregated, and cannot get guest or storage access quickly, and this is for them :)

http://support.seagate.com/kbimg/flash/laptop/Laptop.swf seems to be the closest match to an actual application, which @MosheKatz found.

If this happened in the future, the investigation should be follows like so:

- You notice some but not all VMs have crashed. You suspect this is due to a storage issue (as it usually the most likely cause)

- First try to isolate a common factor. Are all the crashed VMs sharing the same datastore? In this case they were, but some Machines were ok, so we ruled out obvious hardware issues.

- Check all broken VMs to see if there was a common factor (time, function etc). In this case there wasn't.

Check for other unusual events. Something raised a flag here:

- The NFS storage was thin-backed (on the array level). This means that although eg. 200GB is presented to the ESXi hosts, in fact only 100GB is available. Only the array has this knowledge however. What we found was a number of VMs were paused as they had run out of disk space. We though this may have been the root cause, so our fist action was to allocate more storage on the back end, to remove this as a problem.

Once this was resolved (a simple UI change), and the paused VMs were restarting successfully, we returned to the original issue. We mounted the virtual disks from the broken VMs to a working VM, and saw that there was no partition table on the disks. We didn't have a hex viewer available, so had to assume disks were now empty.

The monitoring system alerted to a new VM which just went unresponsive. This was great, as a load of VM's had minutes before just turned un-responsive due to the disk space issue, so the fact this new VM was found quickly was a sign of good monitoring administration.

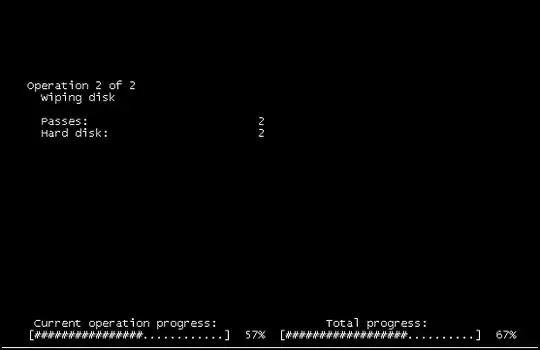

We opened a console and checked the guest, and saw the above screen-grab.

- At this stage I went to the server fault chat room to see if the program could be identified, while my storage colleague checked all virtual layer logs and events, to make sure there was no storage operation running from our area.

- What we should have done was suspend the VM, allow the suspend file to get written out, and analyse the dump to see if the running program could be identified. Suspend VM to core PDF VMware KB

At the end of the day, we knew and Virtual infrastructure tools would not have reported within a guest like the above was doing. We could see there was no ISO mounted, and no events logged against the VM.

We could see the VM wasn't "hard power cycled", only a soft restart (this is invisible to underlying infrastructure).

We knew it wasn't storage side as we had ruled that out already.

We suspected it wasn't automated as it was happening over the course of a few hours on specific VMs.

We guessed it wasn't malicious as why would the console report Disk Wipe if it was :)

So, the conclusion was a user initiated disk wipe.

That's as far as my investigation went, but I hope you found it useful.

Lessons Learnt:

- Backup and test your restores

- Make sure all users, particularity admin users, know they are working in a thin provisioned environment, and should avoid anything like write-out disk formatting (ie. write loads of 1's

- Have a good monitoring system in place.

- And a new one for me: In any large virtual environment, have a tools VM ready, even powered off, with diagnostics tools installed; performance, network storage. If this was available we could have mounted and performed a hex dump on the damaged disk to see if it was really empty, or just missing a mbr. We could have also seen if it was written out with 1's.