Let's say I'm going to build a very large 1PB zpool. I'll have a head unit with the HBAs inside of it (maybe 4 port LSI SAS cards) and I will have perhaps 7 45-drive JBODs attached to the head unit.

The basic way to do this with raidz3 would be create 21 different 15-drive raidz3 vdevs (3 15-drive vdevs for each of the 7 JBODs) and just make a pool out of all 21 of these raidz3 vdevs.

This would work just fine.

The problem here is that if you lose a single vdev for any reason, you lose the entire pool. Which means that you absolutely can never lose an entire JBOD, since that's 3 vdevs lost. BUT, in a mailing list thread, someone cryptically alluded to a way of organizing the disks so that you could indeed lose an entire JBOD. They said:

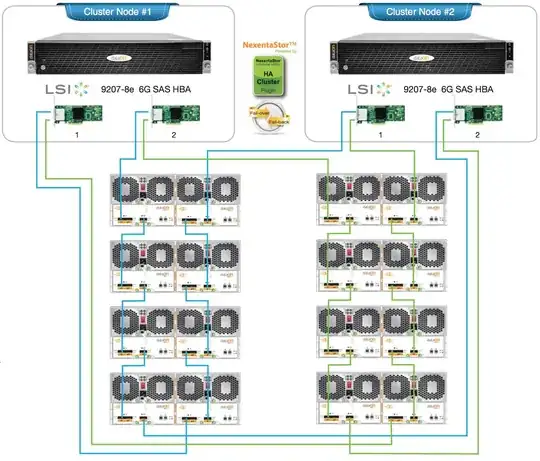

"Using a Dell R720 head unit, plus a bunch of Dell MD1200 JBODs dual pathed to a couple of LSI SAS switches ... We did triple parity and our vdev membership is set up such that we can lose up to three JBODs and still be functional (one vdev member disk per JBOD)."

... and I am not quite sure what they're saying here. I think what they are saying is that instead of having a vdev be (all contiguous 15 (or 12, or whatever) disks on one HBA), you actually have the parity drives for the vdev split into other JBODs, such that you could lose any jbod and you still have N-3 drives elsewhere to cover that vdev...

Or something...

Two questions:

Does anyone know what the recipe for this looks like

Is it complex enough that you really do need a SAS switch, and I couldn't just set it up with complex HBA<-->JBD cabling ?

Thanks.