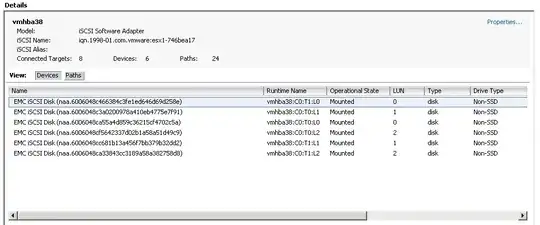

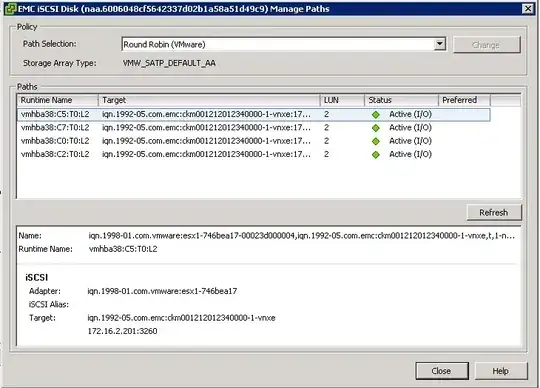

Create a SATP rule for the storage vendor EMC that sets the path policy to Round Robin and lowers the IOPS limit from the default of 1000 to 1. The rule persists across reboots and is picked up any time a new EMC iSCSI LUN is presented. For it to apply to existing EMC iSCSI LUNs, reboot the host.

esxcli storage nmp satp rule add --satp="VMW_SATP_DEFAULT_AA" --vendor="EMC" -P "VMW_PSP_RR" -O "iops=1"
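To confirm the rule actually landed, one option is to filter the SATP rule list for it. This is only a rough sketch: the helper name `has_emc_rr_rule` is mine, and it assumes the rule shows up as a single line containing both the vendor and the PSP name, which may differ between ESXi builds.

```shell
# has_emc_rr_rule (hypothetical helper): reads SATP rule list text on stdin and
# succeeds if any single line mentions both the EMC vendor and VMW_PSP_RR.
has_emc_rr_rule() {
    grep 'EMC' | grep -q 'VMW_PSP_RR'
}

# Only query the host when esxcli is actually available (i.e. on ESXi).
if command -v esxcli >/dev/null 2>&1; then
    if esxcli storage nmp satp rule list | has_emc_rr_rule; then
        echo "EMC round-robin rule found"
    else
        echo "EMC round-robin rule NOT found"
    fi
fi
```

The check is deliberately loose (two substring matches), so treat a "found" as a hint and eyeball the full rule list if anything looks off.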

I've played around with changing the IOPS value between 1 and 3 and found 1 performs best on a single VM. That said, if you have a lot of VMs and a lot of datastores, 1 may not be optimal.
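If you want to try different values on one LUN before committing to a rule, the round-robin IOPS limit can also be set per device. Below is a minimal sketch that only prints the commands for review rather than running them. The `naa.` identifier is a placeholder and `rr_iops_cmd` is a name I made up.

```shell
# rr_iops_cmd DEVICE IOPS (hypothetical helper): prints, without running, the
# per-device commands to switch a LUN to Round Robin and set its IOPS limit.
rr_iops_cmd() {
    dev=$1; iops=$2
    echo "esxcli storage nmp device set --device=$dev --psp=VMW_PSP_RR"
    echo "esxcli storage nmp psp roundrobin deviceconfig set --device=$dev --type=iops --iops=$iops"
}

# Placeholder device ID; list real ones with: esxcli storage nmp device list
for n in 1 2 3; do
    rr_iops_cmd naa.PLACEHOLDER "$n"
done
```

Paste the printed lines for the value you want to test, run your benchmark, then repeat with the next value.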

Be sure each interface on the VNXe is set to 9000 MTU. The vSwitch carrying your iSCSI interfaces should also be set to 9000 MTU, along with each VMkernel port.
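A quick way to check that jumbo frames work end to end is a don't-fragment ping from the host with a payload sized to fill a 9000-byte frame. The sketch below just computes the payload size and prints the `vmkping` command. `TARGET` is a placeholder for one of your VNXe iSCSI IPs, and `icmp_payload` is a helper name of my own.

```shell
# icmp_payload MTU: largest ICMP payload that fits in one frame of the given
# MTU, i.e. MTU minus 20 bytes of IPv4 header and 8 bytes of ICMP header.
icmp_payload() {
    echo $(( $1 - 28 ))
}

# TARGET is a placeholder for one of your VNXe iSCSI interface IPs.
TARGET="X.X.X.X"
# -d sets "don't fragment", so the ping fails if any hop has a smaller MTU.
echo "vmkping -d -s $(icmp_payload 9000) $TARGET"
```

If the ping with the large payload fails while a normal `vmkping` succeeds, something in the path is probably still at 1500 MTU.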

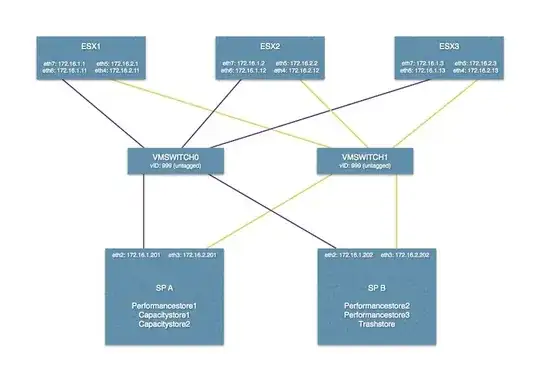

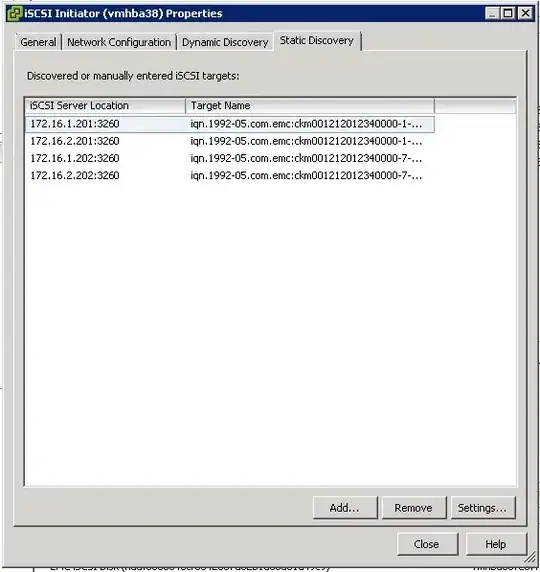

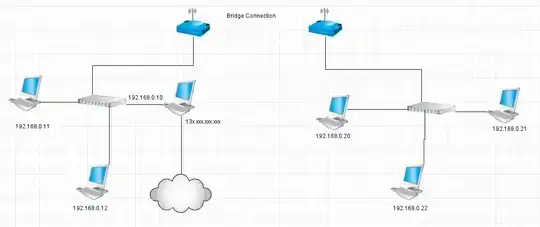

On your VNXe, create two iSCSI Servers - one for SPA and one for SPB. Associate one IP for each initially. Then view details for each iSCSI Server and add additional IPs for each active interface per SP. This will give you the round-robin performance you are looking for.

Then create at least two datastores. Associate one datastore with iSCSIServer-SPA and one with iSCSIServer-SPB so that one of your SPs isn't sitting idle.

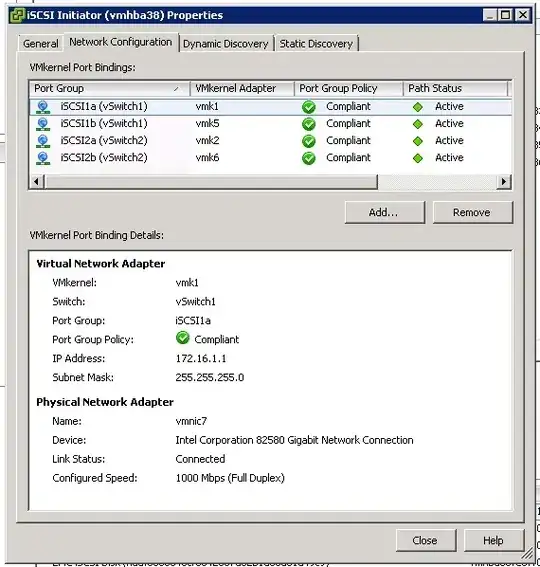

Lastly, all interfaces on the ESX side that are used for iSCSI should go on a separate vSwitch with all interfaces active. You will also want one VMkernel port for each interface on the ESX side within that vSwitch. For each VMkernel port, override the vSwitch failover order so that it has one Active adapter and all others Unused.
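Once the port groups exist, you can sanity-check the override by counting the active adapters on each one. This is a sketch under assumptions: `active_count` is a hypothetical helper, it assumes the failover policy output contains an `Active Adapters:` line with a comma-separated list, and the port group names are the ones used in the deployment script in this post.

```shell
# active_count (hypothetical helper): reads failover policy text on stdin and
# prints how many adapters are listed on the "Active Adapters:" line.
active_count() {
    sed -n 's/^ *Active Adapters: *//p' | tr ',' '\n' | grep -c '[^ ]'
}

# Only query the host when esxcli is actually available (i.e. on ESXi).
if command -v esxcli >/dev/null 2>&1; then
    for pg in iSCSI-vmnic2 iSCSI-vmnic3 iSCSI-vmnic6 iSCSI-vmnic7; do
        n=$(esxcli network vswitch standard portgroup policy failover get --portgroup-name="$pg" | active_count)
        echo "$pg: $n active adapter(s)"
    done
fi
```

Each iSCSI port group should report exactly one active adapter. Anything else means the override did not take.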

This is the deployment script I used for provisioning ESX hosts. Each host has a total of 8 interfaces: 4 for LAN and 4 for iSCSI/vMotion traffic.

- Perform the configuration below

a. # DNS

esxcli network ip dns search add --domain=mydomain.net

esxcli network ip dns server add --server=X.X.X.X

esxcli network ip dns server add --server=X.X.X.X

b. # set hostname (update accordingly)

esxcli system hostname set --host=server1 --domain=mydomain.net

c. # add uplinks to vSwitch0

esxcli network vswitch standard uplink add --uplink-name=vmnic1 --vswitch-name=vSwitch0

esxcli network vswitch standard uplink add --uplink-name=vmnic4 --vswitch-name=vSwitch0

esxcli network vswitch standard uplink add --uplink-name=vmnic5 --vswitch-name=vSwitch0

d. # create vSwitch1 for storage and set MTU to 9000

esxcli network vswitch standard add --vswitch-name=vSwitch1

esxcli network vswitch standard set --vswitch-name=vSwitch1 --mtu=9000

e. # add uplinks to vSwitch1

esxcli network vswitch standard uplink add --uplink-name=vmnic2 --vswitch-name=vSwitch1

esxcli network vswitch standard uplink add --uplink-name=vmnic3 --vswitch-name=vSwitch1

esxcli network vswitch standard uplink add --uplink-name=vmnic6 --vswitch-name=vSwitch1

esxcli network vswitch standard uplink add --uplink-name=vmnic7 --vswitch-name=vSwitch1

f. # set active NIC for vSwitch0

esxcli network vswitch standard policy failover set --vswitch-name=vSwitch0 --active-uplinks=vmnic0,vmnic1,vmnic4,vmnic5

g. # set active NIC for vSwitch1

esxcli network vswitch standard policy failover set --vswitch-name=vSwitch1 --active-uplinks=vmnic2,vmnic3,vmnic6,vmnic7

h. # create port groups and VMkernel interfaces for iSCSI (run on ESX01 only, not ESX02)

esxcli network vswitch standard portgroup add --portgroup-name=iSCSI-vmnic2 --vswitch-name=vSwitch1

esxcli network ip interface add --interface-name=vmk2 --portgroup-name=iSCSI-vmnic2 --mtu=9000

esxcli network ip interface ipv4 set --interface-name=vmk2 --ipv4=192.168.50.152 --netmask=255.255.255.0 --type=static

vim-cmd hostsvc/vmotion/vnic_set vmk2

esxcli network vswitch standard portgroup add --portgroup-name=iSCSI-vmnic3 --vswitch-name=vSwitch1

esxcli network ip interface add --interface-name=vmk3 --portgroup-name=iSCSI-vmnic3 --mtu=9000

esxcli network ip interface ipv4 set --interface-name=vmk3 --ipv4=192.168.50.153 --netmask=255.255.255.0 --type=static

vim-cmd hostsvc/vmotion/vnic_set vmk3

esxcli network vswitch standard portgroup add --portgroup-name=iSCSI-vmnic6 --vswitch-name=vSwitch1

esxcli network ip interface add --interface-name=vmk6 --portgroup-name=iSCSI-vmnic6 --mtu=9000

esxcli network ip interface ipv4 set --interface-name=vmk6 --ipv4=192.168.50.156 --netmask=255.255.255.0 --type=static

vim-cmd hostsvc/vmotion/vnic_set vmk6

esxcli network vswitch standard portgroup add --portgroup-name=iSCSI-vmnic7 --vswitch-name=vSwitch1

esxcli network ip interface add --interface-name=vmk7 --portgroup-name=iSCSI-vmnic7 --mtu=9000

esxcli network ip interface ipv4 set --interface-name=vmk7 --ipv4=192.168.50.157 --netmask=255.255.255.0 --type=static

vim-cmd hostsvc/vmotion/vnic_set vmk7

i. # create port groups and VMkernel interfaces for iSCSI (run on ESX02 only, not ESX01)

esxcli network vswitch standard portgroup add --portgroup-name=iSCSI-vmnic2 --vswitch-name=vSwitch1

esxcli network ip interface add --interface-name=vmk2 --portgroup-name=iSCSI-vmnic2 --mtu=9000

esxcli network ip interface ipv4 set --interface-name=vmk2 --ipv4=192.168.50.162 --netmask=255.255.255.0 --type=static

vim-cmd hostsvc/vmotion/vnic_set vmk2

esxcli network vswitch standard portgroup add --portgroup-name=iSCSI-vmnic3 --vswitch-name=vSwitch1

esxcli network ip interface add --interface-name=vmk3 --portgroup-name=iSCSI-vmnic3 --mtu=9000

esxcli network ip interface ipv4 set --interface-name=vmk3 --ipv4=192.168.50.163 --netmask=255.255.255.0 --type=static

vim-cmd hostsvc/vmotion/vnic_set vmk3

esxcli network vswitch standard portgroup add --portgroup-name=iSCSI-vmnic6 --vswitch-name=vSwitch1

esxcli network ip interface add --interface-name=vmk6 --portgroup-name=iSCSI-vmnic6 --mtu=9000

esxcli network ip interface ipv4 set --interface-name=vmk6 --ipv4=192.168.50.166 --netmask=255.255.255.0 --type=static

vim-cmd hostsvc/vmotion/vnic_set vmk6

esxcli network vswitch standard portgroup add --portgroup-name=iSCSI-vmnic7 --vswitch-name=vSwitch1

esxcli network ip interface add --interface-name=vmk7 --portgroup-name=iSCSI-vmnic7 --mtu=9000

esxcli network ip interface ipv4 set --interface-name=vmk7 --ipv4=192.168.50.167 --netmask=255.255.255.0 --type=static

vim-cmd hostsvc/vmotion/vnic_set vmk7

j. # set active NIC for each iSCSI vmkernel

esxcli network vswitch standard portgroup policy failover set --portgroup-name=iSCSI-vmnic2 --active-uplinks=vmnic2

esxcli network vswitch standard portgroup policy failover set --portgroup-name=iSCSI-vmnic3 --active-uplinks=vmnic3

esxcli network vswitch standard portgroup policy failover set --portgroup-name=iSCSI-vmnic6 --active-uplinks=vmnic6

esxcli network vswitch standard portgroup policy failover set --portgroup-name=iSCSI-vmnic7 --active-uplinks=vmnic7

k. # create port groups

esxcli network vswitch standard portgroup add --portgroup-name=VMNetwork1 --vswitch-name=vSwitch0

esxcli network vswitch standard portgroup add --portgroup-name=VMNetwork2 --vswitch-name=vSwitch0

esxcli network vswitch standard portgroup add --portgroup-name=VMNetwork3 --vswitch-name=vSwitch0

l. # set VLAN to VM port groups

esxcli network vswitch standard portgroup set -p VMNetwork1 --vlan-id ##

esxcli network vswitch standard portgroup set -p VMNetwork2 --vlan-id ##

esxcli network vswitch standard portgroup set -p VMNetwork3 --vlan-id ###

m. # remove default VM portgroup

esxcli network vswitch standard portgroup remove --portgroup-name="VM Network" --vswitch-name=vSwitch0

n. # enable iSCSI Software Adapter

esxcli iscsi software set --enabled=true

esxcli iscsi networkportal add -A vmhba33 -n vmk2

esxcli iscsi networkportal add -A vmhba33 -n vmk3

esxcli iscsi networkportal add -A vmhba33 -n vmk6

esxcli iscsi networkportal add -A vmhba33 -n vmk7

o. # rename local datastore

var=$(hostname)

vim-cmd hostsvc/datastore/rename datastore1 local-$var

p. # define the Native Multipathing (NMP) Storage Array Type Plugin rule for EMC VNXe 3300 and tune round-robin IOPS from 1000 to 1

esxcli storage nmp satp rule add --satp="VMW_SATP_DEFAULT_AA" --vendor="EMC" -P "VMW_PSP_RR" -O "iops=1"

q. # refresh networking

esxcli network firewall refresh

vim-cmd hostsvc/net/refresh
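Steps h through j repeat the same commands per NIC and differ between hosts only in the last IP octet, so they can be generated with a loop. The sketch below prints the commands instead of running them. `gen_iscsi_cmds` is my own name, and the "base + NIC number" addressing is just the pattern I read off the ESX02 addresses in the script, so adjust it if your numbering differs.

```shell
# gen_iscsi_cmds PREFIX BASE (hypothetical helper): prints the step h/i and
# step j commands for one host. PREFIX is the first three octets and BASE is
# a per-host offset (160 reproduces the ESX02 addresses used above).
gen_iscsi_cmds() {
    prefix=$1; base=$2
    for n in 2 3 6 7; do
        pg="iSCSI-vmnic$n"
        echo "esxcli network vswitch standard portgroup add --portgroup-name=$pg --vswitch-name=vSwitch1"
        echo "esxcli network ip interface add --interface-name=vmk$n --portgroup-name=$pg --mtu=9000"
        echo "esxcli network ip interface ipv4 set --interface-name=vmk$n --ipv4=$prefix.$((base + n)) --netmask=255.255.255.0 --type=static"
        echo "vim-cmd hostsvc/vmotion/vnic_set vmk$n"
        # One active uplink per port group, matching step j.
        echo "esxcli network vswitch standard portgroup policy failover set --portgroup-name=$pg --active-uplinks=vmnic$n"
    done
}

# Print the ESX02 variant for review.
gen_iscsi_cmds 192.168.50 160
```

Printing first and pasting second keeps a typo in the offset from renumbering a live host. Once you trust the output, piping it to `sh` on the host should work as well.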

- Configure NTP client using vSphere Client for each host

a. Configuration --> Time Configuration --> Properties --> Options --> NTP Settings --> Add --> ntp.mydomain.net --> Check "Restart NTP service to apply changes" --> OK --> wait… --> Select "Start and stop with host" --> OK --> Check "NTP Client Enabled" --> OK

Reboot Host

Proceed with EMC VNXe Storage Provisioning, return to this guide when complete

Log in to the vSphere Client for each host

Upgrade each Datastore to VMFS-5

a. Configuration --> Storage --> Highlight Datastore --> Upgrade to VMFS-5