I am building an autocomplete feature for my site, and Google's instant results are my benchmark.

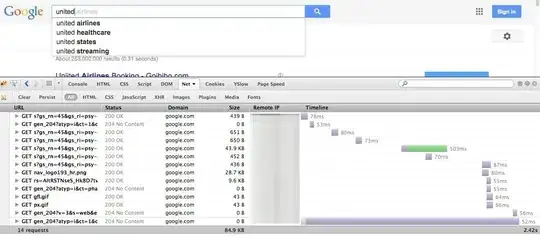

When I look at Google, the 50-60 ms response times baffle me.

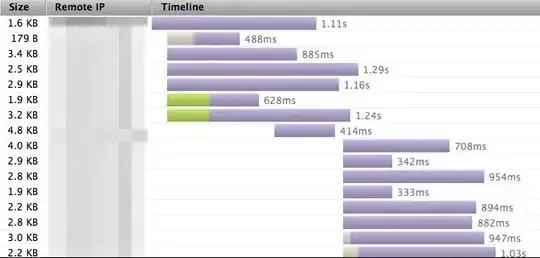

They look insane. By comparison, here is what mine looks like.

To give you an idea: my results are cached on the load balancer and served from a machine that has httpd slowstart and initcwnd fixed. My site is also behind Cloudflare.

From a server-side perspective, I don’t think I can do anything more.

Can someone help me bring this 500 ms response time down to 60 ms? What more should I be doing to achieve Google-level performance?

Edit:

People, you seem to be angry that I compared my site to Google and that the question is very generic. Sorry about that.

To rephrase: How can I bring the response time down from 500 ms to 60 ms, given that my server response time is just a fraction of a millisecond? Assume the results are served from Nginx -> Varnish with a cache hit.

Here are some answers I would give myself, assuming the response sizes remain more or less the same.

- Ensure results are HTTP-compressed.

- Ensure SPDY is enabled if you are on HTTPS.

- Ensure you have initcwnd set to 10 and disable slow start on Linux machines.

- Etc.
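
To put rough numbers on the compression and initcwnd points above, here is a minimal Python sketch. It assumes an idealized slow start (the congestion window doubles every round trip, with no packet loss), a 1460-byte MSS, and a made-up autocomplete payload. The function names and the sample data are my own illustration, not measurements from my setup.

```python
import gzip
import json
import math


def compressed_size(payload: bytes) -> int:
    """Return the size in bytes of the gzip-compressed payload."""
    return len(gzip.compress(payload))


def round_trips(size_bytes: int, initcwnd: int = 10, mss: int = 1460) -> int:
    """Estimate the round trips needed to deliver a response body.

    Idealized model (an assumption): the sender starts with `initcwnd`
    segments in flight and doubles the window every round trip, with no loss.
    """
    segments = math.ceil(size_bytes / mss)  # full-size segments needed
    cwnd = initcwnd
    rtts = 0
    while segments > 0:
        segments -= cwnd  # one window's worth is delivered per round trip
        cwnd *= 2         # slow start doubles the window
        rtts += 1
    return rtts


# Hypothetical autocomplete response, for illustration only.
suggestions = [{"text": f"example suggestion {i}", "score": i} for i in range(200)]
body = json.dumps(suggestions).encode("utf-8")
packed = compressed_size(body)

print("raw bytes:", len(body), "gzip bytes:", packed)
print("round trips, raw, initcwnd=3:", round_trips(len(body), initcwnd=3))
print("round trips, gzip, initcwnd=10:", round_trips(packed, initcwnd=10))
```

If the compressed response fits inside the initial window (roughly 14 KB with initcwnd set to 10 under these assumptions), the body should arrive in a single round trip after the request, so any remaining latency would have to come from somewhere other than response delivery.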

I don’t think I’ll end up at Google's 60 ms, but your collective expertise could easily help shave off 100 ms, and that’s a big win.