I'm trying to explain this as simply as possible while still documenting it well. This is not exclusive to this server or my current ISP: I've seen the exact same issue over the years with different ISPs and with servers at different providers (GoDaddy in the USA, iWeb and GloboTech in Canada). The only thing all of these setups have had in common is the Windows Server OS (2003 and 2008 R2). For now, though, let's look only at my current server and my current ISP.

The problem:

I get very slow transfer rates between my local workstation and my remote dedicated server. My server is on a 100 Mbps port, and my local workstation is on a 50 Mbps symmetric fiber-optic connection.

Symptoms:

Both the server and the workstation get excellent results (very close to their connection speeds) on speedtest.net against different servers and locations across the US and Mexico. If I download big files from, say, Dropbox to either my server or my workstation, I get transfer rates of roughly 10 MBps and 5 MBps respectively on a single connection, which is about what I'd expect from 100 Mbps and 50 Mbps connections.

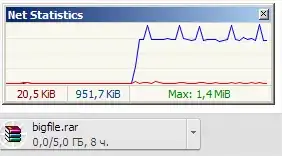

Yet if I transfer a file from my server (via HTTP or FTP) to my workstation, I don't get anywhere near the 50 Mbps (about 5 MBps) I should get. Instead I get roughly 3 Mbps (a transfer rate of about 300 KBps).
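
The rates in this question mix megabits per second (Mbps, the unit connections are sold in) and megabytes per second (MBps, the unit download dialogs usually show). Below is a minimal sketch of the conversion, assuming decimal units (1 MB = 1,000,000 bytes, 1 KB = 1,000 bytes) and ignoring protocol overhead. Real TCP transfers land somewhat below the theoretical figure, which is why dividing by 10 instead of 8 is a common rule of thumb.

```python
def mbps_to_megabytes_per_s(mbps: float) -> float:
    """Megabits/s to megabytes/s: 8 bits per byte, decimal prefixes, no overhead."""
    return mbps / 8


def kilobytes_per_s_to_mbps(kbytes_per_s: float) -> float:
    """Kilobytes/s (as a download dialog shows it) to megabits/s, decimal prefixes."""
    return kbytes_per_s * 8 / 1000


for label, mbps in [("server port", 100), ("workstation link", 50)]:
    print(f"{label}: {mbps} Mbps is at most {mbps_to_megabytes_per_s(mbps):.2f} MBps")

print(f"observed 300 KBps is about {kilobytes_per_s_to_mbps(300):.1f} Mbps")
```

Under these assumptions the observed 300 KBps works out to about 2.4 Mbps, roughly a factor of 20 below the 50 Mbps workstation link.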

I'm trying to understand why the transfer rate is that slow, and I'm not sure how to debug it. Whenever I raise a ticket about the problem with the hosting providers, they ask me for tracert output and eventually just blame some server in the middle of the route. That doesn't seem right, given what I said at the start: I've seen this exact speed problem with my servers at GoDaddy, iWeb and GloboTech, and while I was with different ISPs on very different types of Internet service. It really looks like a fixed setting somewhere on the server side.

Tests I've done:

SPEEDTEST

These are speedtest.net results from tests run on my dedicated server against several remote servers, including one in my ISP's datacenter in Mexico City:

Canada: 94.64 Mbps for download and 94.87 Mbps for upload http://www.speedtest.net/my-result/3470801975

San Jose, CA: 93.58 Mbps for download and 95.48 Mbps for upload http://www.speedtest.net/my-result/3470805341

Mexico City (server in my own ISP's datacenter): 92.99 Mbps for download and 95.39 Mbps for upload http://www.speedtest.net/my-result/3470810269

If I run those tests against the same servers from my local workstation, I get speeds close to my 50 Mbps connection too.

TRACERT

This is recent tracert output from my workstation to my dedicated server:

      1    <1 ms    <1 ms    <1 ms  192.168.7.254
      2     2 ms     1 ms     1 ms  10.69.32.1
      3     *        3 ms     2 ms  10.5.50.174
      4     3 ms     2 ms     2 ms  10.5.50.173
      5     *        5 ms     3 ms  fixed-203-69-2.iusacell.net [189.203.69.2]
      6    32 ms    32 ms    32 ms  8-1-33.ear1.Dallas1.Level3.net [4.71.220.89]
      7    33 ms    33 ms    33 ms  ae-3-80.edge5.Dallas3.Level3.net [4.69.145.145]
      8    33 ms    33 ms    33 ms  ae13.dal33.ip4.tinet.net [77.67.71.221]
      9    76 ms    76 ms   157 ms  xe-1-0-0.mtl10.ip4.tinet.net [89.149.185.41]
     10    72 ms    72 ms    72 ms  te2-2.cr2.mtl3.gtcomm.net [67.215.0.160]
     11    72 ms    72 ms    72 ms  ae2.csr2.mtl3.gtcomm.net [67.215.0.134]
     12    72 ms    72 ms    73 ms  te3-4.dist1.mtl8.gtcomm.net [67.215.0.83]
     13    72 ms    72 ms    72 ms  ns1.marveldns.com [173.209.57.82]
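
Tracert is mostly useful here for its round-trip times (RTT). The sketch below is a rough reading aid, not a diagnostic tool: it parses the hop lines above (pasted in as a string), keeps the best RTT of the three probes per hop to smooth out outliers such as hop 9's 157 ms, and ranks the increases between consecutive hops. Treating `<1 ms` as 0 is a simplifying assumption. With these numbers the two biggest steps are at hop 9 (+43 ms, into the `mtl` routers, which from their names appear to be in Montreal) and hop 6 (+29 ms, into Level3 Dallas), and the server itself answers in about 72 ms.

```python
from typing import List, Optional, Tuple

# Hop lines copied from the tracert output above.
TRACERT = """\
1 <1 ms <1 ms <1 ms 192.168.7.254
2 2 ms 1 ms 1 ms 10.69.32.1
3 * 3 ms 2 ms 10.5.50.174
4 3 ms 2 ms 2 ms 10.5.50.173
5 * 5 ms 3 ms fixed-203-69-2.iusacell.net [189.203.69.2]
6 32 ms 32 ms 32 ms 8-1-33.ear1.Dallas1.Level3.net [4.71.220.89]
7 33 ms 33 ms 33 ms ae-3-80.edge5.Dallas3.Level3.net [4.69.145.145]
8 33 ms 33 ms 33 ms ae13.dal33.ip4.tinet.net [77.67.71.221]
9 76 ms 76 ms 157 ms xe-1-0-0.mtl10.ip4.tinet.net [89.149.185.41]
10 72 ms 72 ms 72 ms te2-2.cr2.mtl3.gtcomm.net [67.215.0.160]
11 72 ms 72 ms 72 ms ae2.csr2.mtl3.gtcomm.net [67.215.0.134]
12 72 ms 72 ms 73 ms te3-4.dist1.mtl8.gtcomm.net [67.215.0.83]
13 72 ms 72 ms 72 ms ns1.marveldns.com [173.209.57.82]
"""


def parse_tracert(text: str) -> List[Tuple[int, int, str]]:
    """Return (hop number, best RTT in ms, host) for each tracert hop line."""
    hops = []
    for line in text.splitlines():
        tokens = line.split()
        if not tokens:
            continue
        number, rest = int(tokens[0]), tokens[1:]
        probes: List[Optional[int]] = []
        i = 0
        while len(probes) < 3:  # tracert sends three probes per hop
            token = rest[i]
            if token == "*":  # probe timed out
                probes.append(None)
                i += 1
            else:  # "<1" means under 1 ms; counted as 0 here
                probes.append(0 if token.startswith("<") else int(token))
                i += 2  # skip the number and the "ms" after it
        answered = [p for p in probes if p is not None]  # assumes at least one reply
        hops.append((number, min(answered), " ".join(rest[i:])))
    return hops


def rtt_jumps(hops: List[Tuple[int, int, str]]) -> List[Tuple[int, int, str]]:
    """Return (RTT increase in ms, hop number, host), largest increase first."""
    pairs = zip(hops, hops[1:])
    return sorted(((cur[1] - prev[1], cur[0], cur[2]) for prev, cur in pairs), reverse=True)


hops = parse_tracert(TRACERT)
for delta, number, host in rtt_jumps(hops)[:2]:
    print(f"hop {number}: +{delta} ms at {host}")
print(f"round trip to the server: about {hops[-1][1]} ms")
```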

IPERF

This is an iperf test with my dedicated server running as the iperf server and my workstation as the client:

    C:\> iperf -c ns1.marveldns.com
    ------------------------------------------------------------
    Client connecting to ns1.marveldns.com, TCP port 5001
    TCP window size: 64.0 KByte (default)
    ------------------------------------------------------------
    [  3] local 192.168.7.2 port 60339 connected with 173.209.57.82 port 5001
    [ ID] Interval       Transfer     Bandwidth
    [  3]  0.0-10.3 sec  5.62 MBytes  4.59 Mbits/sec
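
One way to put the single-stream number in context is the ceiling that a fixed TCP window imposes: a sender can have at most one window of unacknowledged data in flight per round trip, so single-stream throughput is at most window / RTT. The sketch below assumes that the 64.0 KByte figure iperf reports is the effective window and that the RTT is a stable ~72 ms (from the tracert output); it is a back-of-the-envelope bound that ignores window scaling and packet loss, not a measurement. It gives a ceiling of about 7.3 Mbit/s, above the 4.59 Mbit/s measured. These numbers alone do not establish that the window is the limit: the 10-stream test totals only about 7.75 Mbit/s, which a per-stream window limit by itself would not explain.

```python
def window_limited_mbps(window_bytes: int, rtt_seconds: float) -> float:
    """Upper bound on single-stream TCP throughput: one window per round trip."""
    return window_bytes * 8 / rtt_seconds / 1_000_000


def window_needed_bytes(target_mbps: float, rtt_seconds: float) -> float:
    """Bandwidth-delay product: bytes in flight needed to sustain target_mbps."""
    return target_mbps * 1_000_000 / 8 * rtt_seconds


WINDOW = 64 * 1024  # iperf: "TCP window size: 64.0 KByte (default)"
RTT = 0.072         # assumption: ~72 ms to ns1.marveldns.com, taken from tracert

print(f"ceiling with a 64 KByte window: {window_limited_mbps(WINDOW, RTT):.2f} Mbit/s")
print(f"window needed for 50 Mbit/s: {window_needed_bytes(50, RTT) / 1024:.0f} KByte")
```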

And this is a multi-stream iperf test (10 parallel streams):

    C:\> iperf -c ns1.marveldns.com -P 10
    ------------------------------------------------------------
    Client connecting to ns1.marveldns.com, TCP port 5001
    TCP window size: 64.0 KByte (default)
    ------------------------------------------------------------
    [ 12] local 192.168.7.2 port 29424 connected with 173.209.57.82 port 5001
    [ 11] local 192.168.7.2 port 29423 connected with 173.209.57.82 port 5001
    [  9] local 192.168.7.2 port 29421 connected with 173.209.57.82 port 5001
    [ 10] local 192.168.7.2 port 29422 connected with 173.209.57.82 port 5001
    [  8] local 192.168.7.2 port 29420 connected with 173.209.57.82 port 5001
    [  7] local 192.168.7.2 port 29419 connected with 173.209.57.82 port 5001
    [  4] local 192.168.7.2 port 29416 connected with 173.209.57.82 port 5001
    [  6] local 192.168.7.2 port 29418 connected with 173.209.57.82 port 5001
    [  5] local 192.168.7.2 port 29417 connected with 173.209.57.82 port 5001
    [  3] local 192.168.7.2 port 29415 connected with 173.209.57.82 port 5001
    [ ID] Interval       Transfer     Bandwidth
    [ 11]  0.0-10.9 sec  1.25 MBytes   959 Kbits/sec
    [  4]  0.0-11.0 sec  1.25 MBytes   956 Kbits/sec
    [  3]  0.0-11.4 sec   768 KBytes   551 Kbits/sec
    [  8]  0.0-11.5 sec  1.00 MBytes   730 Kbits/sec
    [  6]  0.0-11.6 sec  1.12 MBytes   813 Kbits/sec
    [ 12]  0.0-11.7 sec  1.12 MBytes   805 Kbits/sec
    [  5]  0.0-11.8 sec  1.25 MBytes   886 Kbits/sec
    [ 10]  0.0-11.9 sec  1.12 MBytes   794 Kbits/sec
    [  7]  0.0-12.0 sec  1.12 MBytes   788 Kbits/sec
    [  9]  0.0-12.0 sec  1.12 MBytes   784 Kbits/sec
    [SUM]  0.0-12.0 sec  11.1 MBytes  7.75 Mbits/sec
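
The [SUM] line can be cross-checked against the ten per-stream lines. The sketch below parses those lines (pasted in as a string), adds up the data transferred and divides by the longest interval. It assumes iperf reports bytes in binary units (1 MByte = 1,048,576 bytes) and bits in decimal units (1 Mbit = 1,000,000 bits); the numbers are consistent with that assumption. It reproduces the 11.1 MBytes total and about 7.76 Mbits/sec (the small gap from the reported 7.75 comes from rounding in the displayed figures). It also shows that simply adding the ten per-stream rates (about 8.07 Mbits/sec) overstates the total slightly, because the streams finished at different times.

```python
import re

# Per-stream result lines copied from the 10-stream iperf run above.
IPERF_STREAMS = """\
[ 11] 0.0-10.9 sec 1.25 MBytes 959 Kbits/sec
[ 4] 0.0-11.0 sec 1.25 MBytes 956 Kbits/sec
[ 3] 0.0-11.4 sec 768 KBytes 551 Kbits/sec
[ 8] 0.0-11.5 sec 1.00 MBytes 730 Kbits/sec
[ 6] 0.0-11.6 sec 1.12 MBytes 813 Kbits/sec
[ 12] 0.0-11.7 sec 1.12 MBytes 805 Kbits/sec
[ 5] 0.0-11.8 sec 1.25 MBytes 886 Kbits/sec
[ 10] 0.0-11.9 sec 1.12 MBytes 794 Kbits/sec
[ 7] 0.0-12.0 sec 1.12 MBytes 788 Kbits/sec
[ 9] 0.0-12.0 sec 1.12 MBytes 784 Kbits/sec
"""

STREAM_LINE = re.compile(
    r"\[\s*\d+\]\s+([\d.]+)-\s*([\d.]+)\s+sec\s+"
    r"([\d.]+)\s+(KBytes|MBytes)\s+([\d.]+)\s+Kbits/sec"
)
# Assumption: iperf byte units are binary (1 KByte = 1024 bytes), bit units decimal.
BYTES_PER_UNIT = {"KBytes": 1024, "MBytes": 1024 * 1024}


def summarize(text: str) -> tuple:
    """Return (total MBytes, aggregate Mbit/s over the longest interval,
    plain sum of the per-stream Mbit/s figures)."""
    total_bytes = 0.0
    longest = 0.0
    rate_sum_kbits = 0.0
    for start, end, amount, unit, rate in STREAM_LINE.findall(text):
        total_bytes += float(amount) * BYTES_PER_UNIT[unit]
        longest = max(longest, float(end) - float(start))
        rate_sum_kbits += float(rate)
    aggregate_mbits = total_bytes * 8 / longest / 1_000_000
    return total_bytes / (1024 * 1024), aggregate_mbits, rate_sum_kbits / 1000


total_mb, aggregate, rate_sum = summarize(IPERF_STREAMS)
print(f"total {total_mb:.1f} MBytes, aggregate {aggregate:.2f} Mbits/sec, "
      f"sum of per-stream rates {rate_sum:.2f} Mbits/sec")
```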

PATHPING

This is the output of a pathping command run from my workstation to my dedicated server:

    Tracing route to ns1.marveldns.com [173.209.57.82]
    over a maximum of 30 hops:
      0  ws1 [192.168.7.2]
      1  192.168.7.254
      2  10.69.32.1
      3  *  10.5.50.174
      4  10.5.50.173
      5  fixed-203-69-2.iusacell.net [189.203.69.2]
      6  8-1-33.ear1.Dallas1.Level3.net [4.71.220.89]
      7  ae-3-80.edge5.Dallas3.Level3.net [4.69.145.145]
      8  ae13.dal33.ip4.tinet.net [77.67.71.221]
      9  xe-1-0-0.mtl10.ip4.tinet.net [89.149.185.41]
     10  te2-2.cr2.mtl3.gtcomm.net [67.215.0.160]
     11  ae2.csr2.mtl3.gtcomm.net [67.215.0.134]
     12  te3-4.dist1.mtl8.gtcomm.net [67.215.0.83]
     13  ns1.marveldns.com [173.209.57.82]

    Computing statistics for 325 seconds...
                Source to Here   This Node/Link
    Hop   RTT   Lost/Sent = Pct  Lost/Sent = Pct  Address
      0                                           ws1 [192.168.7.2]
                                    0/ 100 =  0%   |
      1   0ms      0/ 100 =  0%     0/ 100 =  0%  192.168.7.254
                                    0/ 100 =  0%   |
      2   1ms      0/ 100 =  0%     0/ 100 =  0%  10.69.32.1
                                    0/ 100 =  0%   |
      3   3ms      0/ 100 =  0%     0/ 100 =  0%  10.5.50.174
                                    0/ 100 =  0%   |
      4   2ms      0/ 100 =  0%     0/ 100 =  0%  10.5.50.173
                                    0/ 100 =  0%   |
      5   4ms     20/ 100 = 20%    20/ 100 = 20%  fixed-203-69-2.iusacell.net [189.203.69.2]
                                    0/ 100 =  0%   |
      6  34ms      0/ 100 =  0%     0/ 100 =  0%  8-1-33.ear1.Dallas1.Level3.net [4.71.220.89]
                                    0/ 100 =  0%   |
      7  34ms      0/ 100 =  0%     0/ 100 =  0%  ae-3-80.edge5.Dallas3.Level3.net [4.69.145.145]
                                    0/ 100 =  0%   |
      8  33ms      0/ 100 =  0%     0/ 100 =  0%  ae13.dal33.ip4.tinet.net [77.67.71.221]
                                    0/ 100 =  0%   |
      9  79ms      0/ 100 =  0%     0/ 100 =  0%  xe-1-0-0.mtl10.ip4.tinet.net [89.149.185.41]
                                    2/ 100 =  2%   |
     10  73ms     14/ 100 = 14%    12/ 100 = 12%  te2-2.cr2.mtl3.gtcomm.net [67.215.0.160]
                                    0/ 100 =  0%   |
     11  72ms      2/ 100 =  2%     0/ 100 =  0%  ae2.csr2.mtl3.gtcomm.net [67.215.0.134]
                                    2/ 100 =  2%   |
     12  72ms     18/ 100 = 18%    14/ 100 = 14%  te3-4.dist1.mtl8.gtcomm.net [67.215.0.83]
                                    0/ 100 =  0%   |
     13  72ms      4/ 100 =  4%     0/ 100 =  0%  ns1.marveldns.com [173.209.57.82]

    Trace complete.
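
A common rule of thumb for reading pathping: the `|` rows report loss on the link between two hops, and the Source to Here figure at the final hop is the end-to-end loss. Loss shown at an intermediate router that is larger than what reaches the destination often reflects that router deprioritizing or rate-limiting replies addressed to itself, rather than dropping traffic passing through it. The sketch below applies that heuristic to the figures above (transcribed by hand). In this run, the two 2% link losses (between hops 9 and 10, and between hops 11 and 12) add up to about the 4% end-to-end loss, while the 20%, 14% and 18% figures at hops 5, 10 and 12 do not carry through. This is a heuristic reading, not a diagnosis.

```python
from typing import List, NamedTuple, Tuple


class Hop(NamedTuple):
    number: int
    address: str
    link_in_pct: int         # loss on the "|" row just above this hop (link from previous hop)
    source_to_here_pct: int  # "Source to Here" Lost/Sent percentage
    this_node_pct: int       # "This Node/Link" percentage for the router itself


# Transcribed by hand from the pathping statistics above.
HOPS = [
    Hop(1, "192.168.7.254", 0, 0, 0),
    Hop(2, "10.69.32.1", 0, 0, 0),
    Hop(3, "10.5.50.174", 0, 0, 0),
    Hop(4, "10.5.50.173", 0, 0, 0),
    Hop(5, "189.203.69.2", 0, 20, 20),
    Hop(6, "4.71.220.89", 0, 0, 0),
    Hop(7, "4.69.145.145", 0, 0, 0),
    Hop(8, "77.67.71.221", 0, 0, 0),
    Hop(9, "89.149.185.41", 0, 0, 0),
    Hop(10, "67.215.0.160", 2, 14, 12),
    Hop(11, "67.215.0.134", 0, 2, 0),
    Hop(12, "67.215.0.83", 2, 18, 14),
    Hop(13, "173.209.57.82", 0, 4, 0),
]


def read_pathping(hops: List[Hop]) -> Tuple[int, List[Tuple[int, int, int]], List[str]]:
    """Rule-of-thumb reading: end-to-end loss, lossy links, and routers whose
    own loss is higher than what reaches the destination."""
    end_to_end = hops[-1].source_to_here_pct
    lossy_links = [(h.number - 1, h.number, h.link_in_pct) for h in hops if h.link_in_pct]
    noisy_routers = [h.address for h in hops[:-1] if h.source_to_here_pct > end_to_end]
    return end_to_end, lossy_links, noisy_routers


end_to_end, lossy_links, noisy_routers = read_pathping(HOPS)
print(f"end-to-end loss: {end_to_end}%")
for before, after, pct in lossy_links:
    print(f"link {before} -> {after}: {pct}% lost")
print("loss that does not reach the destination:", noisy_routers)
```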

Things you can try for yourself

If you want to give it a try, here are a few things I've set up on the server for testing purposes:

Big file on HTTP server

I've placed a 5 GB file on my server that can be downloaded via HTTP. You can find it here: http://www.marveldns.com/transfer_test/
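
To time a single-connection HTTP download from a script instead of a browser, a minimal sketch with Python's standard library could look like the following. It reads a fixed number of bytes and reports the average rate. The actual file name inside the transfer_test directory is not given here, so `TEST_FILE_URL` is a hypothetical placeholder that has to be replaced with the real link, and the 50 MiB sample size is an arbitrary choice.

```python
import time
import urllib.request
from typing import BinaryIO, Tuple

# Hypothetical placeholder: the file name inside transfer_test/ is not given in the text.
TEST_FILE_URL = "http://www.marveldns.com/transfer_test/REPLACE_WITH_FILE_NAME"


def measure_stream(stream: BinaryIO, max_bytes: int,
                   chunk_size: int = 64 * 1024) -> Tuple[int, float]:
    """Read up to max_bytes from stream and return (bytes read, average Mbit/s)."""
    received = 0
    start = time.perf_counter()
    while received < max_bytes:
        chunk = stream.read(min(chunk_size, max_bytes - received))
        if not chunk:  # end of stream
            break
        received += len(chunk)
    elapsed = max(time.perf_counter() - start, 1e-9)  # avoid dividing by zero
    return received, received * 8 / elapsed / 1_000_000


def measure_url(url: str, max_bytes: int = 50 * 1024 * 1024) -> Tuple[int, float]:
    """Time the first max_bytes of an HTTP download over a single connection."""
    with urllib.request.urlopen(url, timeout=30) as response:
        return measure_stream(response, max_bytes)
```

Once the placeholder holds the real file link, `measure_url(TEST_FILE_URL)` returns the byte count and average rate. Timing starts only after the response headers arrive, so connection setup is not counted, and the result can be compared directly with the roughly 300 KBps seen in the browser.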

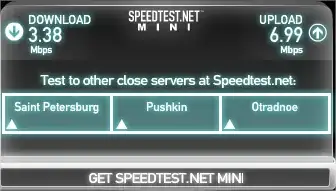

Speedtest MINI app

I've set up a "speedtest mini" test on my server. You can visit it and see what download and upload speeds it reports between my server and your machine. You can find it here: http://www.marveldns.com/speedtest/

iperf test

I'm leaving an iperf server instance running. You can run iperf in client mode against the host ns1.marveldns.com.

Finally:

As I said before, I'm trying to get help understanding the whole thing. I'm not an expert on TCP/IP or high-end networking. I honestly don't know how to use the results of tracert, iperf or pathping to resolve the problem, but I include them because I'm always asked for them when I talk about this issue.

If my question is missing something, please don't just downvote it; let me know what is wrong with it or what else I can add to get some help. Thank you.