We use KVM for virtualization, with the disk images stored as logical volumes. The volume group sits on a bunch of software RAID1 (mdadm) arrays built from Intel DC S3500 SSDs (each array is a physical volume in the volume group).

If I create a logical volume inside that volume group and use fio on the hypervisor to measure IOPS, I get about 40K IOPS for 4 KB random writes. Great. iostat shows both SSDs at about 100% utilization. If I create a virtual machine that uses the same logical volume for storage and run the same fio command inside it, I initially get 20K IOPS (great), but after 30 seconds or so it drops to 7-8K. The weird thing is that iostat now shows one SSD at 100% utilization, while the other is only at 45%.
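The exact fio command is not shown in this post, so here is only a sketch of what a 4 KB random-write job like the one described might look like. The I/O engine, queue depth, direct I/O flag and runtime are assumptions, and `/dev/vg0/fio-test` is a hypothetical logical volume path. The helper only builds and prints the command line; it does not run fio, because running it against a real device overwrites the data on it.

```python
import shlex


def fio_randwrite_cmd(target, runtime_s=120, iodepth=32):
    """Build an fio argv for a 4 KB random-write test against `target`.

    Only --rw=randwrite and --bs=4k come from the description in the post;
    every other option is an assumed, commonly used setting.
    """
    return [
        "fio",
        "--name=randwrite-4k",
        f"--filename={target}",    # hypothetical LV path, data on it is destroyed
        "--rw=randwrite",          # random writes, as described
        "--bs=4k",                 # 4 KB blocks, as described
        "--ioengine=libaio",       # assumption: Linux native async I/O
        "--direct=1",              # assumption: bypass the page cache
        f"--iodepth={iodepth}",    # assumption: queue depth
        f"--runtime={runtime_s}",  # assumption: long enough to see the drop
        "--time_based",
        "--group_reporting",
    ]


# Print the command so the same line can be pasted on the hypervisor and in the VM.
print(shlex.join(fio_randwrite_cmd("/dev/vg0/fio-test")))
```

Using one generated command line for both runs keeps the hypervisor and guest tests comparable; inside the guest the target would be the virtual disk rather than the LV path.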

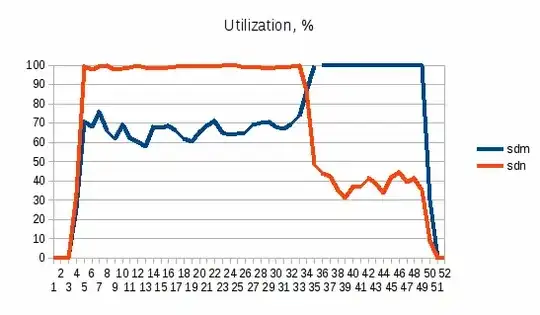

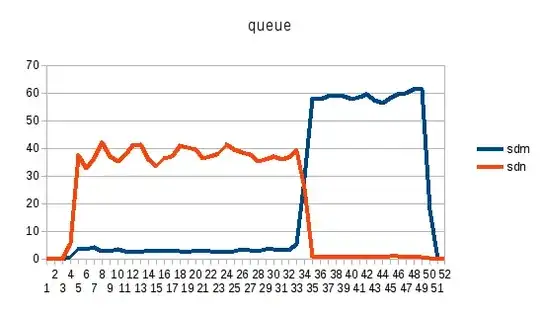

The graphs above show disk utilization over time (collected with iostat -x 2 on the hypervisor).

As you can see, at first the bottleneck seems to be sdn, but then sdm starts doing something that drags down the array, and from then on sdn is only about half busy.
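For anyone who wants to reproduce these graphs from raw numbers, here is a rough sketch that pulls the %util column for the two array members out of saved `iostat -x 2` output. The function name and the sample text are made up for illustration (the figures in `SAMPLE` are invented to mirror the pattern described above, not measured), and the column layout is assumed to match the sysstat version in use.

```python
from collections import defaultdict


def parse_util(iostat_text, devices=("sdm", "sdn")):
    """Return {device: [%util for each report]} from `iostat -x` text output."""
    series = defaultdict(list)
    util_col = None  # position of the %util column in the current device table
    for line in iostat_text.splitlines():
        fields = line.split()
        if not fields:
            util_col = None  # a blank line ends the current device table
            continue
        if fields[0].startswith("Device"):
            # Locate %util from the header instead of hard-coding its position.
            util_col = fields.index("%util") if "%util" in fields else None
            continue
        if util_col is not None and fields[0] in devices:
            series[fields[0]].append(float(fields[util_col]))
    return dict(series)


# Invented sample: two reports shaped like `iostat -x` device tables.
SAMPLE = """\
Device: rrqm/s wrqm/s r/s w/s rsec/s wsec/s avgrq-sz avgqu-sz await svctm %util
sdm 0.00 0.00 0.00 9000.00 0.00 72000.00 8.00 0.60 0.07 0.07 60.00
sdn 0.00 0.00 0.00 9000.00 0.00 72000.00 8.00 1.00 0.11 0.11 100.00

Device: rrqm/s wrqm/s r/s w/s rsec/s wsec/s avgrq-sz avgqu-sz await svctm %util
sdm 0.00 0.00 0.00 4000.00 0.00 32000.00 8.00 1.00 0.25 0.25 100.00
sdn 0.00 0.00 0.00 4000.00 0.00 32000.00 8.00 0.45 0.11 0.11 45.00
"""

for dev, values in parse_util(SAMPLE).items():
    print(dev, values)
```

The per-device lists can then be plotted against the sample interval (2 seconds here) to see when the two disks diverge.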

However, for whatever reason this only happens if the test is run inside the VM. If I run it on the hypervisor, everything is OK and the performance does not drop for at least 2 minutes. The choice of virtual disk driver (IDE or virtio) does not affect the result.

Has anyone encountered such a problem before? What is the underlying cause? How do I increase the performance?

EDIT: More information as requested (for whatever reason I didn't think of it in the first place)

OS: CentOS 6.4

Kernel: 2.6.32-358.el6.x86_64

    mdadm --detail /dev/md104
    /dev/md104:
            Version : 1.2
      Creation Time : Mon Feb 3 20:02:02 2014
         Raid Level : raid1
         Array Size : 468720320 (447.01 GiB 479.97 GB)
      Used Dev Size : 468720320 (447.01 GiB 479.97 GB)
       Raid Devices : 2
      Total Devices : 2
        Persistence : Superblock is persistent
        Update Time : Wed Feb 26 14:50:36 2014
              State : clean
     Active Devices : 2
    Working Devices : 2
     Failed Devices : 0
      Spare Devices : 0
               Name : xxx:104 (local to host xxx)
               UUID : aaaaaaaa:bbbbbbbb:cccccccc:dddddddd
             Events : 28

        Number   Major   Minor   RaidDevice   State
           0       8      192        0        active sync   /dev/sdm
           1       8      208        1        active sync   /dev/sdn

I have blanked out the UUID and the host name.