EDIT 2: My application benefits from hyper-threading

A. Yes, I know what the technology is and what it does.

B. Yes, I know the difference between a physical core and a logical one.

C. Yes, turning HT off made the render run slower. This is expected!

D. No, I am not overprovisioning when I assign all the logical (yes, logical) cores to one VM. If you read the white papers from VMware, you will know that the scheduler generates a topology map of the physical hardware and uses that map when allocating resources. Assigning ALL the logical cores to one VM produces 16 logical processors in Windows, the same as if I had installed Windows directly on the physical hardware. And lo and behold, after 5 tests this arrangement has produced the fastest (and most efficient) render times.

F. The application in question is 3ds Max 2014 using Backburner and the mental ray renderer.

TL;DR: I (sometimes) want to run one VM on vSphere with as much CPU efficiency as possible. How?

I'm hoping to use VMware's ESXi / vSphere hypervisor in a bit of a non-standard way.

Normally people use a hypervisor to run multiple VMs simultaneously on one system. I want to use the hypervisor to let me quickly switch between applications, but only ever really run one VM / app at a time.

It's actually a pet project: I have a 5-node render farm (each node has 2x Intel Xeon E5540) that for the most part stays off (when I'm not rendering, I have no need to run these machines). That seems like a waste of valuable compute time, so I was hoping to use the nodes for other things when not rendering (kind of a general-purpose 40 core / 80 thread compute cluster).
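For anyone checking the numbers, here is a quick sketch of where the 16 logical processors per node and the 40 core / 80 thread totals come from. It assumes the E5540 is a quad-core part with Hyper-Threading (2 threads per core); the constant and function names are just for illustration.

```python
# Cluster layout from the question: 5 nodes, each with 2x Intel Xeon E5540.
# Assumption: each E5540 has 4 physical cores and 2 hardware threads per core.
NODES = 5
SOCKETS_PER_NODE = 2
CORES_PER_SOCKET = 4
THREADS_PER_CORE = 2


def node_logical_cpus():
    """Logical processors a single node exposes with Hyper-Threading on."""
    return SOCKETS_PER_NODE * CORES_PER_SOCKET * THREADS_PER_CORE


def cluster_totals():
    """Return (physical cores, hardware threads) across all nodes."""
    cores = NODES * SOCKETS_PER_NODE * CORES_PER_SOCKET
    threads = cores * THREADS_PER_CORE
    return cores, threads


print(node_logical_cpus())  # logical CPUs per node
print(cluster_totals())     # (cores, threads) for the whole farm
```

The per-node figure is the number of vCPUs a single render VM would get if it is assigned every logical core on its host.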

I was hoping that vSphere could let me spin up render node VMs when rendering and other things when not. The problem is that I really need high CPU efficiency while the render VM is running.

I'm using a render job as a benchmark and getting about 88% of the speed in the VM that I get on a non-VM setup. I was hoping for closer to 95%. Any ideas how I could get there?
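To be explicit about how I'm measuring this: "speed" here is the bare-metal render time divided by the VM render time for the same job. A minimal sketch of that calculation follows; the function names are illustrative and the times in the example call are placeholders, not my actual measurements.

```python
def vm_efficiency(bare_metal_seconds, vm_seconds):
    """Relative speed of the VM: 1.0 means it matches bare metal."""
    if bare_metal_seconds <= 0 or vm_seconds <= 0:
        raise ValueError("render times must be positive")
    return bare_metal_seconds / vm_seconds


def max_vm_seconds(bare_metal_seconds, target_efficiency):
    """Longest VM render time that still meets the target efficiency."""
    if not 0 < target_efficiency <= 1:
        raise ValueError("target efficiency must be in (0, 1]")
    return bare_metal_seconds / target_efficiency


# Placeholder times only: a job taking 880 s on bare metal and 1000 s in the VM.
print(vm_efficiency(880.0, 1000.0))
# VM time that job would need to hit the 95% target.
print(max_vm_seconds(880.0, 0.95))
```

So going from 88% to 95% means cutting the VM render time by roughly 7%, not making the VM 7 percentage points "busier".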

EDIT: Details:

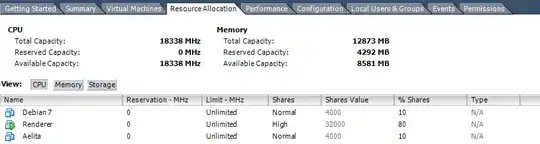

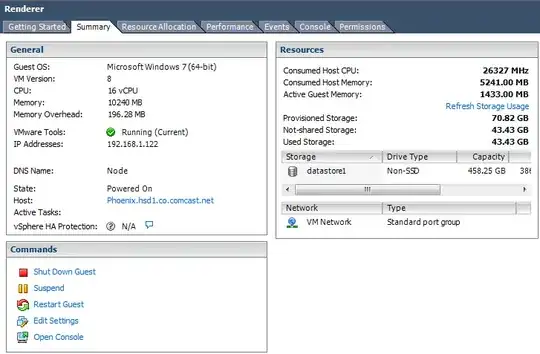

Resources being used by the render VM. I don't fully understand why this bar is not full:

Resource settings for that VM:

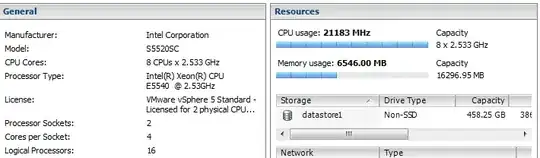

Even though the VM doesn't show as using 100% of the resources, the host does:

I don't entirely understand the % shares here. Is this what applies when all these VMs are on? Also, I didn't configure the other VMs to reserve 10%:

Finally, the host does show as being fully utilized, although (not shown here) the MHz utilization is lower, i.e. not 100%:

VM Config:

I understand this is an interesting case, but nevertheless I feel the question is valid and may help others in a similar situation down the line (although I admit this case is quite specific).