Summary: My problem is that I cannot use the QNAP NFS server as an NFS datastore from my ESXi hosts, even though the hosts can ping it. I'm using a vDS with LACP uplinks for all my network traffic (including NFS), with a separate subnet for each vmkernel adapter.

Setup: I'm evaluating vSphere and have two vSphere ESXi 5.5 hosts (node1 and node2), each with four NICs. On each host I've teamed all four NICs using LACP/802.3ad with my switch, and then created a distributed switch across the two hosts with each host's LAG as its uplink. All my networking goes through the distributed switch, since I ultimately want to take advantage of DRS and the redundancy. I have a domain controller VM ("Central") and a vCenter VM ("vCenter") running on node1 (on node1's local datastore), and both hosts are attached to that vCenter instance. Both hosts are in a vCenter datacenter and in a cluster with HA and DRS currently disabled. I have a QNAP TS-669 Pro (version 4.0.3; the TS-x69 series is on the VMware storage HCL) which I want to use as the NFS server for my NFS datastore. It has two NICs teamed together using 802.3ad with my switch.

vmkernel.log: The error from the host's vmkernel.log is not very useful:

```
NFS: 157: Command: (mount) Server: (10.1.2.100) IP: (10.1.2.100) Path: (/VM) Label (datastoreNAS) Options: (None)
cpu9:67402)StorageApdHandler: 698: APD Handle 509bc29f-13556457 Created with lock[StorageApd0x411121]
cpu10:67402)StorageApdHandler: 745: Freeing APD Handle [509bc29f-13556457]
cpu10:67402)StorageApdHandler: 808: APD Handle freed!
cpu10:67402)NFS: 168: NFS mount 10.1.2.100:/VM failed: Unable to connect to NFS server.
```

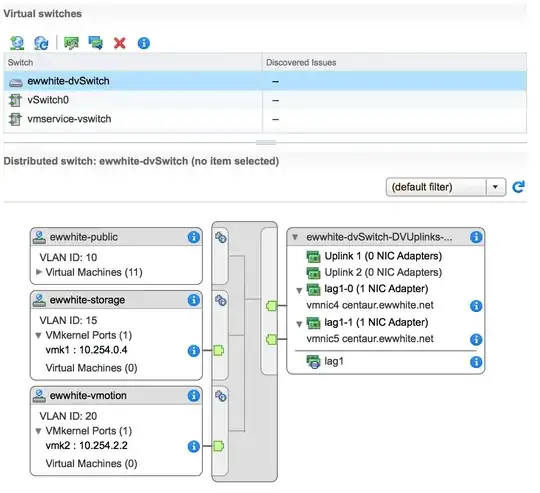

Network Setup: Here is my distributed switch setup (JPG). These are my networks:

- 10.1.1.0/24 VM Management (VLAN 11)

- 10.1.2.0/24 Storage Network (NFS, VLAN 12)

- 10.1.3.0/24 VM vMotion (VLAN 13)

- 10.1.4.0/24 VM Fault Tolerance (VLAN 14)

- 10.2.0.0/24 VM Network (VLAN 20)

vSphere addresses

- 10.1.1.1 node1 Management

- 10.1.1.2 node2 Management

- 10.1.2.1 node1 vmkernel (For NFS)

- 10.1.2.2 node2 vmkernel (For NFS)

- etc.

Other addresses

- 10.1.2.100 QNAP TS-669 (NFS Server)

- 10.2.0.1 Domain Controller (VM on node1)

- 10.2.0.2 vCenter (VM on node1)
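
The address plan above can be sanity-checked with Python's standard `ipaddress` module. This is a minimal sketch: the two dictionaries just restate the subnets and addresses listed here, and `subnet_of` and `same_subnet` are hypothetical helper names. It confirms that both NFS vmkernel addresses and the QNAP fall in 10.1.2.0/24, so vmk1 should reach the NFS server without any routing.

```python
import ipaddress

# Subnet plan restated from the question: CIDR -> VLAN and purpose.
SUBNETS = {
    "10.1.1.0/24": "VLAN 11 VM Management",
    "10.1.2.0/24": "VLAN 12 Storage (NFS)",
    "10.1.3.0/24": "VLAN 13 vMotion",
    "10.1.4.0/24": "VLAN 14 Fault Tolerance",
    "10.2.0.0/24": "VLAN 20 VM Network",
}

# Addresses restated from the question.
ADDRESSES = {
    "node1 Management": "10.1.1.1",
    "node2 Management": "10.1.1.2",
    "node1 NFS vmkernel": "10.1.2.1",
    "node2 NFS vmkernel": "10.1.2.2",
    "QNAP TS-669": "10.1.2.100",
    "Domain Controller": "10.2.0.1",
    "vCenter": "10.2.0.2",
}


def subnet_of(addr):
    """Return the label of the planned subnet that contains addr, or None."""
    ip = ipaddress.ip_address(addr)
    for cidr, label in SUBNETS.items():
        if ip in ipaddress.ip_network(cidr):
            return label
    return None


def same_subnet(a, b):
    """True if both addresses fall in the same planned subnet (no router hop)."""
    label = subnet_of(a)
    return label is not None and label == subnet_of(b)


if __name__ == "__main__":
    for name, addr in ADDRESSES.items():
        print(f"{name:<20} {addr:<12} {subnet_of(addr)}")
```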

I'm using a Cisco SRW2024P Layer-2 switch (jumbo frames enabled) with the following setup:

- LACP LAG1 for node1 (ports 1 through 4) set up as a VLAN trunk for VLANs 11-14,20

- LACP LAG2 for my router (ports 5 through 8) set up as a VLAN trunk for VLANs 11-14,20

- LACP LAG3 for node2 (ports 9 through 12) set up as a VLAN trunk for VLANs 11-14,20

- LACP LAG4 for the QNAP (ports 23 and 24) set up to accept untagged traffic into VLAN 12

All the subnets are routable to one another, although connections from vmk1 to the NFS server shouldn't need routing. All other traffic (vSphere Web Client, RDP, etc.) goes through this setup fine. I tested the QNAP NFS server beforehand using ESXi host VMs on top of a VMware Workstation setup with a dedicated physical NIC, and it had no problems.

The ACL on the NFS Server share is permissive and allows all subnet ranges full access to the share.

I can ping the QNAP from node1's vmk1, the adapter that should be used for NFS:

```
~ # vmkping -I vmk1 10.1.2.100
PING 10.1.2.100 (10.1.2.100): 56 data bytes
64 bytes from 10.1.2.100: icmp_seq=0 ttl=64 time=0.371 ms
64 bytes from 10.1.2.100: icmp_seq=1 ttl=64 time=0.161 ms
64 bytes from 10.1.2.100: icmp_seq=2 ttl=64 time=0.241 ms
```

Netcat does not throw an error:

```
~ # nc -z 10.1.2.100 2049
Connection to 10.1.2.100 2049 port [tcp/nfs] succeeded!
```
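
The `nc -z` check above covers only TCP port 2049. A rough Python equivalent that can be run from any machine with Python 3 on the storage network is sketched here, using only the standard `socket` module. `tcp_port_open`, `probe` and `NFS_PORTS` are hypothetical names, and including port 111 (rpcbind/portmapper, which an NFSv3 client such as the one in ESXi 5.5 normally contacts to locate the mount service) is an assumption added for illustration, not something tested in the question.

```python
import socket

# Illustrative port list (an assumption): 2049 is nfsd, as tested with nc above;
# 111 is rpcbind/portmapper, typically queried by NFSv3 clients during a mount.
NFS_PORTS = {2049: "nfs", 111: "rpcbind"}


def tcp_port_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds, like `nc -z`."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # connection refused, timed out, host unreachable, ...
        return False


def probe(host, ports=NFS_PORTS, timeout=3.0):
    """Map each port in `ports` to whether it accepted a TCP connection."""
    return {port: tcp_port_open(host, port, timeout) for port in ports}
```

For example, `probe("10.1.2.100")` returns a dict mapping 2049 and 111 to `True` or `False`. A plain TCP connect says nothing about whether the NFS export itself is usable; it only rules out basic reachability problems on each port.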

The routing table of node1:

```
~ # esxcfg-route -l
VMkernel Routes:
Network          Netmask          Gateway          Interface
10.1.1.0         255.255.255.0    Local Subnet     vmk0
10.1.2.0         255.255.255.0    Local Subnet     vmk1
10.1.3.0         255.255.255.0    Local Subnet     vmk2
10.1.4.0         255.255.255.0    Local Subnet     vmk3
default          0.0.0.0          10.1.1.254       vmk0
```
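
The VMkernel chooses the outgoing interface from this table the way a conventional IP stack does, by longest-prefix match. A small sketch of that lookup over the routes listed above (a simplified model, not VMware's code; `ROUTES` and `select_route` are hypothetical names) shows that traffic to 10.1.2.100 should leave through vmk1 on the directly connected network, while traffic to the 10.2.0.0/24 VM Network would go via the default gateway on vmk0.

```python
import ipaddress

# node1's VMkernel routes as listed by `esxcfg-route -l` above:
# (network, netmask, gateway or None for "Local Subnet", interface)
ROUTES = [
    ("10.1.1.0", "255.255.255.0", None, "vmk0"),
    ("10.1.2.0", "255.255.255.0", None, "vmk1"),
    ("10.1.3.0", "255.255.255.0", None, "vmk2"),
    ("10.1.4.0", "255.255.255.0", None, "vmk3"),
    ("0.0.0.0", "0.0.0.0", "10.1.1.254", "vmk0"),  # the "default" route
]


def select_route(dest):
    """Longest-prefix match over ROUTES; returns (interface, gateway or None)."""
    ip = ipaddress.ip_address(dest)
    best = None
    for net, mask, gateway, iface in ROUTES:
        # ip_network accepts "address/netmask"; a 0.0.0.0 mask is read as /0.
        network = ipaddress.ip_network(f"{net}/{mask}")
        if ip in network and (best is None or network.prefixlen > best[0].prefixlen):
            best = (network, gateway, iface)
    if best is None:
        raise LookupError(f"no route to {dest}")
    _, gateway, iface = best
    return iface, gateway


if __name__ == "__main__":
    for target in ("10.1.2.100", "10.2.0.1"):
        print(target, "->", select_route(target))
```

Running it prints `10.1.2.100 -> ('vmk1', None)` and `10.2.0.1 -> ('vmk0', '10.1.1.254')`.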

VMkernel NIC info:

```
~ # esxcfg-vmknic -l
Interface  Port Group/DVPort  IP Family  IP Address                Netmask        Broadcast   MAC Address        MTU   TSO MSS  Enabled  Type
vmk0       133                IPv4       10.1.1.1                  255.255.255.0  10.1.1.255  00:50:56:66:8e:5f  1500  65535    true     STATIC
vmk0       133                IPv6       fe80::250:56ff:fe66:8e5f  64                         00:50:56:66:8e:5f  1500  65535    true     STATIC, PREFERRED
vmk1       164                IPv4       10.1.2.1                  255.255.255.0  10.1.2.255  00:50:56:68:f5:1f  1500  65535    true     STATIC
vmk1       164                IPv6       fe80::250:56ff:fe68:f51f  64                         00:50:56:68:f5:1f  1500  65535    true     STATIC, PREFERRED
vmk2       196                IPv4       10.1.3.1                  255.255.255.0  10.1.3.255  00:50:56:66:18:95  1500  65535    true     STATIC
vmk2       196                IPv6       fe80::250:56ff:fe66:1895  64                         00:50:56:66:18:95  1500  65535    true     STATIC, PREFERRED
vmk3       228                IPv4       10.1.4.1                  255.255.255.0  10.1.4.255  00:50:56:72:e6:ca  1500  65535    true     STATIC
vmk3       228                IPv6       fe80::250:56ff:fe72:e6ca  64                         00:50:56:72:e6:ca  1500  65535    true     STATIC, PREFERRED
```
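
Because jumbo frames only work when the MTU matches end to end, it can help to pull the per-interface values out of this listing rather than reading them by eye. A minimal Python sketch, assuming the column layout shown above and a port-group column without spaces (true for the DVPort numbers here); `VMKNIC_LISTING`, `parse_ipv4_vmknics` and `mtu_mismatches` are hypothetical names, and the expected MTUs passed in are whatever each vmknic is meant to use.

```python
# IPv4 rows copied from the `esxcfg-vmknic -l` output above (IPv6 rows omitted).
VMKNIC_LISTING = """\
vmk0 133 IPv4 10.1.1.1 255.255.255.0 10.1.1.255 00:50:56:66:8e:5f 1500 65535 true STATIC
vmk1 164 IPv4 10.1.2.1 255.255.255.0 10.1.2.255 00:50:56:68:f5:1f 1500 65535 true STATIC
vmk2 196 IPv4 10.1.3.1 255.255.255.0 10.1.3.255 00:50:56:66:18:95 1500 65535 true STATIC
vmk3 228 IPv4 10.1.4.1 255.255.255.0 10.1.4.255 00:50:56:72:e6:ca 1500 65535 true STATIC
"""


def parse_ipv4_vmknics(text):
    """Map interface -> (IPv4 address, MTU) from whitespace-separated rows.

    Assumes the header's column order: Interface, Port Group/DVPort, IP Family,
    IP Address, Netmask, Broadcast, MAC Address, MTU, ...
    """
    vmknics = {}
    for line in text.splitlines():
        fields = line.split()
        if len(fields) >= 8 and fields[2] == "IPv4":
            vmknics[fields[0]] = (fields[3], int(fields[7]))
    return vmknics


def mtu_mismatches(vmknics, expected):
    """Return {interface: (actual MTU or None, expected MTU)} where they differ."""
    mismatches = {}
    for name, want in expected.items():
        actual = vmknics.get(name, (None, None))[1]
        if actual != want:
            mismatches[name] = (actual, want)
    return mismatches


if __name__ == "__main__":
    nics = parse_ipv4_vmknics(VMKNIC_LISTING)
    print(mtu_mismatches(nics, {"vmk1": 9000}))
```

With the rows above, `mtu_mismatches(nics, {"vmk1": 9000})` returns `{'vmk1': (1500, 9000)}`, i.e. this particular listing reports 1500 for vmk1.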

Things I've tried/checked:

- I'm not using DNS names to connect to the NFS server.

- Checked the MTU: it is set to 9000 on vmk1, the dvSwitch, the Cisco switch and the QNAP.

- Moved the QNAP onto VLAN 11 (VM Management, vmk0) and gave it an appropriate address; I still had the same issue. I changed it back afterwards, of course.

- Tried adding the NAS datastore from the vSphere Client (connected to vCenter or directly to the host), the vSphere Web Client and the host's ESXi Shell. All of them resulted in the same problem.

- Tried the paths "VM", "/VM" and "/share/VM", even though the mount never gets as far as connecting to the server.

- Plugged a Linux system (10.1.2.123) into a switch port configured for VLAN 12 and mounted the NFS share 10.1.2.100:/VM; it worked and I had read-write access to it.

- Tried disabling the firewall on the ESXi host:

  ```
  esxcli network firewall set --enabled false
  ```
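
The 56-byte `vmkping` earlier only shows that small packets get through. To exercise a 9000-byte MTU end to end, the usual check is a don't-fragment ping whose payload fills the whole packet: the MTU minus the 20-byte IPv4 header and the 8-byte ICMP echo header. The helpers below (`max_unfragmented_payload`, `jumbo_vmkping_command`, both hypothetical) only compute that size and assemble the command line; `-I`, `-d` (set don't-fragment) and `-s` (payload size) are standard `vmkping` options on ESXi 5.x, but it is worth confirming them on the build in use.

```python
IPV4_HEADER_BYTES = 20  # IPv4 header without options
ICMP_HEADER_BYTES = 8   # ICMP echo request header


def max_unfragmented_payload(mtu):
    """Largest ICMP echo payload that fits in a single IPv4 packet of `mtu` bytes."""
    overhead = IPV4_HEADER_BYTES + ICMP_HEADER_BYTES
    if mtu <= overhead:
        raise ValueError(f"MTU {mtu} leaves no room for an ICMP payload")
    return mtu - overhead


def jumbo_vmkping_command(vmknic, target, mtu=9000):
    """Build a don't-fragment vmkping command line whose packets fill the MTU."""
    size = max_unfragmented_payload(mtu)
    return f"vmkping -I {vmknic} -d -s {size} {target}"


if __name__ == "__main__":
    print(jumbo_vmkping_command("vmk1", "10.1.2.100"))
```

This prints `vmkping -I vmk1 -d -s 8972 10.1.2.100`. If that ping fails while the default-size ping succeeds, some hop on the path is not passing 9000-byte frames.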

I'm out of ideas on what to try next. What I'm doing differently from my VMware Workstation setup is using LACP with a physical switch and a distributed virtual switch between the two hosts. I'm guessing the vDS is the source of my troubles, but I don't know how to fix this problem without eliminating it.