My question is more-or-less a newer version of this 3 year old question: Small Business Network Switches/General network configuration

Summary

Everything is Gigabit and we don't have any real complaints about network performance. My real question is: for the 4 or 5 of us here, is there a next step that makes sense?

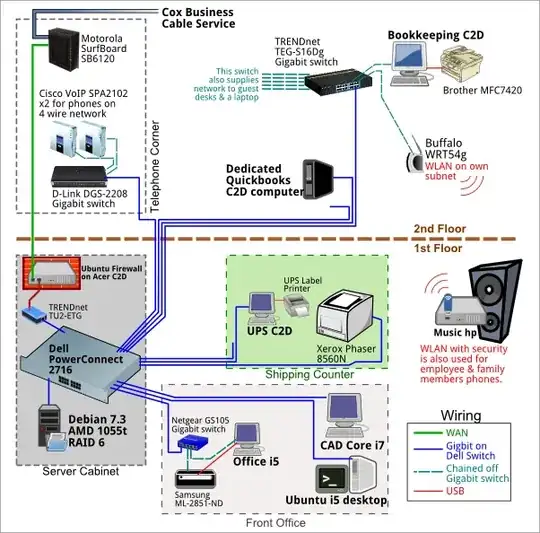

Network information

My small business's informal network centers around one central 16 port Dell switch and our file server in a star layout. Internal server is a Debian samba sharing a RocketRaid hardware RAID6 on UPS with shutdown tested and working. Whenever I do dev work, I use the file server for http or MySql apps. We have our domain email hosted by Google Apps, which we've been using since it was Beta and we love it. I like what I read on these two QAs: guidelines for wiring an office and Best Pratices for a Network File Share?.

It wasn't until I started preparing for this question two days ago that I even realized a person can log in to the Dell switch to manage it. (facepalm)

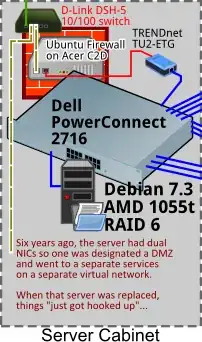

Oh and it gets better!! I wandered around the business taking pictures of everything with an ethernet cable on it. Turns out I had a some legacy haunting me! 6+ years ago, we had a server with 2 NICs & my IT helping friend talked me into putting a DMZ on the 2nd port. The switch for that trick easily is from the early 1990s: I remember getting it from the trash at a company that got bought by AOL in 1997! It's so old, you can't google it. So thanks to my recent server reinstall and reading around serverfault, I got rid of that sadness. Edit to add: haven't noticed difference at work yet, but scp files from work to home (initiated from home) is remarkably faster now with that 10/100 switch gone!

With preparation and luck we have gotten through a few failures. Right now, I'm comfortable with everything but suspect I'll be replacing the firewall and switch soon...

Questions:

Are there any easy ways we can get performance increases?

Would it make sense to get a switch with a fiber port and then put in a fiber NIC on the server? From the reading I've been doing, it seems LANs are holding steady with Gigabit and nothing really new has happened since then for the small folks.

I haven't even Google'd how to log into the Dell switch, so I'm assuming it's unmanaged. I was going to visit the switch for a webserver so I checked the DHCP server (on firewall box) and the switch doesn't show up among the clients. I've only scratched the surface of reading up on all that: should the switch and RAID server be using large packets or something?

Network load is normally pretty mellow until the Office computer is working on our videos. Right now, they cannot be served live from the RAID and are copied back and forth. I do all my CAD from the RAID but it uses a local scratch pad and saves of 40M+ take 10 or so seconds.

Illustrations were made using Inkscape. I tried a few of the network diagramming tools and it was easier to just draw everything by hand. SVGs available upon request

Edit for Update

I was working in the rack, moved the firewall Acer computer, & it's HDD died. Disappeared from bios, so is probably the controller. Yes, literally, touching the computer to move it from the back shelf to the front shelf killed it. For now, the Buffalo WHR-HP-G54 got reconfigured & pressed into firewall duty until the already ordered dual-NIC new firewall box shows up. SCPing from home seems a tad slower than the old firewall with the USB->eth adapter. I Google'd and found out that it's WAN port is 10/100.

The observations made:

1) The legacy 10/100 link from firewall Acer to the cable modem is slower than when the link from firewall to modem is Gigabit.

2) The Buffalo WHR-HP-G54 WAN port's 10/100 link to modem is slower than when all Gigabit.

3) The TU2-ETG's USB2.0 link is faster than 10/100.

4) Cox Biz cable upload is faster than 10/100.

Once the new switch shows up (with profiling), I'm going to review Evan's and Chris's answers, attempt Evan's suggested tests, and then choose between them for "The Accept".

Final result

Getting to "the answer" of this question has been an amazing three week journey. Thank you both Chris and Evan: it was tough to choose whose answer to accept.

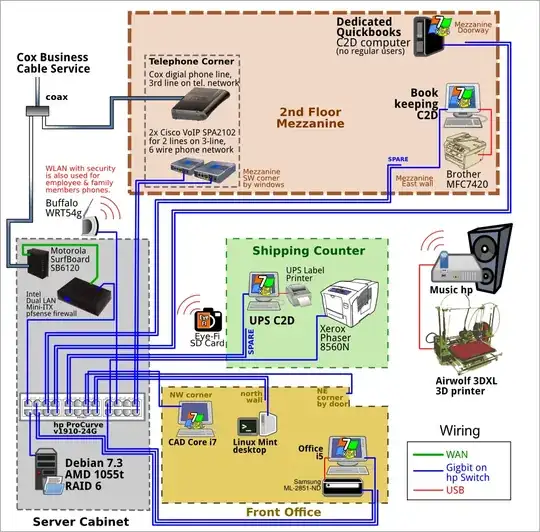

Seeing how inexpensive a better switch cost, I bought a hp ProCurve V1910-24G. It cost less than the lesser Dell did 5 years ago. That it is shiney is only half why I bought it; the Dell is at least 6 years old and while still performing well, I'm going to have to set a rule about retiring hardware that's more than five years old.

Having said that, the ProCurve has spawned a new question (I would appreciate some thought about the functions of what I mention here) but I'm super happy to have eliminated all of the desktop switches. That sounds like another rule, maybe those go in the gun safe?

Below is the revised drawing. Of note is that I moved the Cox Cable coax "T" and the cable modem now resides in the rack. The two CAT5 cables going to the telco corner are now dedicated to feeding the Cisco VoIP boxes, so the telco corner now only serves telephones. Also, the cabling in the drawing reflects the physical reality of the infrastructure, including switch ports. Most cables are paired up and the newest "drop" I created to get rid of the office switch has the three CAT6 cables going to it.

For the first time ever, the cabling associated with my switch and the firewall/router is something I'm happy with! Bottom left is the cable modem, top right is the pfsense mini-ITX firewall/router:

The switch is set back some: I actually didn't like it mounted "flush" in the rack, so I fabbed up some adapters to set the switch back about 10" from the front of the rack. The hp/Compaq server cabinet has the additional rails so I took advantage of those. The cabinet has freedom to roll forward enough to allow access to the back doors. Wifi AP is resting on top of the cabinet as is excess coiled up network cable.

The yellow ethernet cabling is CAT6 I bought from StarTech on close-out for $7 for 75 foot cross-over cable & has the plenum. That was such a bargain, I bought a couple dozen and am very good at fitting jacks. (+ have the T568B wire color order memorized)

This setup is noticeably faster than before! When I ssh -X from home and run a browser window from the server at work is faster than I remember 14.4k modems, so seems about 3x as fast as when I'd log in and need the web as surfed from within the LAN. At work, opening files is as fast as if the drive wasn't networked. If I already have photoshop cs6 running, opening a 6M jpeg from the raid is instantaneous.

Additionally, I realized the cable from the raid to the switch was one of those CAT5 cables that comes with wireless routers, etc, so I replaced it with a 2' CAT6 cable and could tell a before / after performance boost with my photoshop experiment. Now there's all CAT6 from cable modem to firewall to switch to server. My desk has CAT5 from the switch for now, but I'll upgrade whenever I open up a wall.

Once settled in and I'm caught up with regular work, I'll try my hand at benchmarking the network's performance. Right now, I'm pretty sure it can't get much better than applying the best of practice advice of getting rid of switches and unnecessary hardware. The hardware raid controller is 6+ years old, so getting a new one is on the horizon. Once that happens, this one will fall back to archival duty.