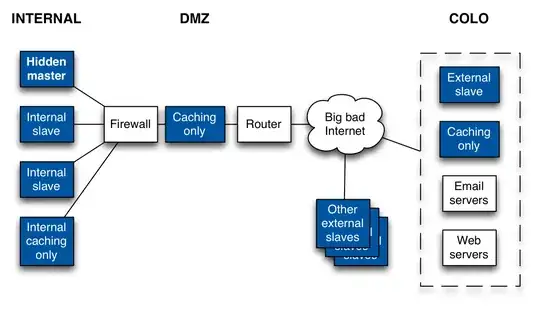

Here are my questions:

1) Is there anything obviously broken about this design?

Nothing is obviously wrong, at least that I can see.

2) Are there any missing or extraneous elements here?

Missing: Are you comfortable not having a hot standby for your hidden master? The system seems quite engineered (I don't want to call it over-engineered without seeing your use case) to rely on a single primary host. It's outside the scope of your diagram, but do you have a contingency plan for when [not if] the primary master blows up?

Extraneous: Keep in mind that every DNS server you add to the mix is another server that must be managed. Given your usage, is it critical to have this many DNS servers?

3) OK to run the hidden master on the same subnet as the internal slave servers?

I would expect the hidden master and the authoritative DNS slaves to be in the DMZ. Lock down the master appropriately. The internal slaves are answering authoritative lookups for your zone from the internet, correct? If the internal slaves only answer queries for your zone from internal hosts, then you either have a huge zone, a silly number of internal lookups against your internal zone (consider caching DNS servers at the host/workstation level), or you have given too much horsepower to internal DNS. If they are answering queries from the internet, I would expect them to be in the DMZ. You are free to label them how you want.

As for the master being on the same subnet as the slaves: lock it down. It should not be an issue (and will save you some routing overhead at zone transfer time).
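To make "lock it down" a bit more concrete, here is a minimal sketch of a named.conf fragment for the hidden master. It assumes BIND 9; the zone name, file path, and addresses are placeholders I made up, not taken from your diagram. Adding TSIG keys for the transfers would tighten it further.

```
// Hidden master: named.conf fragment (all names and addresses are hypothetical)
acl "slaves" {
    192.0.2.11;    // placeholder: slave 1
    192.0.2.12;    // placeholder: slave 2
};

options {
    recursion no;                 // the master only serves its own zones
    allow-transfer { none; };     // default: refuse all zone transfers
};

zone "example.com" {
    type master;
    file "master/example.com.zone";
    allow-transfer { "slaves"; };             // only the listed slaves may pull the zone
    notify explicit;                          // send NOTIFY only to the addresses below
    also-notify { 192.0.2.11; 192.0.2.12; };
};
```

A host firewall that only lets the slaves reach port 53 on the master complements this; the ACLs alone do not hide the daemon from the rest of the subnet.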

4) Given relatively light DNS traffic (< 1 Mbps) on the internal and DMZ networks, are there security issues to running the caching-only servers in jails (BSD-speak for VMs) on the authoritative servers? Or should they be on dedicated machines?

Yes. There are always security issues. But if the internal caching-only servers are locked down to accept traffic only from internal sources, are placed in jails (presumably on BSD), and are updated and monitored regularly, an attacker has a lot of work to do to exploit the environment.
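As a rough illustration of "accept only traffic from internal sources", a caching-only instance inside a jail could be restricted along these lines. This is a sketch assuming BIND 9; the internal range and the jail address are hypothetical placeholders.

```
// Caching-only resolver in a jail: named.conf fragment (addresses are hypothetical)
acl "internal" {
    10.0.0.0/8;    // placeholder: your internal address range
    localhost;
};

options {
    listen-on { 10.0.0.53; };             // placeholder: the jail's own address
    recursion yes;
    allow-query { "internal"; };          // refuse queries from anyone else
    allow-recursion { "internal"; };      // recurse only for internal clients
    allow-query-cache { "internal"; };    // keep cached answers internal too
    allow-transfer { none; };             // no zones to transfer here
};
```

Binding the resolver to the jail's address keeps it from colliding with the authoritative daemon listening on the host's address.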

Your biggest risk (note: I am not a professional risk analyst) is probably that an attacker, by a stroke of sheer miracle, hijacks one of your authoritative DNS slaves. That would likely result in partial defacement or, if the attacker is truly brilliant, some 'poisoning' and information theft (SSL/TLS puts a halter on that).

Next biggest (again: not a professional risk analyst) is corruption of the slave OS, requiring a re-install/restore.

Ultimately:

It's a fairly solid design, and without a view into the network (which you are not expected to provide), it is quite hard to find shortcomings or faults in it. The only thing that clearly stands out is that there are a lot of pieces, a complex setup, and a lot of engineering here. Make sure that there is a business case for it.

Ex: You could run BIND 9 as an authoritative slave that also does recursive/forwarding lookups and caching, all in one daemon. That also saves the multihoming, port forwarding, or other networking magic needed to get two DNS daemons answering on the same box.
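A sketch of what that all-in-one setup might look like, assuming BIND 9. The zone name, master address, and internal range are hypothetical, and recent BIND 9 releases also accept `secondary`/`primaries` as synonyms for `slave`/`masters`.

```
// Authoritative slave plus internal caching resolver in one daemon
// (all names and addresses are hypothetical)
acl "internal" {
    10.0.0.0/8;    // placeholder: your internal address range
    localhost;
};

options {
    recursion yes;
    allow-recursion { "internal"; };      // recursion for internal clients only
    allow-query-cache { "internal"; };    // outsiders never see cached data
    allow-transfer { none; };
};

zone "example.com" {
    type slave;
    file "slave/example.com.zone";
    masters { 192.0.2.10; };              // placeholder: the hidden master
    allow-query { any; };                 // authoritative answers for everyone
};
```

The trade-off is that the authoritative and recursive roles then share one process, so a bug in either path affects both.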