I know this has been discussed in several places, but there doesn't seem to be a definitive answer yet - at least not for RHEL 6. I'm just hoping someone might be able to point to how it's working so I can dig a little.

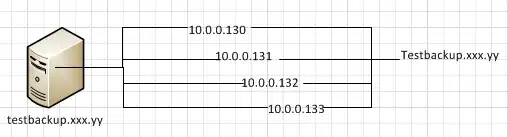

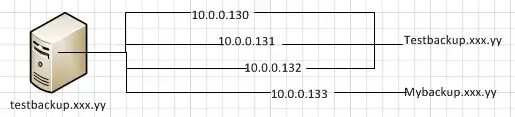

Short Version: I have an OpenVZ Host Node with a public IP address which is acting as a reverse proxy to a bunch of OpenVZ containers with private IP addresses. I've created 2 containers with the same name (if you want to know why, skip to the long version below), and the HN also has these entries (amongst others) in its /etc/hosts:

    # Generated by make_clone.sh
    10.0.0.130 testbackup.xxx.yy
    10.0.0.131 testbackup.xxx.yy

I can use OpenVZ to suspend / resume either of these hosts by ID, and the reverse proxy seems to magically route requests on to whichever host is running (i.e. to either IP address 10.0.0.130 or 10.0.0.131). But I can't for the life of me find out which bit of software is doing this. Is it Apache? Is it something in the networking system of the HN? Something else? It seems to work, but I'd like to know more about why/how, as there also seem to be big differences of opinion as to whether it should work at all. To be clear, I'm not looking for round-robin or load balancing here. Just simple, manual switchover from one OpenVZ container to another.

Long Version: Whilst setting up some scripts to create and manage a series of OpenVZ containers, I created a script called make_clone.sh which takes a template and creates a new container. The script takes 2 parameters - the container ID and the desired hostname. One of the things it does is allocate a new 10.0.0.* IP address for the container and configure some networking, one element of which is putting an entry into the Host Node's /etc/hosts file.
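The script itself isn't reproduced here. As a minimal sketch of what the /etc/hosts step amounts to (the function names `add_host_entry` and `ips_for_name` are hypothetical, and the real make_clone.sh may differ), assuming the entry is simply appended without checking for an existing name:

```shell
#!/bin/bash
# Hypothetical sketch of the /etc/hosts step; not the actual make_clone.sh.

# Append "IP NAME" to a hosts-format file. There is no duplicate check,
# which is how two containers with the same hostname end up with two
# lines for one name.
add_host_entry() {
    local hosts_file="$1" ip="$2" name="$3"
    printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
}

# Print every IP address that a hosts-format file maps to a given name.
ips_for_name() {
    local hosts_file="$1" name="$2"
    awk -v n="$name" '
        /^[[:space:]]*#/ { next }   # skip comment lines
        { for (i = 2; i <= NF; i++) if ($i == n) print $1 }
    ' "$hosts_file"
}
```

Running `ips_for_name /etc/hosts testbackup.xxx.yy` on the Host Node would then list both addresses once the second container has been created.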

Whilst testing some backup/restore scripts for these containers, I wanted to 'pretend' a particular container had died, spin up another with the same name and restore the backup. Rather than actually delete the original container, I just used vzctl stop 130 to take it offline. I then created a new container with ID 131, but with the same name. Once it was up, I restored the MySQL database and checked (via a browser) that I could access it - it's running Joomla with some customisations - and all was well.

But then I noticed that in the Host Node's /etc/hosts, I had these 2 entries (amongst others):

    # Generated by make_clone.sh
    10.0.0.130 testbackup.xxx.yy
    10.0.0.131 testbackup.xxx.yy

The Host Node is also acting as a reverse proxy. Only the Host Node has an external IP address, and its Apache configuration effectively maps subdomains onto Containers. So, as well as the entries above in /etc/hosts, it also has sections like this in the httpd config:

```apache
ServerName testbackup.xxx.yy
ProxyRequests Off
<Proxy *>
    Order deny,allow
    Allow from all
</Proxy>
ProxyPass /server-status !
ProxyPass / http://testbackup.xxx.yy/
ProxyPassReverse / http://testbackup.xxx.yy/
<Location />
    Order allow,deny
    Allow from all
</Location>
```

In the scenario I'm describing, the config would actually end up with 2 of these sections because of the scripts, but they would be identical. Each section refers to the Container by hostname, not by IP address, which makes me think it's not Apache per se that's picking the 'working' Container. I can now browse to http://testbackup.xxx.yy/ (using the real domain name, obviously) and Apache seems happy to route the requests on to whichever of 10.0.0.130 or 10.0.0.131 is up. I can switch between them by simply suspending / resuming the OpenVZ containers.
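One starting point for digging on the Host Node is to check what the resolver returns for the name and which of those addresses currently accepts a connection. The following is a minimal sketch, assuming `getent`, `timeout` and bash's `/dev/tcp` redirection are available; `probe_backends` is a hypothetical helper name. It only reports the state of the backends and does not show which component performs the switchover:

```shell
#!/bin/bash
# Hypothetical diagnostic helper: probe_backends NAME [PORT]
# For each address the system resolver returns for NAME, attempt a TCP
# connection to PORT (default 80) and report whether it succeeded.
probe_backends() {
    local name="$1" port="${2:-80}" ip
    # getent ahosts resolves via getaddrinfo, so /etc/hosts is consulted
    # according to the hosts line in /etc/nsswitch.conf.
    for ip in $(getent ahosts "$name" | awk '{ print $1 }' | sort -u); do
        # A connection that is refused or takes longer than 2 seconds
        # is reported as down.
        if timeout 2 bash -c "exec 3<>/dev/tcp/$ip/$port" 2>/dev/null; then
            echo "$ip up"
        else
            echo "$ip down"
        fi
    done
}
```

Calling `probe_backends testbackup.xxx.yy 80` with one container suspended should show whether the resolver hands back both addresses and which one answers.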

I wasn't expecting this to work - but it's nice that it does. My question is, should it? Can it be relied on? Or is it just a fluke that will stop working when whatever bit of fluff that's got into some other configuration file somewhere gets tidied up?