Yes, I still use multiple partitions and mountpoints on virtual machines to meet monitoring, security and maintenance requirements.

I'm not a fan of single-mountpoint or limited-mountpoint virtual machines (unless they're throwaway machines). I treat VMs the same way I treat physical servers. Aligning partitions with parts of the Linux Filesystem Hierarchy Standard still makes sense for logically separating executables, data, temporary storage and logs. It also eases system repair, especially with virtual machines and servers derived from a template.

(BTW, I don't like LVM on virtual machines either... Plan better!!)

In my systems, I try to do the following:

- / is typically small and does not grow much.
- /boot is predictable in size; its growth is controlled by the frequency of kernel updates.
- /tmp is application- and environment-dependent, but can be sized appropriately. Monitoring it separately helps meter abnormal behavior and protects the rest of the system.
- /usr should be predictable, containing executables, etc.
- /var grows, but the amount of data churn can be smaller. It's nice to be able to meter it separately.
- And a growth partition. In this case, it's /data, but if this were a database system, it might be /var/lib/mysql or /var/lib/pgsql. Note that it's on a different block device, /dev/sdb. This is simply another VMDK on this virtual machine, so it can be resized independently of the VMDK containing the OS partitions.

    # df -h
    Filesystem      Size  Used Avail Use% Mounted on
    /dev/sda2        12G  2.5G  8.8G  23% /
    tmpfs           7.8G     0  7.8G   0% /dev/shm
    /dev/sda1       291M  131M  145M  48% /boot
    /dev/sda7       2.0G   68M  1.9G   4% /tmp
    /dev/sda3       9.9G  3.5G  5.9G  38% /usr
    /dev/sda6       6.0G  892M  4.8G  16% /var
    /dev/sdb1       360G  271G   90G  76% /data
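
With separate filesystems, each mountpoint can get its own alert threshold. Below is a minimal Python sketch, assuming the mountpoints listed above; the threshold values and the `check_mountpoints` helper are hypothetical illustrations, not part of any particular monitoring tool. It computes percent used the way df's Use% column does (used divided by used plus available), although df rounds up, so the last digit may differ.

```python
import os
import shutil

# Hypothetical per-mountpoint alert thresholds (percent used).
# These values are illustrative assumptions only.
THRESHOLDS = {
    "/": 80,
    "/boot": 80,
    "/tmp": 50,
    "/usr": 85,
    "/var": 70,
    "/data": 90,
}


def percent_used(path):
    """Return percent used like df's Use%: used / (used + available)."""
    usage = shutil.disk_usage(path)  # free = space available to non-root users
    denominator = usage.used + usage.free
    if denominator == 0:
        return 0.0
    return 100.0 * usage.used / denominator


def check_mountpoints(thresholds):
    """Return (mountpoint, percent, limit) for each mounted path over its limit."""
    alerts = []
    for mountpoint, limit in thresholds.items():
        # Skip paths that are not separate mounts on this host; otherwise the
        # numbers would silently describe the parent filesystem instead.
        if not os.path.ismount(mountpoint):
            continue
        pct = percent_used(mountpoint)
        if pct > limit:
            alerts.append((mountpoint, round(pct, 1), limit))
    return alerts


if __name__ == "__main__":
    for mountpoint, pct, limit in check_mountpoints(THRESHOLDS):
        print(f"{mountpoint}: {pct}% used (limit {limit}%)")
```

On a VM with a single large /, only the "/" entry would ever be checked, so /tmp or /var growth could not trigger its own, tighter alert.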

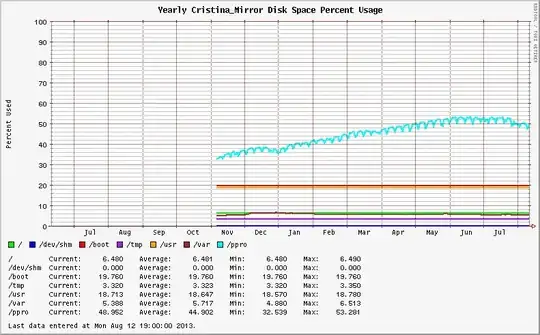

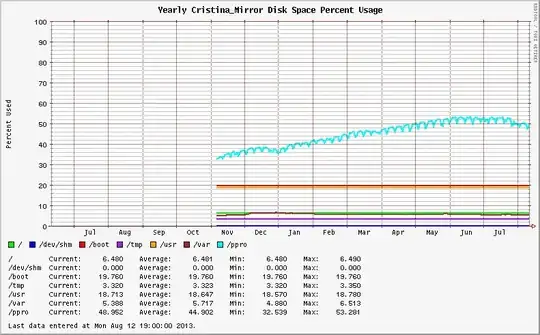

Separation of some of these partitions makes it far easier to identify trends and detect anomalous behavior; e.g. 4GB core dumps in /var, a process that exhausts /tmp,

Normal

Abnormal. The sudden rise in /var would not have been easy to detect if one large / partition were used.
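
A rough way to see why the /var spike stands out: the same absolute growth is a much larger share of a small, dedicated filesystem than of one large /. The sketch below uses made-up numbers (a 4 GB core dump landing on a 6.0 GB /var versus a hypothetical 30 GB single root filesystem) and a simple jump-between-samples rule; `to_percent` and `sudden_rises` are illustrative helpers, and real monitoring systems apply their own trend logic.

```python
def to_percent(used_gb, size_gb):
    """Convert a series of used-space samples (GB) to percent of capacity."""
    return [100.0 * used / size_gb for used in used_gb]


def sudden_rises(percent_series, max_jump):
    """Return indices where usage rose by more than max_jump points since the previous sample."""
    return [
        i
        for i in range(1, len(percent_series))
        if percent_series[i] - percent_series[i - 1] > max_jump
    ]


if __name__ == "__main__":
    # Hypothetical samples: a 4 GB core dump lands between sample 1 and sample 2.
    var_used = [0.9, 0.9, 4.9]      # dedicated 6.0 GB /var
    merged_used = [7.0, 7.0, 11.0]  # same data on one hypothetical 30 GB /

    print(sudden_rises(to_percent(var_used, 6.0), max_jump=20))      # [2]
    print(sudden_rises(to_percent(merged_used, 30.0), max_jump=20))  # []
```

On the dedicated /var the jump is about 67 percentage points; on the merged filesystem the same 4 GB is only about 13 points and slips under the same rule.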

Recently, I've had to apply a cocktail of filesystem mount parameters and attributes (nodev, nosuid, noexec, noatime, nobarrier) for a security-hardened VM template. The partitioning was an absolute requirement because some partitions needed specific settings that could not be applied globally. Another data point.
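
Per-mountpoint options like these are normally set in /etc/fstab. The following is a minimal Python sketch of auditing them against /proc/mounts; the `REQUIRED` policy is a hypothetical example rather than the template described above, and nobarrier is left out because support for it varies by filesystem and kernel version. A mountpoint that isn't a separate mount is reported as missing every option, which is exactly the situation partitioning avoids.

```python
import os

# Hypothetical hardening policy: options each mountpoint must carry.
REQUIRED = {
    "/tmp": {"nodev", "nosuid", "noexec"},
    "/var": {"nodev", "nosuid"},
    "/boot": {"nodev", "nosuid", "noexec"},
    "/data": {"nodev", "nosuid", "noatime"},
}


def parse_mounts(text):
    """Map mountpoint -> set of options from /proc/mounts-style text.

    Fields are: device, mountpoint, fstype, options, dump, pass.
    Later entries override earlier ones, matching the mount that is visible.
    Note: /proc/mounts escapes spaces in paths as \\040; this sketch does not decode them.
    """
    mounts = {}
    for line in text.splitlines():
        fields = line.split()
        if len(fields) < 4:
            continue
        mountpoint, options = fields[1], fields[3]
        mounts[mountpoint] = set(options.split(","))
    return mounts


def missing_options(mounts, required):
    """Return {mountpoint: sorted list of missing options}."""
    problems = {}
    for mountpoint, needed in required.items():
        have = mounts.get(mountpoint)
        if have is None:
            # Not a separate mount at all, so none of its options can be set.
            problems[mountpoint] = sorted(needed)
            continue
        gap = needed - have
        if gap:
            problems[mountpoint] = sorted(gap)
    return problems


if __name__ == "__main__" and os.path.exists("/proc/mounts"):
    with open("/proc/mounts") as f:
        print(missing_options(parse_mounts(f.read()), REQUIRED))
```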