I would recommend you post this over on the Network Engineering Stack Exchange site. However, I believe the following answers are solid.

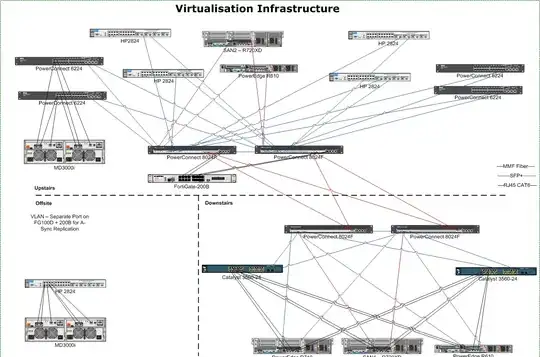

1) LAG is not what most people think it is. It increases the redundancy and aggregate capacity of an interconnect, not the bandwidth available to any single connection. If you have two 1Gb/s links, your total throughput capacity is 2Gb/s, but any single transfer will use at most 1Gb/s, because the switch hashes each flow onto one member link. What LAG does allow is two simultaneous 1Gb/s transfers. That being said, I see no reason to LAG your 8024 backbone (storage or network traffic?) switches unless you have unstated requirements; you are already set up for redundancy and MPIO. I would disable STP on the ports between the switches and the SAN and hosts. On Cisco I'd set those ports to "switchport mode access" and "spanning-tree portfast"; I don't know what the PowerConnect equivalent is. If you are concerned about redundancy, ensure that each switch has A and B power supplies and that you have independent A and B power circuits that the corresponding supplies are plugged into.
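To make the per-flow limit concrete, here is a minimal Python sketch of how a LAG spreads traffic. It assumes a generic hash-based member selection: real switches use their own vendor-specific hash over MAC/IP/port fields, so SHA-256 and the link names `link-A`/`link-B` are stand-ins, not PowerConnect or Cisco behavior.

```python
import hashlib

# ASSUMPTION: a generic 2 x 1 Gb/s LAG. Real switches choose the member link
# with a vendor-specific hash of MAC/IP/port fields; SHA-256 is a stand-in.
LINK_SPEED_GBPS = 1.0
MEMBER_LINKS = ["link-A", "link-B"]  # hypothetical member link names


def pick_member(src: str, dst: str, links: list[str]) -> str:
    """Map a flow to one member link; the same flow always gets the same link."""
    digest = hashlib.sha256(f"{src}->{dst}".encode()).digest()
    return links[digest[0] % len(links)]


def per_link_load(flows: list[tuple[str, str]], links: list[str]) -> dict[str, int]:
    """Count how many flows the hash places on each member link."""
    load = {link: 0 for link in links}
    for src, dst in flows:
        load[pick_member(src, dst, links)] += 1
    return load


# The bundle's aggregate capacity grows with the number of links...
aggregate = LINK_SPEED_GBPS * len(MEMBER_LINKS)
# ...but a single flow is pinned to one link, so it is capped at one link's speed.
single_flow_max = LINK_SPEED_GBPS

if __name__ == "__main__":
    flows = [(f"10.0.0.{i}", "10.0.1.5") for i in range(1, 9)]
    print(per_link_load(flows, MEMBER_LINKS))
    print(f"aggregate: {aggregate} Gb/s, single flow max: {single_flow_max} Gb/s")
```

Because `pick_member` is deterministic for a given (src, dst) pair, one large transfer never spreads across both links; only many distinct flows fill the bundle.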

2) For iSCSI, VMware has a white paper and setup guide here: http://www.vmware.com/files/pdf/techpaper/vmware-multipathing-configuration-software-iSCSI-port-binding.pdf. This document is very straightforward. For VM network traffic, it depends on your needs. As it stands, I would not configure any kind of LACP/LAG between the VM hosts and the switches. Per the recommendations in the VMware Networking Best Practices PDF, I would team 2 NICs per vSwitch (switch ports set to 802.1Q trunk with no spanning-tree, split across the two Ciscos) and use Active/Active teaming based on the originating virtual port ID on the VM host. Alternatively, put all 4 NICs in one vSwitch in Active/Active and connect 1 port from each NIC card to each switch. TL;DR: Read the VMware network best practices and design your LAN switching to your requirements. Nothing special needs to be done for the individual NICs for iSCSI; configure MPIO per the VMware guide. PAY ATTENTION TO STP SETTINGS FOR YOUR VLANS.
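The "originating virtual port ID" policy can be pictured with the simplified Python model below. It assumes uplink selection by `port_id % number_of_active_uplinks`, which is an illustration rather than ESXi's documented internals, and the `vmnic`-to-switch cabling (`cisco-A`/`cisco-B`, one port of each dual-port card per switch) is hypothetical. What it does capture is the behavior that matters here: each VM port uses exactly one uplink at a time and moves to a surviving uplink when its switch fails.

```python
# Simplified model of "Route based on originating virtual port ID" teaming.
# ASSUMPTION: uplink = active[port_id % len(active)]; ESXi's real selection
# logic may differ, but each VM port is still pinned to one uplink at a time.

# Hypothetical cabling: two dual-port NIC cards, one port of each card per switch.
UPLINK_TO_SWITCH = {
    "vmnic0": "cisco-A",  # card 1, port 1
    "vmnic1": "cisco-B",  # card 1, port 2
    "vmnic2": "cisco-A",  # card 2, port 1
    "vmnic3": "cisco-B",  # card 2, port 2
}


def active_uplinks(failed_switches: frozenset[str]) -> list[str]:
    """Uplinks whose upstream switch is still up, in a stable order."""
    return sorted(nic for nic, sw in UPLINK_TO_SWITCH.items() if sw not in failed_switches)


def assign(port_ids: list[int], failed_switches: frozenset[str] = frozenset()) -> dict[int, str]:
    """Pin each VM virtual port ID to exactly one surviving uplink."""
    uplinks = active_uplinks(failed_switches)
    if not uplinks:
        raise RuntimeError("no surviving uplinks: VM network traffic is down")
    return {pid: uplinks[pid % len(uplinks)] for pid in port_ids}


if __name__ == "__main__":
    vm_ports = [10, 11, 12, 13, 14]  # hypothetical virtual port IDs
    print("normal:      ", assign(vm_ports))
    print("cisco-A down:", assign(vm_ports, frozenset({"cisco-A"})))
```

With both switches up, the five VM ports spread over all four uplinks; with `cisco-A` down, every VM lands on `vmnic1` or `vmnic3`, both cabled to `cisco-B`. There are fewer uplinks, but no VM loses connectivity, and splitting each card across both switches means a single card failure is survivable too.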

3) It depends. For iSCSI traffic, if you have MPIO enabled, then yes: in theory you could lose 1 switch upstairs and 1 switch downstairs and keep running, albeit with degraded capacity. For VM network traffic, it depends on how your VMware vSwitches are configured and on your VLAN/STP environment. But if everything is properly configured, then yes, you could lose 1 Cisco and 1 8024 switch downstairs and continue running with degraded capacity.
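One way to sanity-check the "lose one switch per tier" claim is to list every path each service depends on and see which ones survive a given failure. The Python sketch below does that for a hypothetical topology: the vmnic, switch, and SAN controller port names are assumptions, iSCSI is assumed to use two port-bound NICs each cabled to a different 8024, and the fraction of surviving paths is only a rough proxy for remaining capacity.

```python
from itertools import product

# Hypothetical topology (all names are illustrative assumptions).
# A path is the set of components it depends on; it is usable only if
# none of those components has failed.
ISCSI_PATHS = [
    frozenset({"vmnic4", "8024-A", "ctrl0-port0"}),
    frozenset({"vmnic4", "8024-A", "ctrl1-port0"}),
    frozenset({"vmnic5", "8024-B", "ctrl0-port1"}),
    frozenset({"vmnic5", "8024-B", "ctrl1-port1"}),
]
VM_NETWORK_PATHS = [
    frozenset({"vmnic0", "cisco-A"}),
    frozenset({"vmnic1", "cisco-B"}),
    frozenset({"vmnic2", "cisco-A"}),
    frozenset({"vmnic3", "cisco-B"}),
]


def usable(paths: list[frozenset[str]], failed: set[str]) -> list[frozenset[str]]:
    """Paths that avoid every failed component."""
    return [p for p in paths if p.isdisjoint(failed)]


def one_switch_per_tier_report() -> dict[tuple[str, str], tuple[float, float]]:
    """For every (Cisco, 8024) failure pair, the fraction of paths left per service."""
    report = {}
    for cisco, dell in product(["cisco-A", "cisco-B"], ["8024-A", "8024-B"]):
        failed = {cisco, dell}
        report[(cisco, dell)] = (
            len(usable(ISCSI_PATHS, failed)) / len(ISCSI_PATHS),
            len(usable(VM_NETWORK_PATHS, failed)) / len(VM_NETWORK_PATHS),
        )
    return report


if __name__ == "__main__":
    for failed_pair, (iscsi, vm) in one_switch_per_tier_report().items():
        print(f"{failed_pair}: iSCSI paths left {iscsi:.0%}, VM network paths left {vm:.0%}")
```

In this model every combination of one Cisco plus one 8024 leaves half of each service's paths up, while losing both 8024s leaves no iSCSI path, which is why the MPIO paths need to be split across the two switches.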

That being said, the gotchas here will be your vSwitch configuration and making sure your VLAN and STP settings are correct. Good luck!
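Because the VLAN and STP details are where this design usually goes wrong, it can help to cross-check the vSwitch port groups against the switch-side trunk config. The Python sketch below is a hypothetical checker: the port-group names, VLAN IDs, and per-uplink inventory are made-up sample data (two entries are deliberately wrong). It assumes any uplink on the vSwitch may carry any port group after a failover, so every port-group VLAN must be allowed on every uplink's switch port, and that host-facing ports should be STP edge (PortFast) ports.

```python
# Hypothetical sample inventory: fill in from your vSwitch port groups and
# the running config of each switch port a host uplink is cabled to.
PORT_GROUP_VLANS = {"VM-Prod": 10, "VM-DMZ": 20, "vMotion": 30}

# uplink -> (switch, VLANs allowed on that trunk port, edge/PortFast enabled?)
UPLINK_SWITCH_PORTS = {
    "vmnic0": ("cisco-A", {10, 20, 30}, True),
    "vmnic1": ("cisco-B", {10, 20}, True),       # deliberately missing VLAN 30
    "vmnic2": ("cisco-A", {10, 20, 30}, False),  # deliberately not an edge port
}


def find_problems(
    port_groups: dict[str, int],
    uplinks: dict[str, tuple[str, set[int], bool]],
) -> list[str]:
    """List VLANs missing from uplink trunks and host ports that are not STP edge ports."""
    problems = []
    for nic, (switch, allowed, edge) in sorted(uplinks.items()):
        # ASSUMPTION: any uplink may carry any port group after a failover.
        for name, vlan in sorted(port_groups.items()):
            if vlan not in allowed:
                problems.append(f"{nic} ({switch}): VLAN {vlan} for '{name}' not allowed on trunk")
        if not edge:
            problems.append(f"{nic} ({switch}): host-facing port is not an STP edge/PortFast port")
    return problems


if __name__ == "__main__":
    for problem in find_problems(PORT_GROUP_VLANS, UPLINK_SWITCH_PORTS):
        print(problem)
```

Run against a correct inventory, `find_problems` returns an empty list.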