We are going to roll out tuned (and numad) on ~1000 servers, the majority of them being VMware servers either on NetApp or 3Par storage.

According to RedHats documentation we should choose the virtual-guestprofile.

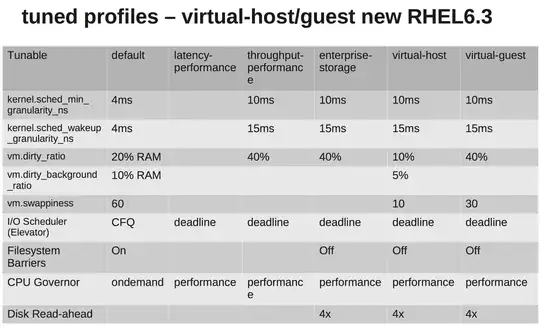

What it is doing can be seen here: tuned.conf

We are changing the IO scheduler to NOOP as both VMware and the NetApp/3Par should do sufficient scheduling for us.

However, after investigating a bit I am not sure why they are increasing vm.dirty_ratio and kernel.sched_min_granularity_ns.

As far as I have understood increasing increasing vm.dirty_ratio to 40% will mean that for a server with 20GB ram, 8GB can be dirty at any given time unless vm.dirty_writeback_centisecsis hit first. And while flushing these 8GB all IO for the application will be blocked until the dirty pages are freed.

Increasing the dirty_ratio would probably mean higher write performance at peaks as we now have a larger cache, but then again when the cache fills IO will be blocked for a considerably longer time (Several seconds).

The other is why they are increasing the sched_min_granularity_ns.

If I understand it correctly increasing this value will decrease the number of time slices per epoch(sched_latency_ns) meaning that running tasks will get more time to finish their work. I can understand this being a very good thing for applications with very few threads, but for eg. apache or other processes with a lot of threads would this not be counter-productive?