Edit: I thought this topic was finished months ago, but it has just been revived and now OP is asking for more 'real facts, quoted studies,' etc., so I figured what the heck.

Exploits of this nature are:

- Rare

- Sensitive in nature and therefore not shared openly; when they are, the vendor would typically patch them before anyone on this site ever knew about them

- Complicated and will vary by vendor

We can't say it's impossible to hack a hypervisor and gain access to other VMs. Nor can we quantify how much risk there is, except that experience shows it's pretty low, considering that you will not find many stories of attacks that utilized hypervisor exploits.

Here's a sort-of interesting article to the contrary that suggests that more than a few hypervisor-based attacks have been carried out.

However, with technology depending on hypervisors now more than ever, such exploits would be patched and guarded against with more urgency than almost any other type of exploit.

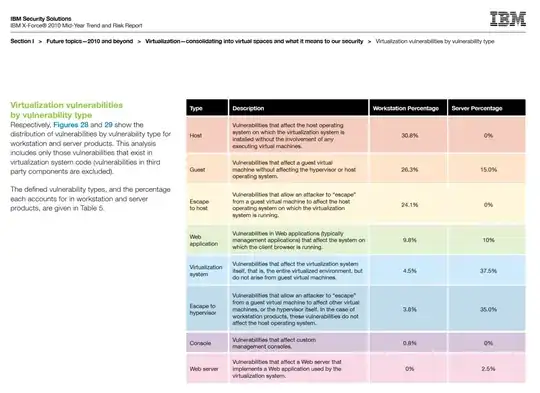

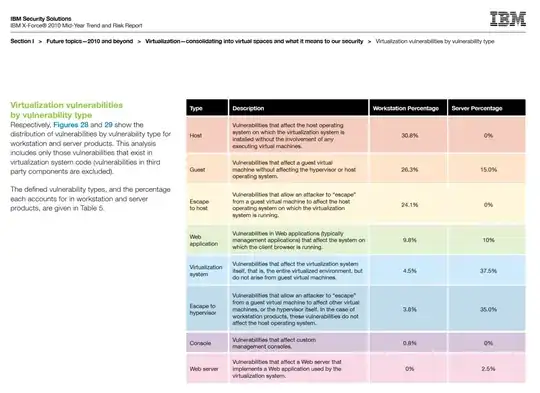

Here is an excerpt from the IBM X-Force 2010 Mid-Year Trend and Risk Report:

(Please open this image in a new tab to view it full-size.)

Notice the measured percentage of "Escape to hypervisor" vulnerabilities, which sounds pretty scary to me. Naturally you'd want to read the rest of the report as there is a lot more data in it to back up the claims.

Here is a story about a possible exploit carried out on the PlayStation 3 hypervisor, which is amusing. Maybe not as impactful to your business, unless your business is Sony, in which case it's extremely impactful.

Here is a wonderful article from Eric Horschman of VMware, in which he kind of comes off to me sounding like a teenager going full anti-Micro$oft, but it's still a good article. In this article, you'll find tidbits such as this:

> The residents at Microsoft’s glass house had some other stones to toss our way. Microsoft pointed to CVE-2009-1244 as an example of a guest breakout vulnerability in ESX and ESXi. A guest breakout exploit is serious business, but, once again, Microsoft is misrepresenting the facts. VMware responded quickly to patch that vulnerability in our products, and ESX was much less affected than Microsoft would lead you to believe:

Quibbling amongst competitors. But probably the most lucid thing he says in the entire article is this:

> The truth is, vulnerabilities and exploits will never completely go away for any enterprise software.