I have a very strange IO issue with Ubuntu 12.04 and MySQL.

Currently the machine is only a replicated slave with an occasional read query hitting it. The disk utilization spikes randomly, seemingly unrelated to MySQL usage. The machine runs only MySQL and no other services.
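One way to test whether the spikes really come from something other than `mysqld` is to diff the per-process I/O counters the kernel exposes in `/proc/<pid>/io` over a short window. The sketch below assumes a Linux kernel with per-task I/O accounting enabled and root privileges (other users' `io` files are not readable otherwise). The 10-second window and top-5 cut-off are arbitrary choices for illustration, not anything from the setup described here.

```python
import os
import time
from typing import Dict, List, Tuple


def parse_proc_io(text: str) -> Dict[str, int]:
    """Parse the 'key: value' lines of /proc/<pid>/io into a dict."""
    stats = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            stats[key.strip()] = int(value)
    return stats


def snapshot() -> Dict[int, Dict[str, int]]:
    """Read /proc/<pid>/io for every PID we are allowed to read."""
    snap = {}
    for entry in os.listdir("/proc"):
        if not entry.isdigit():
            continue
        try:
            with open("/proc/%s/io" % entry) as f:
                snap[int(entry)] = parse_proc_io(f.read())
        except (IOError, OSError):
            continue  # process exited or permission denied
    return snap


def top_io(before: Dict[int, Dict[str, int]],
           after: Dict[int, Dict[str, int]],
           n: int = 5) -> List[Tuple[int, int]]:
    """Return (bytes, pid) pairs ranked by read_bytes + write_bytes growth.

    read_bytes/write_bytes count bytes the process caused to be fetched
    from / sent to the storage layer; PIDs absent from `before` are skipped.
    """
    deltas = []
    for pid, stats in after.items():
        if pid not in before:
            continue
        b = before[pid]
        delta = (stats.get("read_bytes", 0) - b.get("read_bytes", 0)
                 + stats.get("write_bytes", 0) - b.get("write_bytes", 0))
        deltas.append((delta, pid))
    deltas.sort(reverse=True)
    return deltas[:n]


def comm(pid: int) -> str:
    """Short process name from /proc/<pid>/comm, or '?' if it is gone."""
    try:
        with open("/proc/%d/comm" % pid) as f:
            return f.read().strip()
    except (IOError, OSError):
        return "?"


if __name__ == "__main__":
    before = snapshot()
    time.sleep(10)  # hypothetical sampling window
    after = snapshot()
    for delta, pid in top_io(before, after):
        print("%8d %-16s %d bytes" % (pid, comm(pid), delta))
```

Running it as root during a spike and again during a quiet period would show whether `mysqld` or some other task (for example a kernel thread) dominates the disk traffic.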

Originally the machine was using ext4, which suffers from IO issues with MySQL, so I wiped it and reinstalled with ext3. After replication resumed, the disk utilization again spiked randomly, remained high for a few hours and then dropped off.

The MySQL usage follows the same pattern every day, but the disk utilization has no pattern: it spikes randomly and can remain high for several hours or just a few minutes. The one consistent spike is at 1am, when our MySQL backup (mysqldump) runs.

My next step is to downgrade to Ubuntu 10.04; the machine previously ran Debian 5 without any issues. We have a second, identical machine with the same issue, which in my mind rules out a hardware fault on a single machine.

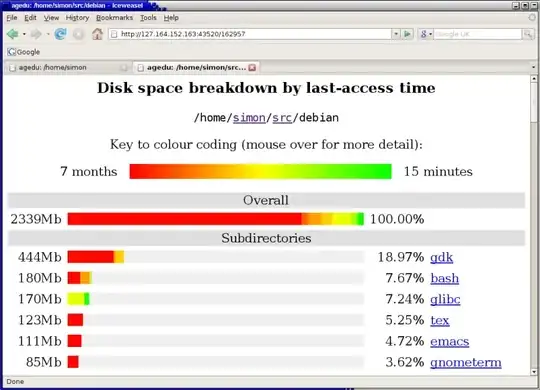

Disk Utilization Graph:

The initial spike at 5pm is replication catching up after the reinstall, and the spike at 1am is our backup. The issue appears at 4am and remains high until just after 12, when it drops off dramatically.
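To line the utilization spikes up against the MySQL graph at finer resolution, a timestamped %util log can be built directly from `/proc/diskstats`, which is where `iostat` gets its numbers. This is a minimal sketch assuming a Linux kernel whose `/proc/diskstats` lines carry the "milliseconds spent doing I/Os" counter as the 13th whitespace-separated field; the device name `sda` and the 1-second interval are placeholders, not details from this machine.

```python
import time
from typing import Dict


def read_io_ticks(text: str) -> Dict[str, int]:
    """Map device name -> milliseconds spent doing I/O (13th field of /proc/diskstats)."""
    ticks = {}
    for line in text.splitlines():
        fields = line.split()
        if len(fields) < 14:
            continue  # skip short or malformed lines
        ticks[fields[2]] = int(fields[12])
    return ticks


def util_percent(ticks_before: int, ticks_after: int, interval_ms: float) -> float:
    """Share of the interval the device was busy, capped at 100%."""
    busy = ticks_after - ticks_before
    return min(100.0, 100.0 * busy / interval_ms)


def sample(device: str = "sda", interval_s: float = 1.0) -> None:
    """Print one timestamped %util line per interval until interrupted."""
    with open("/proc/diskstats") as f:
        prev = read_io_ticks(f.read())[device]
    prev_t = time.monotonic()
    while True:
        time.sleep(interval_s)
        with open("/proc/diskstats") as f:
            cur = read_io_ticks(f.read())[device]
        now = time.monotonic()
        pct = util_percent(prev, cur, (now - prev_t) * 1000.0)
        print("%s %s util=%.1f%%" % (time.strftime("%Y-%m-%d %H:%M:%S"), device, pct))
        prev, prev_t = cur, now


if __name__ == "__main__":
    sample()  # hypothetical device "sda"; adjust to the data disk
```

Because each line carries a wall-clock timestamp, the output can be compared minute by minute with replication lag or the slow query log rather than only with the hourly graph.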

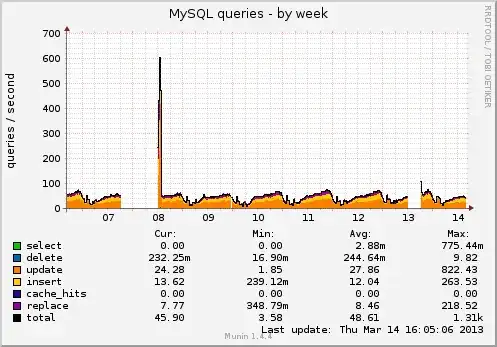

MySQL Weekly Graph:

This is our average MySQL usage over the week. The pattern is the same every day: busiest from 9am to 11pm, then quiet until 9am, with the lowest point each day around 4am.

Iostat output while the issue is happening:

/proc/mounts:

df -h: