We have a dataload job that moves a relatively large amount of data across the network between two sql servers. the servers are on the same subnet and there is only a switch between them. the data consists of several large varchar fields plus an xml field.

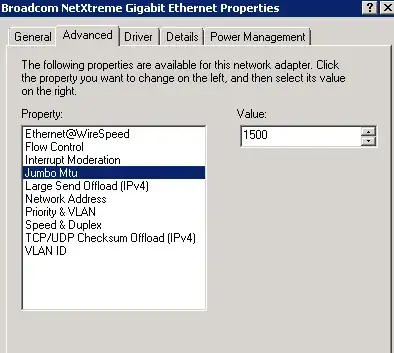

In order to increase throughput. I have tried changing the network packet size from the default 4096 to 32627 on the connection string; However it doesn't seem to be helping performance. I suspect the issue is that although we a running gig ethernet, "jumbo frames" are not enabled.

To confirm this, I tried two ping tests:

ping -l 1400 -f pdbsql01dul

Works

ping -l 4096 -f pdbsql01dul

Packet needs to be fragmented but DF set.

as you can see the largest packet size appears to be around 1400

My question is, if Jumbo frames are ~8096, is there any benefit of setting the network packet size larger than that?

Does this change if the connection is local to the server in question?