First time on ServerFault, and I've got a nice little conundrum.

For a few months now, we've been having issues with our internet connectivity.

Environment:

Servers: 2 Terminal Servers as an RDS farm running Windows Server 2008 R2

Browser: Internet Explorer 9

Test/debug browser: Chrome

AntiVirus: Avast 7.0.1455

Problem:

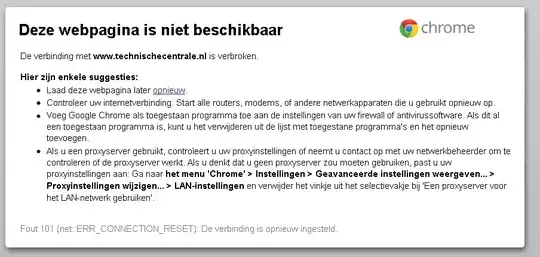

At irregular intervals, websites refuse to load, giving an error saying the page was not accessible, or some images don't load completely. Also, on inspection, several .js files fail to load.

Findings & What we tried:

First impression:

When I use Chrome during that interval, the site returns a net:: Error 101 or Error 103 after some refreshes. At other times, when it isn't giving the error, several images aren't visible and display an X image instead. IE just says the page cannot be displayed.

Using Chrome Developer Tools:

It shows in the console that several resources are unavailable, but when I right-click the missing images and select "Show Picture", they show. When I open up the pictures via direct URL, they also show.
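To see whether the failures also happen outside the browser, the same resources could be fetched on a schedule and the outcome logged with a timestamp. The following is a minimal sketch, not something we have run in production. The names `classify`, `fetch` and `probe` are hypothetical, and the comments linking Python's exceptions to Chrome's error 101 and 103 are based on my understanding that those codes mean "connection reset" and "connection aborted".

```python
import socket
import time
import urllib.error
import urllib.request


def classify(exc):
    """Map an exception from a fetch attempt to a short label."""
    # HTTPError is a subclass of URLError, so it has to be checked first.
    if isinstance(exc, urllib.error.HTTPError):
        return "http_%d" % exc.code
    # urllib wraps socket-level errors in URLError; unwrap to the real cause.
    if isinstance(exc, urllib.error.URLError) and isinstance(exc.reason, Exception):
        exc = exc.reason
    if isinstance(exc, ConnectionResetError):
        return "connection_reset"    # assumed equivalent of Chrome's error 101
    if isinstance(exc, ConnectionAbortedError):
        return "connection_aborted"  # assumed equivalent of Chrome's error 103
    if isinstance(exc, (TimeoutError, socket.timeout)):
        return "timeout"
    return type(exc).__name__


def fetch(url, timeout=10):
    """Download a URL completely and return the number of bytes received."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return len(resp.read())


def probe(urls, fetcher=fetch, clock=time.time):
    """Fetch each URL once and return (timestamp, url, outcome) tuples."""
    results = []
    for url in urls:
        try:
            outcome = "ok_%d_bytes" % fetcher(url)
        except Exception as exc:  # record every failure instead of stopping
            outcome = classify(exc)
        results.append((clock(), url, outcome))
    return results
```

Running `probe()` once a minute on both Terminal Servers, with the URLs of the .js and image files that fail, would show whether resets occur without any browser or browser cache involved.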

Audit via Chrome Developer Tools:

I ran an audit on a page while it was in its buggy state and found that some .js files didn't load, along with some .png, .jpg and .gif files. Which images load differs between Chrome and IE.

Obfuscated JS Files & Avast:

After checking that out, I found that most of those .js files are obfuscated, and since we're running Avast 7.0.1455, I wondered whether the Web Shield was messing things up.

Then again, it's only happening on the first TS, not the second.

So I turned off WebShield for a day to see if anything improved. It didn't. Back to square one.

No cache expiration on files:

Several of the files that aren't being loaded were flagged as not having a cache expiration.
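For reference, this is roughly the check I understand the audit to be doing: a response counts as having a cache expiration if it carries an `Expires` header or a `Cache-Control` header with `max-age` (or `s-maxage`). The function name `has_cache_expiration` is hypothetical and the exact rules Chrome applies are an assumption on my part.

```python
def has_cache_expiration(headers):
    """Return True if the response headers state an explicit freshness lifetime."""
    # Header names are case-insensitive, so normalise them first.
    lowered = {name.lower(): value for name, value in headers.items()}
    cache_control = lowered.get("cache-control", "").lower()
    for directive in cache_control.split(","):
        # Directives look like "max-age=3600" or just "public".
        name = directive.split("=", 1)[0].strip()
        if name in ("max-age", "s-maxage"):
            return True
    return "expires" in lowered
```

Feeding it the response headers of a failing file and of a working file would show whether the missing expiration is specific to the files that break.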

Caching:

One of our sysadmins changed the IE cache size to 10MB a while back, which I thought might have been the source of the problem. He changed it back to 65MB or so, but people still run into trouble with their images. It also still happens on one TS, and in Chrome as well, and I don't think the Group Policy dictating that cache would affect Chrome, would it?

Network Issue:

I also thought it might be a network or routing issue, but both TS servers are on the same teamed NIC, and the other one is working just fine.

Help!

If anyone has some tips on where to look for issues, or needs more info, please help me out. This has been bothering me for several weeks now.

EDIT & UPDATE

The problem still persists, and only on our 2 Terminal Servers.

Here's what a colleague and I have done so far:

Turned off the antivirus for a day on one server to see if the problem went away. Problem still occurred.

Checked the MTU size.

It's the default setting (forgot the exact value :P). Problem still occurred.

Installed Windows Updates and IE10. Problem still occurred.

Checked if there were any proxies.

The AV puts in a proxy as a so-called WebShield. We disabled the service and the program on one server for a day. Problem still occurred.

Reinstalled the NIC team as it was getting messed up (also reinstalled the NIC drivers). Problem still occurred.

Checked Group Policies.

Apparently on both Terminal Servers there was a Local Machine Policy that enabled Preference Mode in IE, which had some weird customisation done. Disabled that, and... Problem still occurred.
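For the MTU check in the list above, here is a small sketch of the arithmetic behind a "don't fragment" ping test. It assumes the default we saw is the usual Ethernet value of 1500 bytes (an assumption, since the exact value wasn't written down), and the helper names `max_ping_payload` and `ping_command` are hypothetical.

```python
IP_HEADER_BYTES = 20   # IPv4 header without options
ICMP_HEADER_BYTES = 8  # ICMP echo header


def max_ping_payload(mtu):
    """Largest ICMP payload that fits in one unfragmented packet of size mtu."""
    return mtu - IP_HEADER_BYTES - ICMP_HEADER_BYTES


def ping_command(host, mtu, count=4):
    """Build a Windows ping command sized to the given MTU."""
    # -f sets Don't Fragment, -l sets the payload size, -n the number of echoes.
    return ["ping", "-f", "-l", str(max_ping_payload(mtu)), "-n", str(count), host]
```

With an MTU of 1500 this gives a payload of 1472 bytes. If that size gets replies from an external host while a payload one byte larger is reported as needing fragmentation, the path can carry full-size packets and the MTU is probably not the culprit.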

It's now even gone so far as that people are having problems uploading and downloading files from SharePoint, and a lot of sites we're using aren't working due to this.

Hunches

It might be the WebShield breaking the connection when it finds something peculiar, but then it shouldn't happen when the AV is turned off.

It could also be that redirects are messed up somehow, or that there's something wrong with the cache. Strange, though, that the same issue occurs in Chrome as well as IE9 and IE10.

If anyone has any ideas, it'd be greatly appreciated.

Thanks go out to HopelessN00b for helping me out!

UPDATE:

We are getting some errors in Event Viewer like this on one of our original Terminal Servers:

Error: (04/04/2013 08:44:42 AM) (Source: Application Error) (User: )

Description: Faulting application name: iexplore.exe, version: 9.0.8112.16470, time stamp: 0x510c8801

Faulting module name: MSHTML.dll, version: 9.0.8112.16470, time stamp: 0x510c9046

Exception code: 0xc0000005

Fault offset: 0x002d0174

Faulting process id: 0x21728

Faulting application start time: 0xiexplore.exe0

Faulting application path: iexplore.exe1

Faulting module path: iexplore.exe2

Report Id: iexplore.exe3
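To compare crash entries like the one above across both servers, the key fields can be pulled out of the event text and the exception code turned into a name. This is only a sketch: `summarize_crash` is a hypothetical helper and the lookup table lists just a few well-known NTSTATUS values. As far as I know, 0xc0000005 is an access violation.

```python
import re

# A small subset of well-known NTSTATUS codes, not a complete list.
NTSTATUS_NAMES = {
    0xC0000005: "STATUS_ACCESS_VIOLATION",
    0xC00000FD: "STATUS_STACK_OVERFLOW",
    0xC0000409: "STATUS_STACK_BUFFER_OVERRUN",
}

# Capture the value that follows each field label, stopping at a comma or space.
FIELD = re.compile(
    r"(Faulting application name|Faulting module name|Exception code|Fault offset)"
    r": ([^,\s]+)"
)


def summarize_crash(text):
    """Extract the main fields from an Application Error event description."""
    fields = dict(FIELD.findall(text))
    if "Exception code" in fields:
        code = int(fields["Exception code"], 16)
        fields["Exception name"] = NTSTATUS_NAMES.get(code, "unknown")
    return fields
```

Running it over exported events from both Terminal Servers would show whether the crashes always involve the same module and fault offset.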

And sometimes this pops up, but apparently that's because some WYSE terminals are too old (hopefully replacing them with Raspberry Pis soon).

Error: (04/04/2013 11:21:46 AM) (Source: TermDD) (User: )

Description: The Terminal Server security layer detected an error in the protocol stream and has disconnected the client.

Client IP: [IP REDACTED].

Hope this helps.