I'm serving my website with Nginx as the web server.

I offer upload functionality to my users (they are allowed to submit pictures up to 5 MB), so I have the directive `client_max_body_size 5M;` in my server config.

What I've noticed is that the web server does not prevent uploads of files bigger than that limit. For example, suppose I try to upload a really big video (a movie) of 700 MB. The server does not reject the upload immediately. Instead it buffers all the data, which takes a long time and slows down the server, and only at the end of the upload does it return a 413 Request Entity Too Large error.

So the question is: is there any way to configure Nginx to block a large upload as soon as the transmitted data exceeds my `client_max_body_size` limit?

I think it would be really insecure to go into production with my current settings, and I wasn't able to find anything helpful on Google.

Edit:

I'm using PHP and Symfony2 as the backend.

Edit (again):

This is what appears in my error.log:

    2013/01/28 11:14:11 [error] 11328#0: *37 client intended to send too large body: 725207449 bytes, client: 33.33.33.1, server: www.local.example.com, request: "POST /app_dev.php/api/image/add/byuploader HTTP/1.1", host: "local.example.com", referrer: "http://local.example.com/app_dev.php/"

The weird thing is that I'm monitoring my nginx error.log with `tail -f error.log`, and the message appears immediately when the upload starts (before it ends). So nginx does perform some kind of early check, but it doesn't stop or cut off the upload request...
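
The wording "client intended to send" suggests nginx took the size from the `Content-Length` request header, i.e. before any of the body arrived. To make that concrete, here is a rough Python model of the check as I understand it. It is a simplified illustration under my own assumptions, not nginx's source. The size string is converted to bytes with `k`/`m`/`g` read as powers of 1024, and it is then compared with the declared length. The helper names `parse_nginx_size`, `body_too_large` and `intended_size` are hypothetical.

```python
import re

# Simplified model for illustration only; this is not nginx's own code.
# nginx size suffixes are binary multiples: 1k = 1024 bytes, 1m = 1024 * 1024.
_MULTIPLIERS = {"": 1, "k": 1024, "m": 1024 ** 2, "g": 1024 ** 3}


def parse_nginx_size(value: str) -> int:
    """Convert a size string such as '5M' or '512k' into bytes."""
    match = re.fullmatch(r"(\d+)([kKmMgG]?)", value.strip())
    if match is None:
        raise ValueError(f"not an nginx size: {value!r}")
    number, suffix = match.groups()
    return int(number) * _MULTIPLIERS[suffix.lower()]


def body_too_large(content_length: int, client_max_body_size: str) -> bool:
    """Header-time check: needs only the declared length, not the body itself."""
    limit = parse_nginx_size(client_max_body_size)
    # A limit of 0 disables the check in nginx.
    return limit != 0 and content_length > limit


def intended_size(log_line: str) -> int:
    """Pull the declared body size out of a 'too large body' error-log line."""
    match = re.search(r"too large body: (\d+) bytes", log_line)
    if match is None:
        raise ValueError("no 'too large body' message in line")
    return int(match.group(1))


LOG_LINE = ("2013/01/28 11:14:11 [error] 11328#0: *37 client intended to send "
            "too large body: 725207449 bytes, client: 33.33.33.1")

declared = intended_size(LOG_LINE)
print(declared, parse_nginx_size("6M"), body_too_large(declared, "6M"))
# prints: 725207449 6291456 True
```

With my config's `6M` the limit is 6291456 bytes, so the 725207449 bytes announced in the header are rejected without reading a single body byte.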

I also tried to check whether PHP ever gets control of the upload by putting `echo 'something'; die();` in the script that handles the upload, but it didn't print anything and didn't stop the upload request. So it must be nginx, or some PHP internals, that let the whole upload continue until it has been completely transmitted...
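
To separate what nginx does from what the browser and PHP do, a probe like the following might help. It sends only the headers of a POST that declares the same large `Content-Length`, then waits for a reply without sending any body bytes. This is a sketch under assumptions: the host, port and path come from my log above, the function names are hypothetical, and a timeout is interpreted as "the server is still waiting for the body". If a 413 comes back here, the early rejection itself works, which would point at the client side continuing to send the body.

```python
import socket
from typing import Optional


def build_request_head(host: str, path: str, content_length: int) -> bytes:
    """Request line plus headers of a POST, deliberately without a body."""
    lines = [
        f"POST {path} HTTP/1.1",
        f"Host: {host}",
        "Content-Type: application/octet-stream",
        f"Content-Length: {content_length}",
        "Connection: close",
        "",  # two empty entries make the join end with "\r\n\r\n",
        "",  # which terminates the header block
    ]
    return "\r\n".join(lines).encode("ascii")


def parse_status_code(response: bytes) -> Optional[int]:
    """Return the status code from e.g. b'HTTP/1.1 413 ...', or None."""
    status_line = response.split(b"\r\n", 1)[0]
    parts = status_line.split()
    if len(parts) < 2 or not parts[1].isdigit():
        return None
    return int(parts[1])


def probe(host: str, port: int, path: str, content_length: int,
          timeout: float = 5.0) -> Optional[int]:
    """Send only the headers; return the status the server replies with.

    None means no reply arrived before the timeout (the server is presumably
    waiting for the body) or the connection closed without a response.
    """
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(build_request_head(host, path, content_length))
        try:
            response = sock.recv(4096)
        except socket.timeout:
            return None
    return parse_status_code(response)
```

Usage against my local setup would be `print(probe("local.example.com", 80, "/app_dev.php/api/image/add/byuploader", 725207449))`.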

another edit:

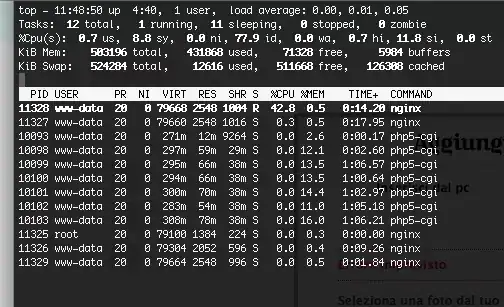

here's a view of my top (monitoring nginx and php) when a big file upload is in progress (nginx gets overloaded):

Edit: here's my nginx configuration:

    server {
        listen 80;
        server_name www.local.example.com local.example.com;
        access_log /vagrant/logs/example.com/access.log;
        error_log /vagrant/logs/example.com/error.log;
        root /vagrant/example.com/web;
        index app.php;
        client_max_body_size 6M;

        location /phpmyadmin {
            root /usr/share;
            index index.php;

            location ~* \.php {
                fastcgi_intercept_errors on;
                fastcgi_pass 127.0.0.1:9000;
                fastcgi_split_path_info ^(.+\.php)(/.*)$;
                include fastcgi_params;
                fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;
                fastcgi_param HTTPS off;
            }
        }

        location / {
            try_files $uri @rewriteapp;
        }

        location @rewriteapp {
            rewrite ^(.*)$ /app.php/$1 last;
        }

        # pass the PHP scripts to FastCGI server listening on 127.0.0.1:9000
        location ~ ^/(app|app_dev)\.php(/|$) {
            fastcgi_intercept_errors on;
            fastcgi_pass 127.0.0.1:9000;
            fastcgi_split_path_info ^(.+\.php)(/.*)$;
            include fastcgi_params;
            fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;
            fastcgi_param HTTPS off;
        }
    }