Postgres can scale up to as many processors as you care to install and your OS can manage effectively. You can install Postgres on a 128-core machine (or even a machine with 128 physical processors) and it will work fine. It may even perform better than on a 64-core machine, provided the OS scheduler handles that many cores well.

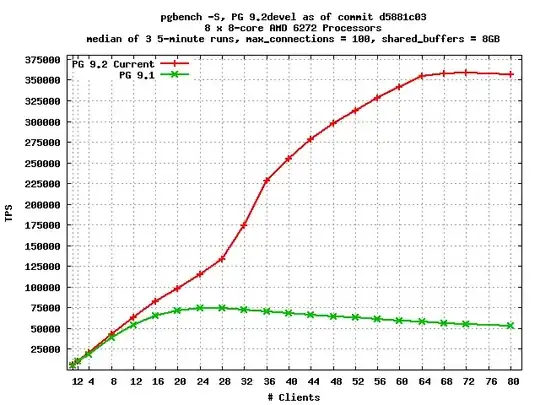

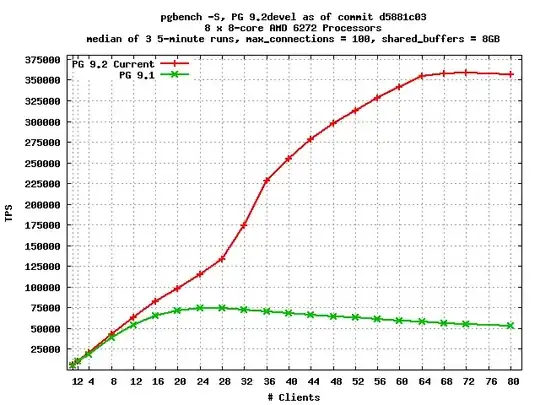

Postgres has been shown to scale linearly up to 64 cores (with caveats: We're talking about read performance, in a specific configuration (disk, RAM, OS, etc.) -- Robert Haas has a blog article with a nice graph which I've reproduced below:

What's important about this graph?

The relationship is linear (or nearly so) as long as the number of clients is less than or equal to the number of cores. Beyond that point, performance begins what looks like a roughly log-linear decline: there are now more client connections than cores to run Postgres backends on, so the backends start fighting for CPU time (the load average climbs above 1.0 per core, etc.).
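To make the shape of that curve concrete, here is a toy model, not fitted to Haas's data. It assumes each backend delivers a fixed, hypothetical `per_backend_tps` while it has a core to itself, and that once clients outnumber cores, throughput falls linearly in log(clients / cores) at a hypothetical `contention` rate. The function name and both parameters are made up for illustration.

```python
import math


def modeled_tps(clients: int, cores: int, per_backend_tps: float = 1000.0,
                contention: float = 0.1) -> float:
    """Toy model of read-only throughput vs. client count (hypothetical numbers).

    Up to one client per core, every backend has its own CPU, so throughput
    grows linearly with clients. Past that point the cores are saturated and
    backends compete for CPU time, so throughput falls linearly in
    log(clients / cores), clamped at zero.
    """
    if clients <= 0 or cores <= 0:
        raise ValueError("clients and cores must be positive")
    if clients <= cores:
        return per_backend_tps * clients
    peak = per_backend_tps * cores          # all cores busy, no oversubscription
    oversubscription = clients / cores      # > 1.0 means backends wait for CPU
    return max(0.0, peak * (1.0 - contention * math.log(oversubscription)))


if __name__ == "__main__":
    for n in (8, 16, 32, 64, 128, 256):
        print(f"{n:>4} clients on 64 cores: {modeled_tps(n, 64):>10.0f} tps")
```

The peak sits exactly where clients equal cores, which is the knee in the graph; the slope after the knee depends entirely on the assumed `contention` value.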

While this has only been demonstrated up to 64 cores, it's reasonable to generalize: you can keep adding cores (and clients) and keep improving performance until you hit the limit of some other subsystem (disk, memory, network). At that point processes are no longer contending for CPU; they are waiting on something else instead.
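The "some other subsystem becomes the limit" argument is a simple bottleneck calculation: overall throughput is capped by whichever resource saturates first. The sketch below assumes CPU capacity grows linearly with core count while disk, memory, and network have fixed ceilings; the function `bottleneck`, the dictionary `EXAMPLE_CAPS`, and all of the numbers are hypothetical.

```python
# Hypothetical throughput ceilings (transactions/second) for non-CPU subsystems.
EXAMPLE_CAPS = {"disk": 150_000.0, "memory": 400_000.0, "network": 250_000.0}


def bottleneck(cores: int, per_core_tps: float,
               subsystem_caps: dict[str, float]) -> tuple[str, float]:
    """Return (limiting subsystem, throughput ceiling).

    CPU capacity is modeled as cores * per_core_tps (the linear region);
    every other subsystem has a fixed cap. The smallest capacity wins.
    """
    caps = {"cpu": cores * per_core_tps, **subsystem_caps}
    limiting = min(caps, key=caps.get)
    return limiting, caps[limiting]


if __name__ == "__main__":
    for n in (32, 64, 128, 256):
        name, tps = bottleneck(n, 1000.0, EXAMPLE_CAPS)
        print(f"{n:>3} cores: limited by {name} at {tps:,.0f} tps")
```

With these made-up numbers, adding cores helps up to 128 cores; at 256 cores disk becomes the limit and the extra CPUs mostly sit waiting.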

(Haas also has another article demonstrating linear scalability to 32 cores, which includes some great reference material on scalability in general. Highly recommended background reading!)