Time for some science, bitches. The test setup:

- Windows 7 x64

- 2GB RAM

- Virtual machine on ESXi 5.0

- LUN 1: 5GB, thick provisioned, on a 2x HP P4000 LeftHand cluster (Cluster 1), exposed via iSCSI (2x 1Gbps MPIO)

- LUN 2: 5GB, thick provisioned, on a 2x HP P4000 LeftHand cluster (Cluster 2), exposed via iSCSI (2x 1Gbps MPIO)

We have a total of two LUNs on two separate clusters. I have artificially limited the maximum throughput on these LUNs so that I don't impact the real systems running on the arrays, but for the purpose of comparing the results, that should be enough.

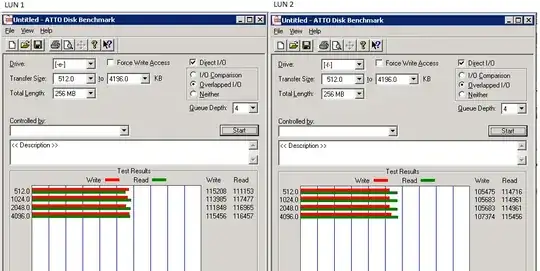

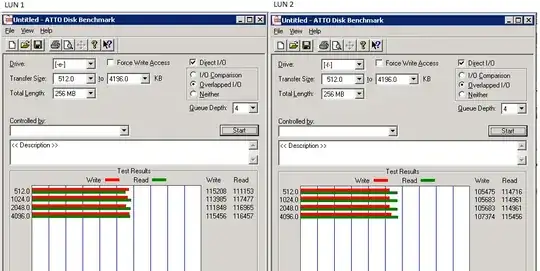

Step 1: Benchmark the LUNs individually

I created two individual simple volumes, formatted as NTFS with 4KB allocation units.

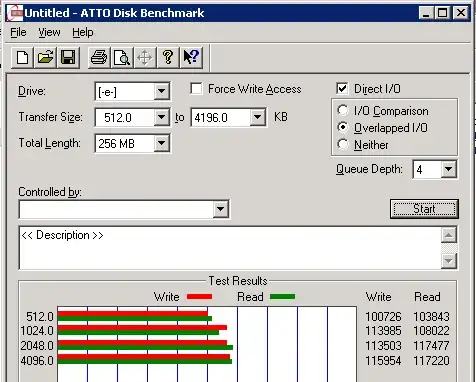

ATTO Disk Benchmark, transfer sizes from 512B to 4096KB:

Both LUN1 and LUN2 consistently average a maximum of about 1Gbps of throughput (LUN2 is ever so slightly slower, as it runs on SATA disks rather than SAS disks).
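ATTO reports its results in MB/s, while the SAN graphs below are in Gbps, so a quick conversion makes the two comparable. This is a minimal sketch of the raw arithmetic only; it ignores Ethernet, TCP and iSCSI overhead, so real transfers land somewhat below these figures. The 2Gbps case is just the theoretical ceiling of both MPIO paths, not something measured here.

```python
def gbps_to_mb_per_s(gbps: float) -> float:
    """Convert a line rate in gigabits/s to decimal megabytes/s (1 MB = 10**6 bytes)."""
    return gbps * 1e9 / 8 / 1e6

def gbps_to_mib_per_s(gbps: float) -> float:
    """Convert a line rate in gigabits/s to binary mebibytes/s (1 MiB = 2**20 bytes)."""
    return gbps * 1e9 / 8 / 2**20

if __name__ == "__main__":
    # 1.0 = one 1Gbps path; 2.0 = both MPIO paths at full rate (theoretical ceiling)
    for rate in (1.0, 2.0):
        print(f"{rate:.0f} Gbps ~ {gbps_to_mb_per_s(rate):.1f} MB/s "
              f"({gbps_to_mib_per_s(rate):.1f} MiB/s), before protocol overhead")
```

So a LUN that tops out around 1Gbps should show up in ATTO as something a little under 125 MB/s.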

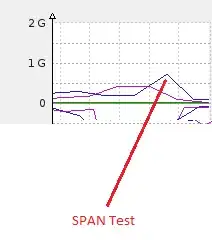

If I look at the data collected from each SAN cluster itself, I see a similar story:

Both LUNs output approx 1Gbps of traffic during each test.

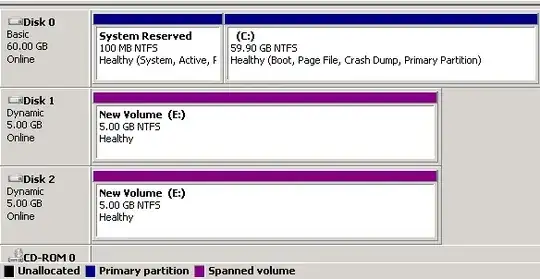

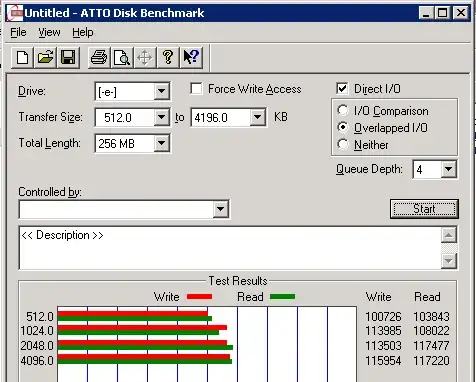

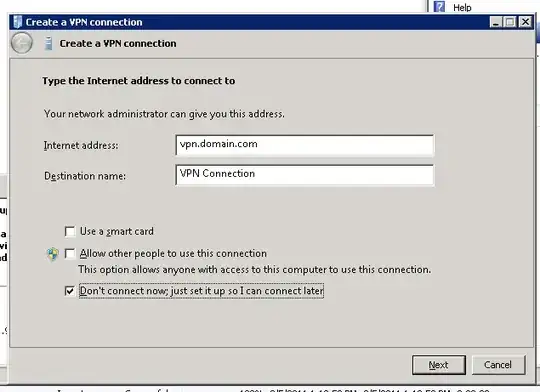

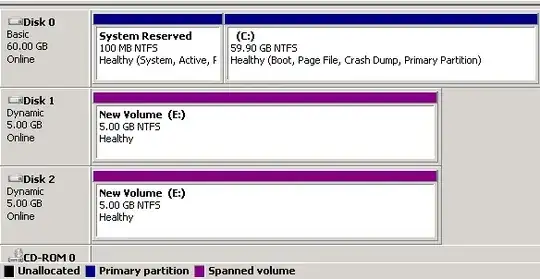

Step 2: Benchmark the LUNs as a spanned volume

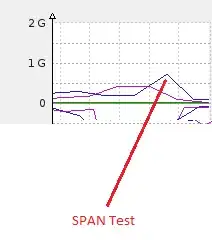

OK; so far everything is as we expected. Now we convert those two disks to dynamic disks, create a single spanned 10GB volume and run the same benchmark:

And what do you know, an ever so slight drop in performance, but all in all we can call that identical to the first two tests. But, most importantly, looking at the data collected from the SAN, only one LUN was ever active:

One would assume that the second LUN only becomes active once the first LUN is full. Hence "span".
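The difference between spanning and striping comes down to how a volume offset maps onto the member disks. A spanned volume concatenates its members, so a benchmark file near the start of an empty volume lives entirely on the first LUN; a striped volume rotates fixed-size stripe units across the members, so even a small sequential file hits both. This is a simplified model, not Windows' actual on-disk layout: it ignores dynamic-disk (LDM) metadata and NTFS placement, the 64 KiB stripe unit is an assumption, and the 256 MiB test file and `disks_touched` helper are hypothetical.

```python
from typing import Callable, List, Tuple

KIB = 1024
GIB = 1024 ** 3

def span_map(offset: int, disk_sizes: List[int]) -> Tuple[int, int]:
    """Spanned (concatenated) volume: fill disk 0 completely, then disk 1, and so on.
    Returns (disk index, offset within that disk)."""
    for disk, size in enumerate(disk_sizes):
        if offset < size:
            return disk, offset
        offset -= size
    raise ValueError("offset is beyond the end of the volume")

def stripe_map(offset: int, n_disks: int, stripe_unit: int = 64 * KIB) -> Tuple[int, int]:
    """Striped volume: consecutive stripe units rotate round-robin across the disks.
    The 64 KiB default stripe unit is an assumption for illustration."""
    unit_index, within = divmod(offset, stripe_unit)
    row, disk = divmod(unit_index, n_disks)
    return disk, row * stripe_unit + within

def disks_touched(start: int, length: int,
                  mapper: Callable[[int], Tuple[int, int]],
                  step: int = 4 * KIB) -> List[int]:
    """Hypothetical helper: which member disks a sequential I/O of `length` bytes hits."""
    return sorted({mapper(off)[0] for off in range(start, start + length, step)})

if __name__ == "__main__":
    luns = [5 * GIB, 5 * GIB]        # the two 5GB LUNs, modelled as 5 GiB each
    test_file = 256 * 1024 * KIB     # hypothetical 256 MiB benchmark file at offset 0
    print("spanned:", disks_touched(0, test_file, lambda o: span_map(o, luns)))
    print("striped:", disks_touched(0, test_file, lambda o: stripe_map(o, len(luns))))
```

Under this model the spanned volume only ever touches disk 0 until the first 5GB is used up, which matches what the SAN graphs showed, while the striped layout splits the same file across both LUNs.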

Step 3: For shits and giggles

I have limited the bandwidth here so that I don't impact our live systems, but I suggest you repeat all of this on your own servers to see what kind of performance you get. If it's not enough, then I suggest trying a striped set. Normally I would never suggest this, because if you lose a LUN you're screwed. But if you're comfortable that your SAN provider can keep both LUNs online (in this example, each LUN sits on a fault-tolerant cluster, so the chances of it going offline are slim), then you might want to try striping and benchmarking to see whether you get the performance you require. And let's be honest: striped or spanned, if you lose one disk you lose the lot anyway, so the risk is pretty high either way.
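The "lose one, lose the lot" point can be put in numbers: a spanned or striped volume is only usable while every member LUN is online, so its availability is the product of the members' availabilities. This sketch assumes the LUNs fail independently, and the 99.9% per-LUN figure is purely hypothetical; plug in whatever your SAN actually delivers.

```python
from math import prod
from typing import Iterable

HOURS_PER_YEAR = 24 * 365

def volume_availability(member_availability: Iterable[float]) -> float:
    """A spanned or striped volume needs every member LUN online.
    Assuming independent failures, its availability is the product of the members'."""
    return prod(member_availability)

def downtime_hours_per_year(availability: float) -> float:
    """Expected hours per year the volume is unavailable."""
    return (1.0 - availability) * HOURS_PER_YEAR

if __name__ == "__main__":
    lun = 0.999  # hypothetical per-LUN availability (99.9%)
    for label, members in (("single LUN", [lun]), ("2-LUN span/stripe", [lun, lun])):
        a = volume_availability(members)
        print(f"{label}: {a:.4%} available, "
              f"~{downtime_hours_per_year(a):.1f} h/year down")
```

With these made-up numbers the expected downtime of the two-LUN volume roughly doubles compared with a single LUN, and it is the same whether the members are spanned or striped.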

That's all for now; let me go and clear all the network alerts that have been triggered because a single initiator is consuming more than its fair share of bandwidth...