I've hit a bit of a wall with our network scale-out. As it stands right now:

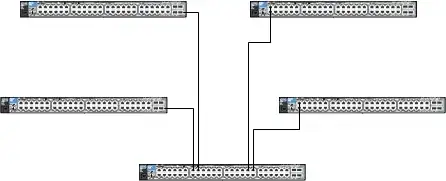

We have five ProCurve 2910al switches connected as above, but with 10GbE connections (two CX4, two fiber). This fully populates the central switch, so no more 10GbE connections can come from that device. This group of switches is not stacked (no stack directive).

Sometime in the next two or three months I'll need to add a sixth, and I'm not sure how deep a hole I'm in. Ideally I'd replace the core switch with something more capable that has more 10GbE ports. However, that means a major outage, which requires special scheduling.

The two edge switches connected via fiber have dual-port 10GbE cards in them, so I could physically hang another switch off the far end of one of those. I don't know how good or bad an idea that would be, though.

Is that too many segments between end-points?
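To make the question concrete, here is a rough sketch in Python that counts the worst-case number of inter-switch links a frame would cross today versus with a sixth switch hung off one of the fiber edge switches. The switch names (`core`, `cx4-a`, `fiber-a`, `new-sw`, and so on) are placeholders invented for illustration, not our real hostnames, and the topology is assumed to be a plain star as in the diagram.

```python
from collections import deque

def link_hops(links, src, dst):
    """Breadth-first search: number of inter-switch links between src and dst."""
    adj = {}
    for a, b in links:
        adj.setdefault(a, set()).add(b)
        adj.setdefault(b, set()).add(a)
    seen = {src: 0}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            return seen[node]
        for nxt in adj.get(node, ()):
            if nxt not in seen:
                seen[nxt] = seen[node] + 1
                queue.append(nxt)
    return None  # not reachable

def worst_case(links):
    """Longest shortest path, in links, between any two switches."""
    nodes = sorted({n for link in links for n in link})
    return max(link_hops(links, a, b) for a in nodes for b in nodes)

# Assumed current layout: one core switch, four edge switches (placeholder names).
current = [("core", "cx4-a"), ("core", "cx4-b"),
           ("core", "fiber-a"), ("core", "fiber-b")]

# Hypothetical layout: sixth switch on the spare 10GbE port of one fiber edge switch.
proposed = current + [("fiber-a", "new-sw")]

print(worst_case(current))   # 2
print(worst_case(proposed))  # 3
```

So under these assumptions the worst-case path grows from two links to three, and anything on the new switch also shares the single 10GbE uplink of the switch it hangs off.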

Some config excerpts:

    Running configuration:

    ; J9147A Configuration Editor; Created on release #W.14.49

    hostname "REDACTED-SW01"
    time timezone 120
    module 1 type J9147A
    module 2 type J9008A
    module 3 type J9149A
    no stack
    trunk B1 Trk3 Trunk
    trunk B2 Trk4 Trunk
    trunk A1 Trk11 Trunk
    trunk A2 Trk12 Trunk
    vlan 15
       name "VM-MGMT"
       untagged Trk2,Trk5,Trk7
       ip helper-address 10.1.10.4
       ip address 10.1.11.1 255.255.255.0
       tagged 37-40,Trk3-Trk4,Trk11-Trk12
       jumbo
       ip proxy-arp
       exit