This has happened to me twice within just a few days: my server goes down completely. HTTP, SSH, FTP, DNS, SMTP, basically ALL services stop responding, as if the server had been turned off, except that it still responds to ping, which is what baffles me most.

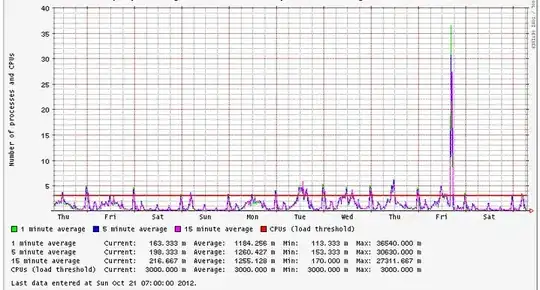

I do have some PHP scripts that put a huge CPU and memory load on the server in short bursts; they are used by a small group of users. Usually the server survives these bursts perfectly well, and the crashes never coincide with such usage peaks (I'm not saying they can't be related, but the server doesn't go down right after one).
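To check whether the crashes really are unrelated to those bursts, one option is to record load and free memory at a fixed interval and look at the last entries written before each crash. The following is a minimal sketch, assuming a Python 3 interpreter is installed (the stock CentOS 5 interpreter is Python 2.4, so this is an assumption); the log path `/var/log/loadsample.log` and the 10-second interval are hypothetical choices. It reads the standard `/proc/loadavg` and `/proc/meminfo` files and calls `os.fsync` after every write so the samples are less likely to be lost in the page cache when the machine is hard-rebooted.

```python
import os
import time


def parse_meminfo(text):
    """Parse /proc/meminfo-style text into a dict of field name -> value in kB."""
    info = {}
    for line in text.splitlines():
        if ":" not in line:
            continue
        key, rest = line.split(":", 1)
        parts = rest.split()
        if parts and parts[0].isdigit():
            info[key.strip()] = int(parts[0])
    return info


def format_sample(loadavg_text, meminfo_text, now=None):
    """Build one log line: timestamp, 1-minute load, free RAM and free swap (kB)."""
    now = time.time() if now is None else now
    load1 = float(loadavg_text.split()[0])
    mem = parse_meminfo(meminfo_text)
    stamp = time.strftime("%Y-%m-%d %H:%M:%S", time.localtime(now))
    return "%s load1=%.2f memfree_kb=%d swapfree_kb=%d" % (
        stamp, load1, mem.get("MemFree", -1), mem.get("SwapFree", -1))


def sample_forever(log_path="/var/log/loadsample.log", interval=10):
    """Append one sample every `interval` seconds, syncing to disk each time
    so the last lines before a hard reboot are not lost in the page cache.
    The path and interval are hypothetical defaults."""
    while True:
        with open("/proc/loadavg") as f:
            loadavg = f.read()
        with open("/proc/meminfo") as f:
            meminfo = f.read()
        with open(log_path, "a") as log:
            log.write(format_sample(loadavg, meminfo) + "\n")
            log.flush()
            os.fsync(log.fileno())
        time.sleep(interval)
```

Started at boot (for example under `nohup`) by calling `sample_forever()`, the tail of the log after the next hang would show whether free memory or swap was collapsing beforehand.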

I'm not asking you to magically tell me the root cause of these crashes. My question is: is there a single process whose death could bring down all these services at once? The strange part is that every network service goes down except ping. If some process were eating 100% of the CPU, I'd expect the server not to answer ping either. If Apache crashed because of, say, a broken PHP script, that would affect HTTP only, not SSH, DNS, and so on.
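Since ping keeps answering while the TCP services do not, it can help to record exactly when each service stops accepting connections, probing from a second machine. A minimal sketch, assuming Python 3 on the monitoring host; the service names and default ports in the hypothetical `DEFAULT_SERVICES` table are assumptions to adjust to the actual configuration, and DNS is checked over TCP port 53 only. ICMP ping is deliberately left out, since sending raw ICMP packets from Python requires root privileges.

```python
import socket
import time

# Hypothetical service table: default ports assumed, adjust to the real setup.
DEFAULT_SERVICES = {"ftp": 21, "ssh": 22, "smtp": 25, "dns": 53, "http": 80}


def tcp_alive(host, port, timeout=5.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        conn = socket.create_connection((host, port), timeout=timeout)
    except OSError:  # refused, unreachable, or timed out
        return False
    conn.close()
    return True


def check_services(host, services=DEFAULT_SERVICES, timeout=5.0):
    """Map each service name to whether its TCP port accepted a connection."""
    return {name: tcp_alive(host, port, timeout) for name, port in services.items()}


def report(host, services=DEFAULT_SERVICES):
    """Return one timestamped status line such as '... http=down ssh=up'."""
    status = check_services(host, services)
    parts = ["%s=%s" % (name, "up" if ok else "down")
             for name, ok in sorted(status.items())]
    return time.strftime("%Y-%m-%d %H:%M:%S") + " " + " ".join(parts)
```

Running `print(report("server.example.com"))` from cron every minute (the hostname is hypothetical) would give a timeline showing whether the services drop one after another or all at the same moment.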

My OS is CentOS 5.6.

Most importantly, after hard-rebooting the server, which system logs should I look at? `/var/log/messages` doesn't reveal anything suspicious.
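When nothing shows up in the current `/var/log/messages`, the rotated copies are worth checking too, and a machine that hangs hard may not manage to write anything at all before the reboot. A minimal sketch, assuming Python 3; the regular expression lists strings the kernel typically logs for out-of-memory kills, panics, oopses, and lockups, but the pattern list is an assumption and far from exhaustive. The rotated-file naming (`messages.1`, or `.gz` if compression is enabled) depends on the local logrotate configuration.

```python
import glob
import gzip
import re

# Assumed patterns: kernel messages commonly seen for OOM kills, panics,
# oopses and lockups. Case-sensitive to limit false positives; extend as needed.
SUSPICIOUS = re.compile(r"Out of memory|oom-killer|Kernel panic|BUG:|Oops|segfault|soft lockup")


def open_log(path):
    """Open a log file as text, transparently handling gzip-rotated copies."""
    if path.endswith(".gz"):
        return gzip.open(path, "rt", errors="replace")
    return open(path, errors="replace")


def scan_lines(lines, pattern=SUSPICIOUS):
    """Yield (line_number, line) for every line that matches pattern."""
    for number, line in enumerate(lines, 1):
        if pattern.search(line):
            yield number, line.rstrip("\n")


def scan_logs(glob_pattern="/var/log/messages*"):
    """Print matches from the current log and any rotated copies."""
    for path in sorted(glob.glob(glob_pattern)):
        with open_log(path) as f:
            for number, line in scan_lines(f):
                print("%s:%d: %s" % (path, number, line))
```

Calling `scan_logs()` as root prints each match prefixed with its file and line number, which makes it easy to line the hits up against the times of the two crashes.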