The rmem_max Linux setting (net.core.rmem_max) caps the size of the socket receive buffer an application can request, which includes the buffer that receives UDP packets.

When traffic becomes too busy, packet loss starts occurring.

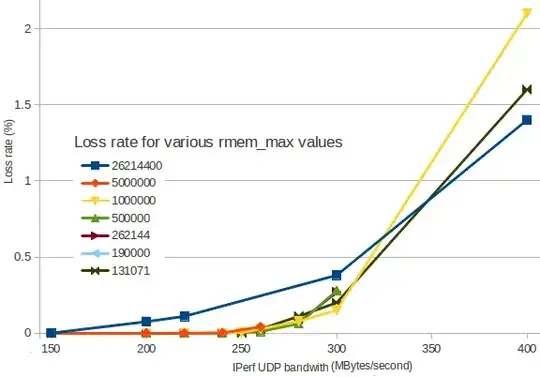

I made a graph showing how packet loss increases depending on the incoming bandwidth.

(I used iperf to generate UDP traffic between two VM instances.)

The different colors correspond to different rmem_max values.

As you can see, setting rmem_max to 26214400 (dark blue) results in packet loss earlier than smaller values. Linux's default value of 131071 (dark green) looks reasonable.

Under these conditions, why does the JBoss documentation recommend setting rmem_max to 26214400?

Is it because UDP traffic is expected to be higher than 350 MBytes/second? I don't think anything would work with more than 1% packet loss anyway...

What am I missing?

Details: I ran `sysctl -w net.core.rmem_max=131071` (for instance) on both nodes, then used one node as the server with `iperf -s -u -P 0 -i 1 -p 5001 -f M` and the other as the client with `iperf -c 172.29.157.3 -u -P 1 -i 1 -p 5001 -f M -b 300M -t 5 -d -L 5001 -T 1`.
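
For reference, here is a minimal Python sketch of how a socket's receive buffer relates to rmem_max. It was not part of the measurements above, and the function name `effective_rcvbuf` is hypothetical. It assumes Linux behaviour, where a request made through `SO_RCVBUF` is silently capped at rmem_max and the value read back is doubled by the kernel to account for bookkeeping overhead. A socket that never makes such a request keeps the default size rather than rmem_max.

```python
import socket


def effective_rcvbuf(requested_bytes):
    """Return (default_size, granted_size) for a fresh UDP socket.

    default_size is what the socket has before any request;
    granted_size is what the kernel reports after asking for
    requested_bytes via SO_RCVBUF.
    """
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        # Size the socket gets when the application asks for nothing.
        default_size = sock.getsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF)
        # Ask for a specific size; on Linux this is capped at rmem_max.
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF, requested_bytes)
        # Read back what the kernel reports after the request.
        granted_size = sock.getsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF)
    finally:
        sock.close()
    return default_size, granted_size


if __name__ == "__main__":
    default_size, granted_size = effective_rcvbuf(26214400)
    print("default receive buffer:", default_size, "bytes")
    print("reported after requesting 26214400:", granted_size, "bytes")
```

Running this on each node after changing rmem_max should show whether the larger limit changes the buffer a socket ends up with, assuming the application under test requests a size at all.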