I've built a number of these "all-in-one" ZFS storage setups. Initially inspired by the excellent posts at Ubiquitous Talk, my solution takes a slightly different approach to the hardware design, but yields the result of encapsulated virtualized ZFS storage.

To answer your questions:

Determining whether this is a wise approach really depends on your goals. What are you trying to accomplish? If you have a technology (ZFS) and are searching for an application for it, then this is a bad idea. You're better off using a proper hardware RAID controller and running your VMs on a local VMFS partition. It's the path of least resistance. However, if you have a specific reason for wanting to use ZFS (replication, compression, data security, portability, etc.), then this is definitely possible if you're willing to put in the effort.

Performance depends heavily on your design, regardless of whether you're running on bare metal or virtualized. Using PCI-passthrough (or AMD IOMMU in your case) is essential, since it gives your ZFS VM direct access to a SAS storage controller and its disks. As long as the VM is allocated an appropriate amount of RAM and CPU resources, performance is near-native. Of course, your pool design matters. Please consider mirrors versus RAID Z2: ZFS performance scales with the number of vdevs, not the number of disks.
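To make the mirrors-versus-RAID Z2 trade-off concrete, here is a rough sketch for a hypothetical pool of six 1 TB disks. The `Layout` class and `summarize` helper are illustrative names, not part of any ZFS tooling, and the capacity figures ignore metadata overhead. The vdev count is only a proxy for how random I/O tends to scale, not a performance prediction.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Layout:
    name: str
    vdevs: int            # number of top-level vdevs in the pool
    disks_per_vdev: int   # disks in each vdev
    redundancy: int       # disks per vdev that add no usable capacity

    def usable_disks(self) -> int:
        return self.vdevs * (self.disks_per_vdev - self.redundancy)


def summarize(layout: Layout, disk_tb: float = 1.0) -> dict:
    """Return the vdev count and raw usable capacity for a layout."""
    return {
        "name": layout.name,
        "vdevs": layout.vdevs,
        "usable_tb": layout.usable_disks() * disk_tb,
    }


# Two ways to arrange the same six disks (assumed 1 TB each).
mirrors = Layout("3 x 2-way mirror", vdevs=3, disks_per_vdev=2, redundancy=1)
raidz2 = Layout("1 x 6-disk RAIDZ2", vdevs=1, disks_per_vdev=6, redundancy=2)

for layout in (mirrors, raidz2):
    print(summarize(layout))
```

With these assumptions, the mirrored layout gives up one disk's worth of capacity compared to RAID Z2 but spreads I/O across three vdevs instead of one.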

My platform is VMware ESXi 5 and my preferred ZFS-capable operating system is NexentaStor Community Edition.

This is my home server. It is an HP ProLiant DL370 G6 running ESXi from an internal SD card. The two mirrored 72GB disks in the center are linked to the internal Smart Array P410 RAID controller and form a VMFS volume. That volume holds a NexentaStor VM. Remember that the ZFS virtual machine needs to live somewhere on stable storage.

There is an LSI 9211-8i SAS controller connected to the drive cage housing six 1TB SATA disks on the right. It is passed through to the NexentaStor virtual machine, allowing Nexenta to see the disks directly and run them as a RAID 1+0 setup (striped mirrors). The disks are el-cheapo Western Digital Green WD10EARS drives aligned properly with a modified zpool binary.
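As a minimal sketch of what a six-disk RAID 1+0 pool looks like on the command line, the helper below assembles a `zpool create` invocation that pairs disks into two-way mirrors. The pool name `tank` and the Solaris-style device names are assumptions for illustration; the script only prints the command and does not run it.

```python
def striped_mirror_command(pool: str, disks: list[str]) -> list[str]:
    """Build a `zpool create` command that pairs disks into 2-way mirrors."""
    if len(disks) < 2 or len(disks) % 2 != 0:
        raise ValueError("need an even number of disks, at least two")
    args = ["zpool", "create", pool]
    # Each consecutive pair of disks becomes one mirror vdev;
    # ZFS stripes writes across all of the mirror vdevs.
    for i in range(0, len(disks), 2):
        args += ["mirror", disks[i], disks[i + 1]]
    return args


# Hypothetical device names; substitute what your system actually reports.
disks = ["c1t0d0", "c1t1d0", "c1t2d0", "c1t3d0", "c1t4d0", "c1t5d0"]
command = striped_mirror_command("tank", disks)
print(" ".join(command))
```

Review the printed command against your own device list before running anything like it, since `zpool create` takes over the disks you name.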

I am not using a ZIL device or any L2ARC cache in this installation.

The VM has 6GB of RAM and 2 vCPUs allocated. In ESXi, if you use PCI-passthrough, a memory reservation for the full amount of the VM's assigned RAM will be created.

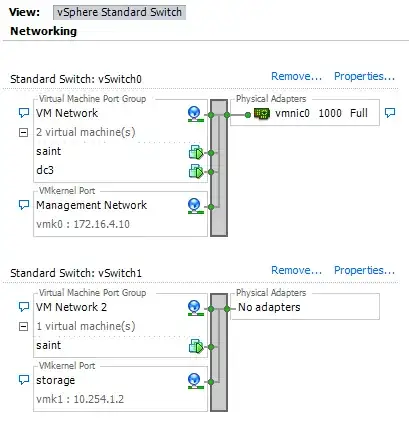

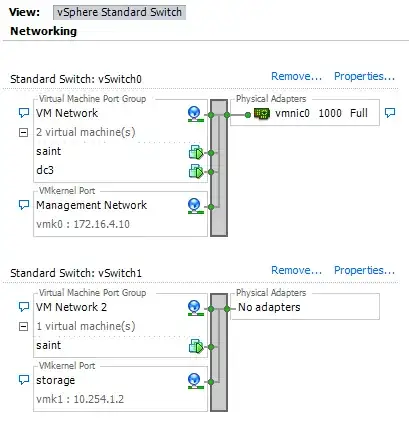

I give the NexentaStor VM two network interfaces. One is for management traffic. The other is part of a separate vSwitch and has a vmkernel interface (without an external uplink). This allows the VM to provide NFS storage mountable by ESXi through a private network. You can easily add an uplink interface to provide access to outside hosts.

Install your new VMs on the ZFS-exported datastore. Be sure to set the "Virtual Machine Startup/Shutdown" parameters in ESXi. You want the storage VM to boot before the guest systems and shut down last.

Here are the bonnie++ and iozone results of a run directly on the NexentaStor VM. ZFS compression is off for the test to show more relatable numbers, but in practice, ZFS default compression (not gzip) should always be enabled.

    # bonnie++ -u root -n 64:100000:16:64
    Version  1.96       ------Sequential Output------ --Sequential Input- --Random-
    Concurrency   1     -Per Chr- --Block-- -Rewrite- -Per Chr- --Block-- --Seeks--
    Machine        Size K/sec %CP K/sec %CP K/sec %CP K/sec %CP K/sec %CP  /sec %CP
    saint           12G   156  98 206597  26 135609  24   410  97 367498  21  1478  17
    Latency               280ms    3177ms    1019ms     163ms     180ms     225ms
    Version  1.96       ------Sequential Create------ --------Random Create--------
    saint               -Create-- --Read--- -Delete-- -Create-- --Read--- -Delete--
    files:max:min        /sec %CP  /sec %CP  /sec %CP  /sec %CP  /sec %CP  /sec %CP
    64:100000:16/64      6585  60 58754 100 32272  79  9827  58 38709 100 27189  80
    Latency              1032ms     469us    1080us     101ms     375us   16108us

    # iozone -t1 -i0 -i1 -i2 -r1m -s12g
    Iozone: Performance Test of File I/O
    Run began: Wed Jun 13 22:36:14 2012
    Record Size 1024 KB
    File size set to 12582912 KB
    Command line used: iozone -t1 -i0 -i1 -i2 -r1m -s12g
    Output is in Kbytes/sec
    Time Resolution = 0.000001 seconds.
    Throughput test with 1 process
    Each process writes a 12582912 Kbyte file in 1024 Kbyte records
    Children see throughput for 1 initial writers = 234459.41 KB/sec
    Children see throughput for 1 rewriters = 235029.34 KB/sec
    Children see throughput for 1 readers = 359297.38 KB/sec
    Children see throughput for 1 re-readers = 359821.19 KB/sec
    Children see throughput for 1 random readers = 57756.71 KB/sec
    Children see throughput for 1 random writers = 232716.19 KB/sec
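The iozone figures are easier to compare once they are converted from KB/sec. The sketch below parses the throughput lines from the run above and divides by 1024, on the assumption that iozone's KB is 1024 bytes (which matches the 12g file being reported as 12582912 KB). The function name `throughput_mib` is just an illustrative choice.

```python
import re

IOZONE_OUTPUT = """\
Children see throughput for 1 initial writers = 234459.41 KB/sec
Children see throughput for 1 rewriters = 235029.34 KB/sec
Children see throughput for 1 readers = 359297.38 KB/sec
Children see throughput for 1 re-readers = 359821.19 KB/sec
Children see throughput for 1 random readers = 57756.71 KB/sec
Children see throughput for 1 random writers = 232716.19 KB/sec
"""

# Captures the test name (e.g. "random readers") and the KB/sec figure.
LINE = re.compile(
    r"Children see throughput for\s+\d+\s+(.+?)\s*=\s*([\d.]+)\s*KB/sec"
)


def throughput_mib(text: str) -> dict[str, float]:
    """Map each iozone test name to its throughput in MiB/s."""
    return {
        match.group(1): float(match.group(2)) / 1024
        for match in LINE.finditer(text)
    }


results = throughput_mib(IOZONE_OUTPUT)
for name, mib in results.items():
    print(f"{name:<16}{mib:8.1f} MiB/s")
```

Read this way, sequential writes land at roughly 229 MiB/s and sequential reads at roughly 351 MiB/s, with random reads far lower at about 56 MiB/s.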

This is a NexentaStor DTrace graph showing the storage VM's IOPS and transfer rates during the test run. 4000 IOPS and 400+ megabytes/second is pretty reasonable for such low-end disks, though keep in mind that the test used a large block size.

Other notes:

- You'll want to test your SSDs to see whether they can be presented directly to a VM or whether DirectPath takes over the entire motherboard controller.

- You don't have much CPU power, so limit the storage unit to 2 vCPUs.

- Don't use RAIDZ1/Z2/Z3 unless you really need the disk space.

- Don't use deduplication. Compression is free and very useful for VMs. Deduplication would require much more RAM + L2ARC in order to be effective.

- Start without the SSDs and add them if necessary. Certain workloads don't hit the ZIL or L2ARC.

- NexentaStor is a complete package. There's a benefit to having a solid management GUI; however, I've heard of success with Napp-It as well.