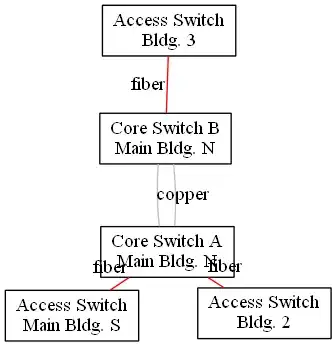

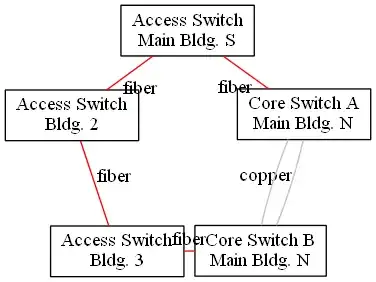

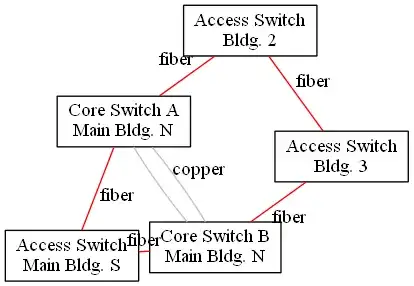

We are planning to purchase new switches for our network. We have three buildings in the same area, all connected by fiber. Our main building is very large and needs a fiber run from its north end to its south end. The core switches will reside at the north end.

My question is this: we will have three fiber connections coming into our core switches, one from each remote building and one from the south side of the plant. Can we use two switches in the north plant, each with two fiber ports, to accommodate this? That would leave one fiber port open. We would be trunking the two core switches together over their Ethernet ports. Will this work, or do we need to trunk over the fiber ports?
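To make the port count concrete, here is a minimal Python sketch of the fiber budget described above. The switch names (`core-1`, `core-2`), the link labels, and which link lands on which switch are all assumptions for illustration; only the counts (two switches, two fiber ports each, three incoming links) come from the post.

```python
# Hypothetical tally of fiber ports on the two north-plant core switches.
FIBER_PORTS_PER_SWITCH = 2  # from the post: each core switch has 2 fiber ports

# Incoming fiber links from the post. The assignment of links to
# switches is an assumption made only for this example.
fiber_links = {
    "core-1": ["remote-building-A", "remote-building-B"],
    "core-2": ["south-plant"],
}


def spare_fiber_ports(links, ports_per_switch):
    """Return the number of unused fiber ports on each switch."""
    spare = {}
    for switch, uplinks in links.items():
        if len(uplinks) > ports_per_switch:
            raise ValueError(f"{switch} has more fiber links than fiber ports")
        spare[switch] = ports_per_switch - len(uplinks)
    return spare


spare = spare_fiber_ports(fiber_links, FIBER_PORTS_PER_SWITCH)
total_spare = sum(spare.values())
print(spare, total_spare)
```

With this assignment the tally leaves a single spare fiber port, so the inter-switch trunk would have to use the Ethernet ports unless that spare port is given up for it.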