I have a large body of static content to deliver via a Linux-based web server. It is a set of over one million small gzip files. 90% of the files are smaller than 1K, and the rest are at most 50K. In the future, this could grow to over 10 million gzip files.

Should I put this content in a file structure or should I consider putting all this content in a database? If it is in a file structure, can I use large directories or should I consider smaller directories?

I was told a file structure would be faster for delivery, but on the other hand, I know that the files will take up a lot of disk space, since the filesystem allocates whole blocks and a block is larger than 1K.
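To put a rough number on the disk-space concern, here is a minimal sketch. It assumes a 4096-byte block size (an assumption, the real value depends on the filesystem and how it was created) and takes the worst case of my size distribution: every small file at 1K and every other file at 50K. The names `allocated_bytes` and `estimate` are just made up for this illustration, and inode and directory overhead are ignored.

```python
BLOCK_SIZE = 4096  # assumed block size in bytes; check the actual filesystem


def allocated_bytes(file_size: int, block_size: int = BLOCK_SIZE) -> int:
    """Space a file occupies once rounded up to whole blocks."""
    blocks = -(-file_size // block_size)  # integer ceiling division
    return blocks * block_size


def estimate(n_files: int, small_fraction: float = 0.9,
             small_size: int = 1024, large_size: int = 50 * 1024):
    """Return (logical_bytes, on_disk_bytes) for a worst-case size mix."""
    n_small = round(n_files * small_fraction)
    n_large = n_files - n_small
    logical = n_small * small_size + n_large * large_size
    on_disk = (n_small * allocated_bytes(small_size)
               + n_large * allocated_bytes(large_size))
    return logical, on_disk


if __name__ == "__main__":
    for n in (1_000_000, 10_000_000):
        logical, on_disk = estimate(n)
        print(f"{n} files: {logical / 1e9:.2f} GB of data, "
              f"{on_disk / 1e9:.2f} GB allocated")
```

Under these assumptions, each file below 1K still costs a full block, so most of the overhead comes from the small files.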

What is the best strategy regarding delivery performance?

UPDATE

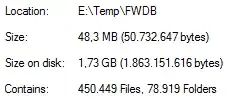

For the record, I performed a test under Windows 7 with half a million files: