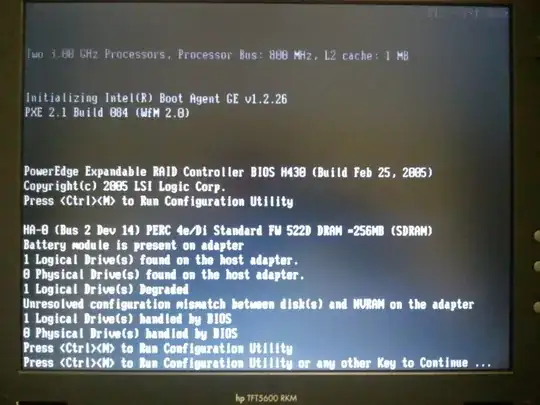

We had an extended power outage (almost four hours); now my Dell PowerEdge 2850 server shows this error from its PERC 4e/Di on boot:

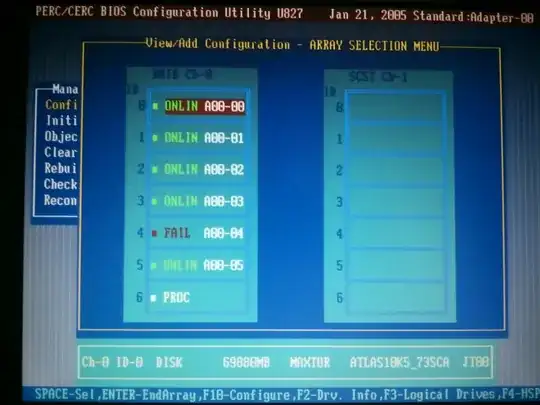

All drives are listed in the PERC configuration menu.

The failed drive shows no sign of failure on its LEDs: the bottom LED appears green and the top LED is unlit. None of the drive LEDs are flashing.

All drives are in one RAID5 array with 6 stripes of 64K in size.
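For background on why a degraded RAID5 array should still be readable: each stripe stores an XOR parity block, so any single missing member can be recomputed from the survivors. The sketch below is a simplified illustration, not how the PERC stores data on disk. It assumes six member drives (reading "6" above as the drive count is an assumption), keeps parity on the last member instead of rotating it as real controllers do, and uses hypothetical helper names (`make_stripe`, `rebuild_missing`).

```python
import os
from functools import reduce

BLOCK_SIZE = 64 * 1024  # 64K stripe unit, matching the array described above
MEMBERS = 6             # assumption: six member drives (5 data blocks + 1 parity per stripe)

def xor_blocks(blocks):
    """XOR a list of equal-length byte blocks together, byte by byte."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))

def make_stripe(data_blocks):
    """Return one simplified RAID5 stripe: the data blocks plus their XOR parity."""
    return list(data_blocks) + [xor_blocks(data_blocks)]

def rebuild_missing(stripe, missing_index):
    """Recompute a missing member's block by XORing every surviving member."""
    survivors = [blk for i, blk in enumerate(stripe) if i != missing_index]
    return xor_blocks(survivors)

# Hypothetical demo: fill a stripe with random data, "lose" member 2, rebuild it.
data = [os.urandom(BLOCK_SIZE) for _ in range(MEMBERS - 1)]
stripe = make_stripe(data)
recovered = rebuild_missing(stripe, missing_index=2)
print(recovered == stripe[2])  # True
```

The same XOR works whichever single member is lost, including the parity member, which is why a RAID5 array tolerates exactly one failed drive; a second loss in the same stripe leaves too many unknowns to solve.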

I do have one or two spare Dell PE2850s available for testing. However, from what I've read, the "Unresolved configuration mismatch..." error would appear on those as well, but perhaps I could activate the good drives anyway.

What if I remove the bad drive and try to boot that way? I may try that, but both the PERC 4e/Di and the Adaptec 2410SA card (activated later in the boot process) report all ports as not functioning.

Here are the specific questions:

- Is it possible to get this (degraded) array running again on this system? How?

- Will it help to configure a new configuration and save it (without initializing)?

- Is it possible to move a degraded array to a new system and power up?

- What if the "bad" disk was removed or replaced? How does that affect the system boot? How does that affect a disk array move?

EDIT: I found this question, which appears to detail how to move drives from one host to another. Is there anything that should be added to the process described there? My case differs in two ways: first, the array appears to be degraded, and second, it is RAID5 rather than RAID1. The first is the bigger question mark; I'd expect a RAID5 array to import just like a RAID1 array.

I also found this question, which talks about "repairing" a failed mirror, but it has no clear answer on how to fix it, and my array is RAID5 anyway: one that hasn't been moved or rearranged.

UPDATE: The replacement system has a PERC 4/DC, whereas the old system has a PERC 4e/Di. I hope the 4/DC will recognize the old (degraded) array and import it cleanly. If that works, I'll even be able to use the old drives (none of which have failed) as a replacement for the failed drive.