How are your files / filegroups set up?

I'll plagiarize myself:

One more thought on I/O: we were careful to put our biggest, most frequently used tables on filegroups with multiple files in them. One performance benefit of this is that SQL Server will issue requests to each file in the filegroup on a separate thread. So if BigOverUsedTable is on FileGroup1, FileGroup1 has four files in it, and your server has 8 cores, it will actually use four cores to run "select big number crunching nasty query from BigOverUsedTable", whereas with a single file it would only use one CPU. We got this idea from this MSDN article:

http://msdn.microsoft.com/en-us/library/ms944351.aspx

From TFA:

"Filegroups use parallel threads to improve data access. When a table is accessed sequentially, the system creates a separate thread for each file in parallel. When the system performs a table scan for a table in a filegroup with four files, it uses four separate threads to read the data in parallel. In general, using multiple files on separate disks improves performance. Too many files in a filegroup can cause too many parallel threads and create bottlenecks."

Because of this advice, we have four files in our filegroup on an 8-core machine, and it's working out well.

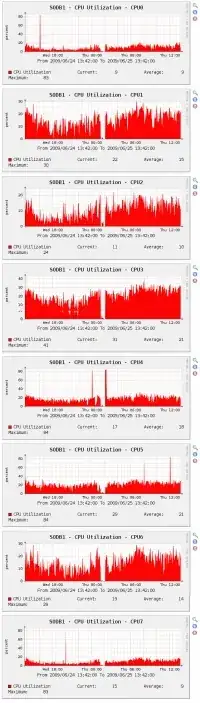

Edit: there's another, possibly better, answer to this question now. The graphs were off in scale: if you look closely, each processor is actually only about 20% loaded, as uzbones points out.

Edit: We can tell that using multi-file filegroups helps because we didn't put all our tables in the four-file filegroup. Big queries against tables on the single-file filegroup use only one CPU, but queries against the table on the four-file filegroup hit four CPUs.
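For reference, a setup like the one described here, with the big table on a four-file filegroup, might be created roughly as follows. This is a T-SQL sketch, not our actual script: the database name `MyDb`, the logical file names, drive letters, sizes, and the table columns are all hypothetical; only `FileGroup1` and `BigOverUsedTable` come from the answer. The separate drive letters stand in for the "multiple files on separate disks" the article recommends.

```sql
-- Hypothetical database name (MyDb), file names, paths and sizes.
ALTER DATABASE MyDb ADD FILEGROUP FileGroup1;
GO

-- Four equally sized files, each on its own (hypothetical) drive.
ALTER DATABASE MyDb
ADD FILE
    (NAME = FG1_File1, FILENAME = 'E:\SQLData\MyDb_FG1_1.ndf', SIZE = 10GB, FILEGROWTH = 1GB),
    (NAME = FG1_File2, FILENAME = 'F:\SQLData\MyDb_FG1_2.ndf', SIZE = 10GB, FILEGROWTH = 1GB),
    (NAME = FG1_File3, FILENAME = 'G:\SQLData\MyDb_FG1_3.ndf', SIZE = 10GB, FILEGROWTH = 1GB),
    (NAME = FG1_File4, FILENAME = 'H:\SQLData\MyDb_FG1_4.ndf', SIZE = 10GB, FILEGROWTH = 1GB)
TO FILEGROUP FileGroup1;
GO

-- Place the heavily used table (and its clustered index) on the multi-file filegroup.
-- Column definitions are hypothetical placeholders.
CREATE TABLE dbo.BigOverUsedTable
(
    Id      INT IDENTITY(1,1) NOT NULL,
    Payload VARCHAR(200) NULL,
    CONSTRAINT PK_BigOverUsedTable PRIMARY KEY CLUSTERED (Id)
) ON FileGroup1;
GO

-- Check which files belong to which filegroup (the log file has no filegroup and is omitted).
SELECT fg.name AS filegroup_name, df.name AS logical_file, df.physical_name
FROM sys.database_files AS df
JOIN sys.filegroups AS fg ON df.data_space_id = fg.data_space_id
ORDER BY fg.name, df.name;
GO
```

Keeping the files the same size lets SQL Server's proportional fill spread the data evenly across them. To move an existing table rather than create a new one, you would rebuild its clustered index on the new filegroup with `CREATE ... INDEX ... WITH (DROP_EXISTING = ON) ON FileGroup1`.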