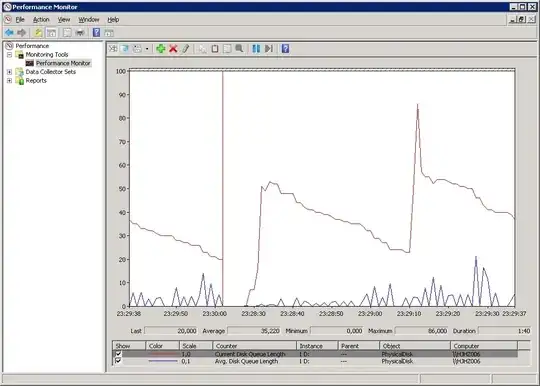

A few days ago, the disk on my server started showing a large queue length:

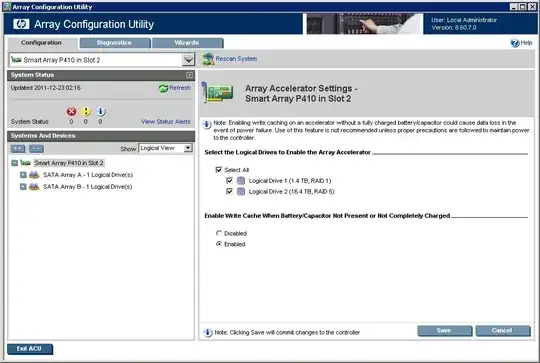

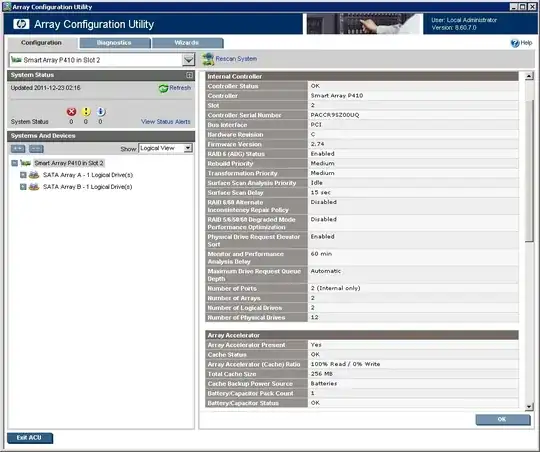

I recently replaced the controller battery because the HP configuration utility reported it as bad, but nothing changed after the replacement. The HP configuration utility now says everything is fine, yet the queue length is still the same.

What can I do to eliminate the problem? Should I replace the controller?

UPDATE 1 (gtapscott's questions):

1) This is a read queue. I added a separate read queue counter, and it matched the overall queue length. The write queue is empty.

2) Avg. disk queue length varies from 0 to several hundred; its average is about 100-200. I'm not sure, but the counter behaves as if there were no controller cache at all.

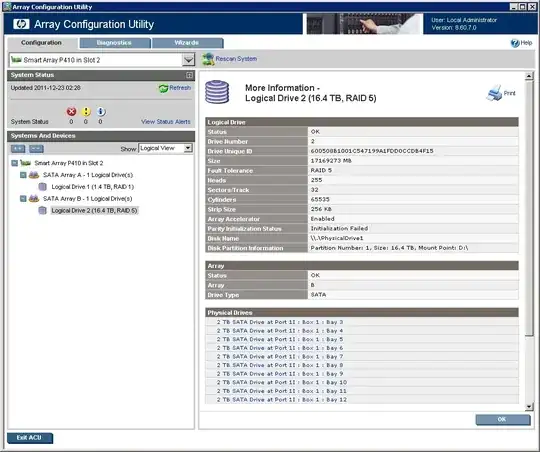

3) There are 10 disks in RAID-5.
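To put these numbers in perspective, a queue length can be turned into an average wait time with Little's law (average queue length = request rate × average time each request spends in the system). The sketch below is only an illustration under stated assumptions: the read IOPS value is a hypothetical placeholder, because the post does not report one, and dividing the queue by the number of disks assumes reads are spread evenly across the 10-disk RAID-5 array.

```python
def average_latency_ms(avg_queue_length: float, iops: float) -> float:
    """Little's law: L = lambda * W, so W = L / lambda (seconds), returned in ms."""
    if iops <= 0:
        raise ValueError("iops must be positive")
    return avg_queue_length / iops * 1000.0


def queue_per_disk(avg_queue_length: float, disks: int) -> float:
    """Rough per-spindle queue, ASSUMING requests are spread evenly over the disks."""
    if disks <= 0:
        raise ValueError("disks must be positive")
    return avg_queue_length / disks


# From the post: average read queue of about 100-200 on 10 disks in RAID-5.
DISKS = 10
# HYPOTHETICAL: the question does not report read IOPS; 500 is a placeholder.
hypothetical_read_iops = 500.0

for q in (100.0, 200.0):
    print(
        f"queue={q:.0f}: ~{queue_per_disk(q, DISKS):.0f} per disk, "
        f"~{average_latency_ms(q, hypothetical_read_iops):.0f} ms per read"
    )
```

With these assumed numbers, a sustained queue of 100-200 would mean each read waits a few hundred milliseconds, far longer than a healthy disk normally takes to serve a read. A single slow member of a RAID-5 set can hold up the reads that touch it in this way.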

UPDATE 2 (ewwhite's post):

Yes, I rebooted the server after the battery change.

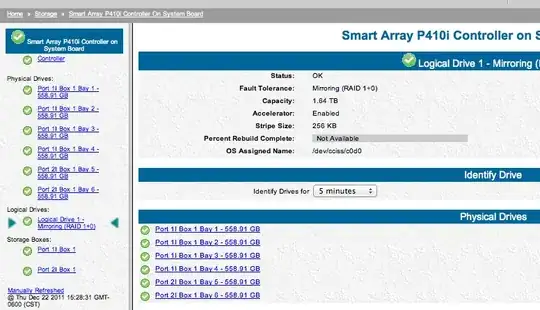

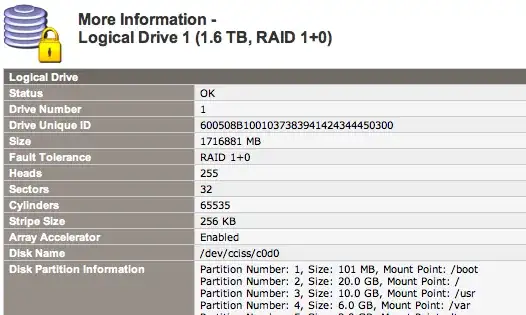

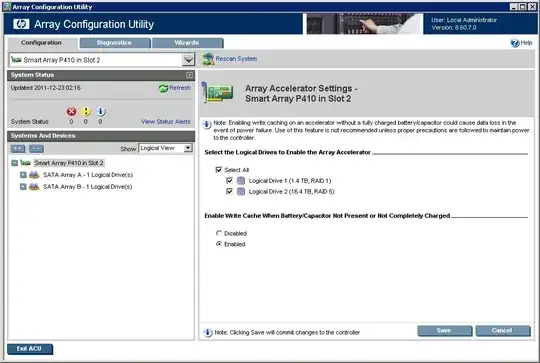

I have slightly difference interface, here it is:

So cache is enabled on the RAID massive

UPDATE 3:

The problem was one of the RAID disks, as ewwhite suggested.