From what I'm reading here, most people are going for massive overkill. You have 5 servers and 30 workstations, so this sounds like a small company, and I very much doubt the boss/owner will spring for things like a biometric scanner, pass card reader, or video system, unless you already have them and they'd be a cheap add-on for the server room.

I set up one almost the same as yours: 20 workstations and 8 servers.

Here's what worked well for me and was good value on the typically limited budget of a small company.

- A phone, or at least a phone jack you can plug a phone into, in case you have to call tech support while standing in front of the physical server.

- A/C. Even with only 8 servers, my room sat at about 31 °C.

Since the room was in the center of the building and new ductwork was out of the question, we got a portable room unit and exhausted it into the office, on the side of the wall closest to the cold-air return for the building's A/C. This worked well and dropped the temperature to about 23 °C. It takes up a lot of space, but it's really effective.
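If you want a rough number to shop with (portable units are usually rated in BTU/hr), nearly every watt your servers draw ends up as heat, and 1 W is about 3.412 BTU/hr. Here's a minimal Python sketch under that assumption; the 350 W per server, the 200 W for other gear and the 25% headroom are hypothetical figures, so plug in your own measured draw.

```python
# Rough cooling-load estimate for a small server room.
# Assumption: essentially all electrical power drawn by the gear becomes heat.
WATTS_TO_BTU_PER_HR = 3.412  # 1 W is about 3.412 BTU/hr
BTU_PER_TON = 12_000         # 1 ton of refrigeration = 12,000 BTU/hr


def cooling_load_btu_hr(device_watts, extra_watts=0.0, margin=0.25):
    """Return the estimated BTU/hr of cooling needed.

    device_watts: list of per-device power draws in watts
    extra_watts:  lights, switches, UPS losses, etc.
    margin:       headroom fraction for future growth
    """
    total_w = sum(device_watts) + extra_watts
    return total_w * WATTS_TO_BTU_PER_HR * (1 + margin)


if __name__ == "__main__":
    servers = [350] * 8  # hypothetical: 8 servers at ~350 W each
    btu = cooling_load_btu_hr(servers, extra_watts=200)
    print(f"{btu:,.0f} BTU/hr (about {btu / BTU_PER_TON:.2f} tons)")
```

With those made-up numbers it works out to roughly 12,800 BTU/hr; measuring actual draw (a UPS load readout or a plug-in meter) will give you a much better figure than nameplate ratings.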

- Don't bother with a rack-mount monitor/KVM; I can think of better things to spend $1k on.

- Get a monitor and a 10 ft VGA cable, and put a table or wall-mounted shelf next to the servers. Any modern rack-mounted server has a front-mounted VGA port.

- Get a wireless keyboard and mouse that share the same dongle. Just move the VGA cable and the dongle to whichever server you need to access. Since you'll be accessing everything remotely 90% of the time, it's not a big deal to do it this way.

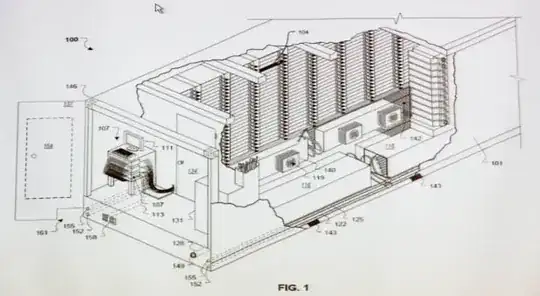

- If you can, get a rack and use rack-mounted servers, BUT make sure you get rails that let the server slide out when you need to access the hardware, rather than ones that have to be unbolted.

- If you can't get a rack, go to Home Depot and get heavy-duty freestanding utility shelves; these work just as well for desktop units.

- A lock. A key or keypad lock is fine; you'll have to give a key and the combo to your boss or the owner anyway. Make sure the servers log who logs in to them, and then it matters less who is in the room.

- If the phone gear is in there too, use the plywood-on-the-wall idea.

- Put the phones on their own power source (UPS).

- Get an expandable UPS for the servers.

- Get a UPS with load banks you can switch off remotely, in case you need to kill a server remotely. Tripp Lite has these, and they saved me a few times when I had a wonky server that would lock up and needed its power cut. Make sure you set the BIOS on all servers to power on after a power outage. The UPS will only have a few outlets, so you have to put your critical servers on it; for me that was the DC/GC and email. This let me reboot those if they crashed.

- Some shelves for parts and the other "stuff" the boss will require you to keep in there (lol, unless you are the boss).

- Dedicated power for the A/C

- Dedicated power for the Phones

- Dedicated power for the servers
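Since the UPS only has a few outlets, it's worth checking that your critical boxes actually fit on it before you buy. A minimal Python sketch, assuming one outlet per server and the common rule of thumb of loading a UPS to no more than 80% of its watt rating; the server wattages and the 1500 W / 6-outlet UPS are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Load:
    name: str
    watts: float  # measured or nameplate draw


def check_ups(loads, ups_watts, ups_outlets, max_fraction=0.8):
    """Return (total_watts, watt_limit, fits) for a planned UPS load.

    Assumes each load uses one outlet and that the UPS should be kept at or
    below max_fraction of its watt rating (a rule of thumb, not a spec).
    """
    total = sum(load.watts for load in loads)
    limit = ups_watts * max_fraction
    fits = total <= limit and len(loads) <= ups_outlets
    return total, limit, fits


if __name__ == "__main__":
    # Hypothetical draws for the two critical servers mentioned above.
    critical = [Load("dc-gc", 300), Load("email", 400)]
    total, limit, fits = check_ups(critical, ups_watts=1500, ups_outlets=6)
    print(f"{total:.0f} W of {limit:.0f} W allowed -> fits: {fits}")
```

Keeping headroom below the rating also buys you more runtime, which matters if you want the servers to ride out short outages.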

I had a total of six 15 A circuits in mine, plus the lights.
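To work out how many circuits you need, a common approach is to budget each breaker at 80% of its rating for continuous loads like servers. Assuming 120 V North American circuits, a 15 A circuit is 1,800 W, or about 1,440 W usable. The Python sketch below uses a simple first-fit-decreasing heuristic to spread loads across circuits; the 350 W per server is a hypothetical figure, and the A/C and phones are left out since they get their own dedicated circuits.

```python
VOLTS = 120                # assumption: North American 120 V branch circuits
BREAKER_AMPS = 15
CONTINUOUS_FRACTION = 0.8  # keep continuous loads at or below 80% of the breaker


def usable_watts(volts=VOLTS, amps=BREAKER_AMPS, fraction=CONTINUOUS_FRACTION):
    """Watts you can plan to draw continuously on one circuit."""
    return volts * amps * fraction


def assign_to_circuits(loads, capacity_w):
    """First-fit decreasing: place each load on the first circuit with room.

    loads: dict of device name -> watts. Returns a list of (used_watts, names).
    """
    circuits = []  # each entry is [used_watts, [device names]]
    for name, watts in sorted(loads.items(), key=lambda kv: kv[1], reverse=True):
        if watts > capacity_w:
            raise ValueError(f"{name} ({watts} W) exceeds one circuit's usable capacity")
        for circuit in circuits:
            if circuit[0] + watts <= capacity_w:
                circuit[0] += watts
                circuit[1].append(name)
                break
        else:
            # No existing circuit has room, so open a new one.
            circuits.append([watts, [name]])
    return [(used, names) for used, names in circuits]


if __name__ == "__main__":
    cap = usable_watts()
    servers = {f"server{i}": 350 for i in range(1, 9)}  # hypothetical draw
    for n, (used, names) in enumerate(assign_to_circuits(servers, cap), start=1):
        print(f"circuit {n}: {used} W of {cap:.0f} W -> {', '.join(names)}")
```

Splitting servers across more than one circuit also means a single tripped breaker doesn't take everything down at once.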

- Make all patch cables custom and keep them as short as possible. This keeps things organized so you don't have cables dangling everywhere.

- If you can't put the room on the second floor, put the servers at the top of the rack and work your way down, to keep them clear of any flooding. Mine was on the second floor, so it wasn't an issue for me.

That's what I had in mine. The network wiring, etc. was all labeled and well organized as well; how you set that up depends on where it comes into the room.

The biggest things: keep it organized, and make sure you have room to expand for future growth.