We have a new VNX5300 waiting to be configured, and I need to plan out the network infrastructure before the EMC tech arrives. It has 4x 1 Gbit iSCSI ports per SP (8 ports in total), and I'd like to get as much performance out of it as possible until we move to 10 Gbit iSCSI.

From what I can read in the docs, the recommendation is to use only two ports per SP, with one active and one passive. Why is this? It seems kind of pointless to have quad-port I/O modules and then recommend using no more than two of the ports.

Also - I'm a bit unsure about the zoning. The best practices guide states that each port on an SP should be placed on its own logical network, separate from the other ports on that SP. Does this mean that I have to create 4 logical networks to be able to use all 8 ports?
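To make my reading concrete, here is a minimal Python sketch of the layout I think the guide is describing: one logical network per port index, with the same-numbered port on each SP (A0 and B0, A1 and B1, and so on) sharing it. The `10.10.0.0/22` block, the /24 subnet size, and the function name are placeholders I made up for illustration; nothing here comes from the EMC documentation.

```python
import ipaddress

# Hypothetical address block; the real ranges are a site decision.
ISCSI_BLOCK = ipaddress.ip_network("10.10.0.0/22")
PORTS_PER_SP = 4
SPS = ("A", "B")


def plan_iscsi_networks(block, ports_per_sp, sps):
    """Map each SP port (e.g. 'A0') to a subnet, one subnet per port index."""
    subnets = list(block.subnets(new_prefix=24))
    if len(subnets) < ports_per_sp:
        raise ValueError("address block too small for one /24 per port index")
    plan = {}
    for index in range(ports_per_sp):
        for sp in sps:
            # Assumption: A<n> and B<n> share a logical network.
            plan[f"{sp}{index}"] = subnets[index]
    return plan


plan = plan_iscsi_networks(ISCSI_BLOCK, PORTS_PER_SP, SPS)
for port, subnet in sorted(plan.items()):
    print(port, subnet)
```

If that reading is right, using all 8 ports needs 4 logical networks, which is exactly what I'm asking about.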

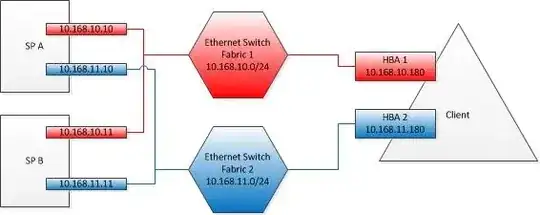

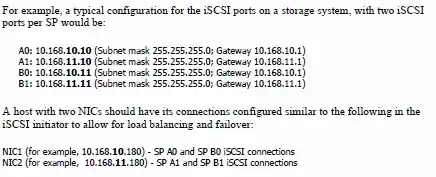

It also gives the following example:

Does this mean that A0 and B0 should sit on the same physical switch as well? Won't this make all traffic go through one switch (if both A1 and B1 are passive)?

Edit: Another brain puzzle

I don't get it - each host (as in server) should not have more iSCSI bandwidth available than the storage processor. Why on earth does this matter? If serverA has 1 Gbit and serverB has 100 Mbit, then the resulting bandwidth between them is 100 Mbit. How can this result in some kind of oversubscription?
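Spelled out as arithmetic, my reasoning looks like the Python sketch below. The first function is just the "slowest end wins" argument from above. The second is only my guess at what the guide might mean by oversubscription (total host-side bandwidth divided by total SP-side bandwidth); the function names and the 4-link versus 2-link figures are hypothetical.

```python
def end_to_end_mbit(host_mbit, sp_mbit):
    """A single path is limited by the slower of its two ends."""
    return min(host_mbit, sp_mbit)


def oversubscription_ratio(host_links_mbit, sp_links_mbit):
    """Total host-side bandwidth divided by total SP-side bandwidth."""
    return sum(host_links_mbit) / sum(sp_links_mbit)


# The example from the text: a 1 Gbit end talking to a 100 Mbit end.
print(end_to_end_mbit(1000, 100))

# Hypothetical: a host with four 1 Gbit links against an SP using two.
print(oversubscription_ratio([1000] * 4, [1000] * 2))
```

A ratio above 1 would mean the host can ask for more than the SP ports can deliver, but I still don't see why that is a problem rather than just a bottleneck.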

Edit4: Wait, what. Active and passive ports? The VNX runs in an ALUA configuration with asymmetrical active/active. There shouldn't be any passive ports, only preferred ones.