I have an interesting problem: our load balancer (a FiberLogic OptiQroute 2140) seems to consistently favor one WAN connection over the other, and it's not the one I want it to favor.

We have two WAN connections from different service providers. One is an SDSL connection at 3Mbps (WAN1) and the other is provided by a cable modem (WAN2) at 4Mbps/1Mbps. Our publicly available services all sit on a /24 segment on the WAN1 connection. This includes our mail gateways, DNS, access to Exchange's OWA, various sundry websites and a few well-used VPN services. We get quite a bit of inbound traffic on this side of the load balancer.

The other side (WAN2) is largely unused for inbound traffic. Accordingly, the load balancer is configured to weight this side more heavily: we want to push as much outgoing traffic out the WAN2 connection as possible. In practice this does not occur. What happens is that a combination of inbound traffic (the SMTP gateway sending email, contractors using the VPN, remote users accessing OWA, etc.) consumes about 1/3 to 1/2 of the available bandwidth on WAN1. Then 300 or so users all hop on Facebook. Some of this traffic heads out WAN2, but enough of it is assigned to the WAN1 connection that it saturates the link, and then everything runs slow for everybody. Really slow. Meanwhile there's still 2Mbps worth of bandwidth sitting unused on the other connection. This happens frequently enough to be more than a nuisance.

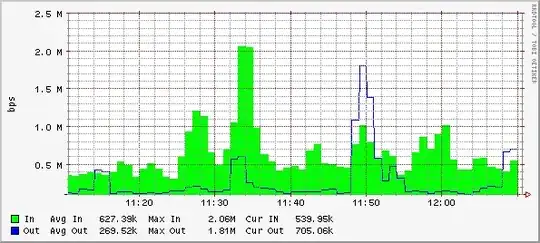

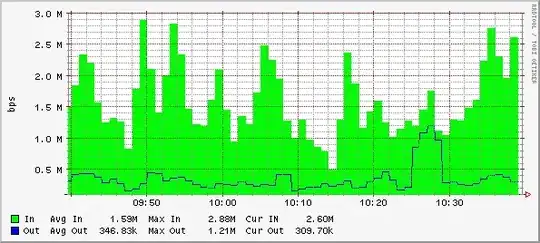

WAN1:

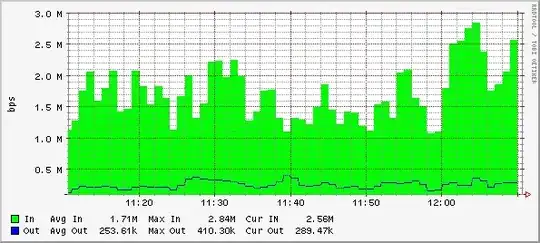

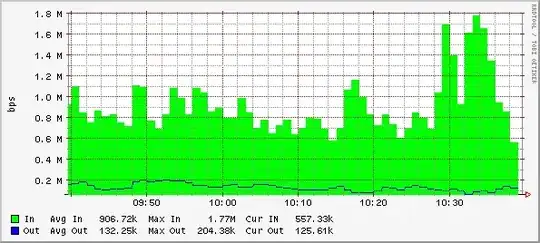

WAN2:

As you can see from the RRDtool graphs, while WAN1 hovers around 2.5-3Mbps during peak usage, WAN2 always seems to stay in the 1-2Mbps range. The Priority and Weighted values have been set to the maximum value (65535) for WAN2 and to 10 for WAN1. The manual has this to say about these configuration options:

Priority: Enter a priority value for the WAN port. The range is between 0 and 65535.

Weighted: Enter a weight value for the WAN port. The range is between 0 and 65535.

The higher the weight placed on an interface, the more opportunity for this interface to transmit data.

For example, when the device makes use of 2 WAN ports. WAN 1 has a weighted value of 1 and WAN 2 has a weighted value of 3.

The transmission will be like the following:

At t=0, WAN 1 transmits

At t=1, WAN 2 transmits

At t=2, WAN 2 transmits

At t=3, WAN 2 transmits

At t=4, WAN 1 transmits
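The schedule in the manual's example reads like a plain weighted round robin. Here is a minimal Python sketch of that reading; `weighted_round_robin` is a hypothetical name for illustration, and the idea that each port gets `weight` consecutive slots per cycle is an assumption based only on the example above, not on anything the manual states about the device's internals:

```python
from itertools import cycle, islice

def weighted_round_robin(weights):
    """Return an endless iterator of WAN names, each repeated `weight` times per cycle.

    Assumption: ports take turns in the order given, and a port with
    weight N gets N consecutive transmit slots before the next port.
    """
    one_cycle = [name for name, weight in weights.items() for _ in range(weight)]
    return cycle(one_cycle)

# Weights from the manual's example: WAN 1 = 1, WAN 2 = 3.
schedule = list(islice(weighted_round_robin({"WAN 1": 1, "WAN 2": 3}), 5))
for t, wan in enumerate(schedule):
    print(f"At t={t}, {wan} transmits")
```

With weights of 1 and 3 this reproduces the five time slots listed in the manual. Whether a "slot" on the real device is a packet, a connection or something else is not clear from the text.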

So what am I doing wrong here? In theory, with the Priority and Weighted values for WAN2 set so high, a packet should go out WAN2 nine times out of ten unless it is already part of an established flow started by inbound traffic on WAN1. In fact, this is exactly the behavior we want from the OptiQroute: if a packet is not already part of an established inbound flow, send it out WAN2. WAN1 should essentially be used only for serving our /24 public network segment.
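As a rough sanity check on that expectation, here is a back-of-the-envelope calculation in Python. It assumes the device splits new outbound traffic in proportion to the Weighted values, as the manual's example suggests; that is an assumption, and `expected_shares` is a hypothetical helper, not anything exposed by the OptiQroute:

```python
def expected_shares(weights):
    """Fraction of transmit slots each WAN would get under proportional weighting."""
    total = sum(weights.values())
    return {name: weight / total for name, weight in weights.items()}

# Weighted values currently configured: 10 for WAN1, 65535 for WAN2.
shares = expected_shares({"WAN1": 10, "WAN2": 65535})
for name, share in shares.items():
    print(f"{name}: {share:.4%}")
```

Under that model WAN1 would see only about 0.015% of new outbound traffic, far less than the graphs show, so either the weights are not being applied the way the manual describes or most of the WAN1 traffic belongs to flows that are pinned to that link.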

How can I configure the OptiQroute to get this behavior?