On a network interface, speeds are given in terms of data over time, specifically bits per second. However, in the very fast world of computing, a second is a really long time.

So, for example, assuming the rate scales down linearly, a 1 Gbit/s interface would carry 500 Mbit per half second, 250 Mbit per quarter second, and so on.
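To make the linear assumption concrete, here is a minimal sketch of the arithmetic. The function name `budget_bits` is my own and purely illustrative; it only encodes the assumption that the per-second rate divides evenly into smaller intervals, which is exactly what I am unsure about.

```python
def budget_bits(rate_bps: int, interval_us: int) -> int:
    """Bits an interface could carry in interval_us microseconds,
    ASSUMING the per-second rate scales down purely linearly."""
    return rate_bps * interval_us // 1_000_000


GIGABIT = 1_000_000_000  # 1 Gbit/s

# Print the budget for a few interval lengths.
for label, interval_us in [
    ("1 s", 1_000_000),
    ("1/2 s", 500_000),
    ("1/4 s", 250_000),
    ("1 ms", 1_000),
]:
    print(f"{label:>6}: {budget_bits(GIGABIT, interval_us):>13,} bits")
```

Under that assumption, the 1 ms budget comes out to 1,000,000 bits, which is the 1 Mbit/ms figure I use further down.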

I imagine that below some unit of time this is no longer linear. Perhaps the limit is set by Ethernet frequencies, system clock speeds, interrupt timers, etc. I am sure this varies depending on the system, but does anyone have more information or whitepapers on this?

One of the main reasons I am curious is to understand output drops on interfaces. Even if the average rate over a second is much lower than the interface can handle, perhaps there are spikes that cause drops lasting only a few milliseconds. Perhaps the various forms of coalescing would hide this effect, or perhaps increase it on the receiving interface? Do queues make a difference here?
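Here is a toy model of what I have in mind, not a description of how any real NIC or switch behaves. It assumes an output queue that drains at a fixed 1 Mbit per millisecond and has a fixed size limit. The function name, the queue limit, and the traffic trace are all hypothetical values I made up for illustration.

```python
def simulate_queue(arrivals_bits, drain_bits_per_ms, queue_limit_bits):
    """Toy output queue stepped in 1 ms slots.

    Returns (dropped_bits, peak_backlog_bits).
    """
    backlog = 0
    dropped = 0
    peak = 0
    for arrived in arrivals_bits:
        backlog += arrived
        # Transmit at most one millisecond's worth of bits.
        backlog -= min(backlog, drain_bits_per_ms)
        # Whatever does not fit in the queue is counted as dropped.
        if backlog > queue_limit_bits:
            dropped += backlog - queue_limit_bits
            backlog = queue_limit_bits
        peak = max(peak, backlog)
    return dropped, peak


# Hypothetical trace: one second of 1 ms slots, idle except for a
# 5 ms burst arriving at 3 Mbit per millisecond.
trace = [0] * 1000
for ms in range(100, 105):
    trace[ms] = 3_000_000

avg_mbps = sum(trace) / 1_000_000  # Mbit carried over the whole second
dropped, peak = simulate_queue(
    trace, drain_bits_per_ms=1_000_000, queue_limit_bits=4_000_000
)
print(f"average: {avg_mbps} Mbit/s, dropped: {dropped} bits, peak backlog: {peak} bits")
```

In this made-up trace the one-second average is only 15 Mbit/s on a 1 Gbit/s link, yet the model still drops traffic during the burst. Whether real hardware behaves anything like this is what I am asking.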

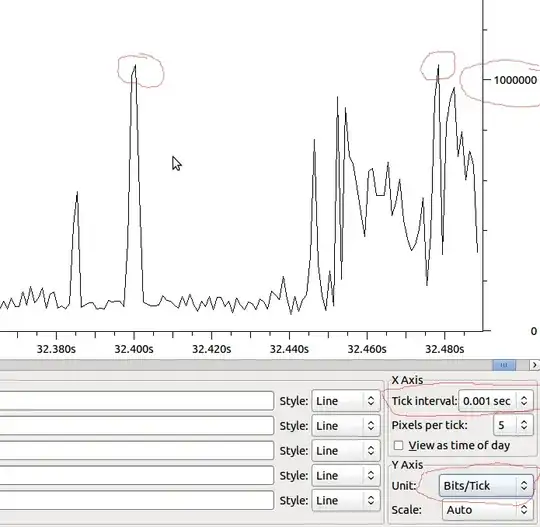

Example:

So if this is linear down to the millisecond, we would have 1 Mbit/ms. If Wireshark isn't distorting what I see, should I expect drops whenever a spike exceeds 1 Mbit within a single millisecond?
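To check for such spikes outside of the graph, this is a rough sketch of how I would bin a capture into 1 ms windows and flag those above the 1 Mbit budget. It assumes the capture has already been exported as `(timestamp_us, frame_bytes)` pairs; the function names and the sample data are hypothetical and do not come from my actual capture.

```python
from collections import defaultdict


def bits_per_ms(packets):
    """packets: iterable of (timestamp_us, frame_bytes) pairs.

    Returns a dict mapping millisecond index -> total bits seen in that window.
    """
    bins = defaultdict(int)
    for timestamp_us, frame_bytes in packets:
        bins[timestamp_us // 1000] += frame_bytes * 8
    return dict(bins)


def windows_over_budget(packets, budget_bits_per_ms=1_000_000):
    """Sorted millisecond indices whose traffic exceeds the assumed linear budget."""
    return sorted(
        ms for ms, bits in bits_per_ms(packets).items() if bits > budget_bits_per_ms
    )


# Made-up sample: 100 frames of 1500 bytes packed into millisecond 2,
# then 10 frames of 1500 bytes spread across millisecond 5.
sample = [(2000 + i * 10, 1500) for i in range(100)]
sample += [(5000 + i * 100, 1500) for i in range(10)]

print(windows_over_budget(sample))
```

With this sample, millisecond 2 carries 1,200,000 bits and is flagged, while millisecond 5 carries 120,000 bits and is not. This only tells me what the capture recorded per window, not what the interface actually put on the wire.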