I have an HP ProLiant DL380 G7 server running as a NexentaStor storage unit. The server has 36GB of RAM, two LSI 9211-8i SAS controllers (no SAS expanders), two SAS system drives, 12 SAS data drives, a hot-spare disk, an Intel X25-M SSD as the L2ARC cache and a DDRdrive PCI ZIL accelerator. This system serves NFS to multiple VMware hosts. I also have about 90-100GB of deduplicated data on the array.

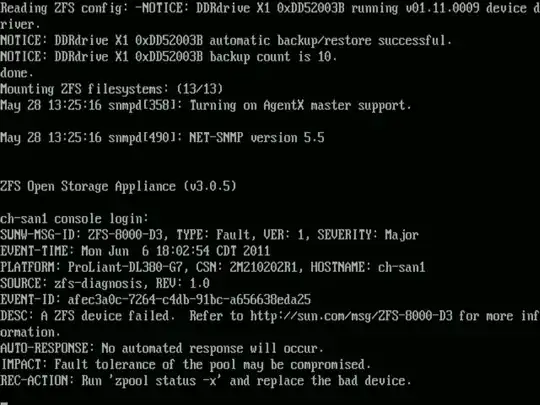

I've had two incidents where performance suddenly tanked. Each time, the VM guests and the NexentaStor SSH/web consoles became inaccessible, and only a full reboot of the array restored functionality. In both cases, the Intel X25-M L2ARC SSD had failed or been "offlined". NexentaStor did not alert me to the cache failure. The generic ZFS FMA alert was visible on the (unresponsive) console screen, however.

The `zpool status` output showed:

```
  pool: vol1
 state: ONLINE
  scan: scrub repaired 0 in 0h57m with 0 errors on Sat May 21 05:57:27 2011
config:

        NAME                        STATE     READ WRITE CKSUM
        vol1                        ONLINE       0     0     0
          mirror-0                  ONLINE       0     0     0
            c8t5000C50031B94409d0   ONLINE       0     0     0
            c9t5000C50031BBFE25d0   ONLINE       0     0     0
          mirror-1                  ONLINE       0     0     0
            c10t5000C50031D158FDd0  ONLINE       0     0     0
            c11t5000C5002C823045d0  ONLINE       0     0     0
          mirror-2                  ONLINE       0     0     0
            c12t5000C50031D91AD1d0  ONLINE       0     0     0
            c2t5000C50031D911B9d0   ONLINE       0     0     0
          mirror-3                  ONLINE       0     0     0
            c13t5000C50031BC293Dd0  ONLINE       0     0     0
            c14t5000C50031BD208Dd0  ONLINE       0     0     0
          mirror-4                  ONLINE       0     0     0
            c15t5000C50031BBF6F5d0  ONLINE       0     0     0
            c16t5000C50031D8CFADd0  ONLINE       0     0     0
          mirror-5                  ONLINE       0     0     0
            c17t5000C50031BC0E01d0  ONLINE       0     0     0
            c18t5000C5002C7CCE41d0  ONLINE       0     0     0
        logs
          c19t0d0                   ONLINE       0     0     0
        cache
          c6t5001517959467B45d0     FAULTED      2   542     0  too many errors
        spares
          c7t5000C50031CB43D9d0     AVAIL

errors: No known data errors
```

This did not trigger any alerts from within NexentaStor.
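Because no alert was raised, one stop-gap would be to check the `zpool status` text directly, for example from a cron job. Below is a minimal Python 3.7+ sketch. It assumes the output layout shown above. `find_unhealthy_devices`, `check_pool`, `HEALTHY_STATES` and `DEVICE_LINE` are hypothetical names and are not part of NexentaStor or ZFS. The sketch flags any device in the `config:` section whose state is not ONLINE/AVAIL, or whose READ/WRITE/CKSUM counters are non-zero.

```python
import re
import subprocess

# Hypothetical policy: ONLINE for active vdevs, AVAIL for idle hot spares.
HEALTHY_STATES = {"ONLINE", "AVAIL"}

# One device line from the "config:" section: name, state, optional
# READ/WRITE/CKSUM counters (zpool may abbreviate large counts, e.g. "1.2K"),
# and any trailing message such as "too many errors".
_COUNT = r"[\d.]+[KMGT]?"
DEVICE_LINE = re.compile(
    r"^\s*(?P<name>\S+)\s+(?P<state>[A-Z]+)"
    rf"(?:\s+(?P<read>{_COUNT})\s+(?P<write>{_COUNT})\s+(?P<cksum>{_COUNT}))?"
    r"(?:\s+(?P<msg>.*))?$"
)


def find_unhealthy_devices(status_text):
    """Return (name, state, detail) tuples for config entries that look unhealthy."""
    problems = []
    in_config = False
    for line in status_text.splitlines():
        stripped = line.strip()
        if stripped.startswith("config:"):
            in_config = True
            continue
        if stripped.startswith("errors:"):
            in_config = False
            continue
        # Skip everything outside the config section, blank lines and the column header.
        if not in_config or not stripped or stripped.startswith("NAME"):
            continue
        match = DEVICE_LINE.match(line)
        if match is None:
            continue  # section labels such as "logs", "cache" and "spares"
        read, write, cksum = (match.group(k) or "0" for k in ("read", "write", "cksum"))
        has_errors = any(c != "0" for c in (read, write, cksum))
        if match.group("state") not in HEALTHY_STATES or has_errors:
            msg = match.group("msg") or ""
            detail = f"read={read} write={write} cksum={cksum} {msg}".strip()
            problems.append((match.group("name"), match.group("state"), detail))
    return problems


def check_pool(pool):
    """Run `zpool status <pool>` and return any problem entries (needs the zpool CLI)."""
    result = subprocess.run(
        ["zpool", "status", pool], capture_output=True, text=True, check=True
    )
    return find_unhealthy_devices(result.stdout)
```

A cron job could call `check_pool("vol1")` periodically and send mail whenever the returned list is non-empty. Given the status output above, `find_unhealthy_devices` returns a single entry. That entry is the cache device `c6t5001517959467B45d0` in state `FAULTED`, with detail `read=2 write=542 cksum=0 too many errors`.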

I was under the impression that an L2ARC device failure would not affect the system, but in this case it was clearly the culprit. I've never seen any recommendation to mirror (RAID) L2ARC devices. Physically removing the bad SSD from the server got me back up and running. I'm still concerned about the impact of this device failure, though, and about the lack of notification from NexentaStor.

Edit: What's the current best-choice SSD for L2ARC cache applications these days?