I didn't understand this the first time I read the introduction at https://www.nginx.com/blog/rate-limiting-nginx/.

Now I'm sure I understand it, and I think my answer is the best so far. :)

Suppose a limit of 10r/s is set, the server's maximum capacity is, e.g., 10000r/s (that is, 10r/ms), and there is only one client at the moment.

So here's the main difference between 10r/s per IP with burst=40 nodelay and 10r/s per IP with burst=40.

As https://www.nginx.com/blog/rate-limiting-nginx/ documents (I strongly recommend reading the article first, except for the Two-Stage Rate Limiting section), nodelay fixes one problem. Which one?

> In our example, the 20th packet in the queue waits 2 seconds to be forwarded, at which point a response to it might no longer be useful to the client.

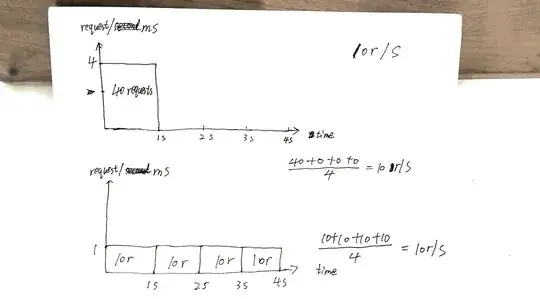

Check the diagram I drew: with nodelay, the 40th request gets its response at 1s, while without nodelay the 40th request gets its response at 4s.

nodelay makes the best use of the server's capacity: responses are sent back as quickly as possible while the x r/s limit is still enforced for each client/IP.
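To make the timing difference concrete, here is a minimal Python sketch of the leaky bucket for a single client. It is a simplified model written for this answer, not nginx's source: `LeakyBucket`, `offer` and `run` are hypothetical names, timestamps are in milliseconds (the article notes that nginx tracks requests at millisecond granularity), and it assumes all requests arrive at the same instant with rate=10r/s and burst=40. The server's own capacity is ignored.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class LeakyBucket:
    """Simplified per-client model of nginx's limit_req leaky bucket (times in ms)."""
    rate: float                    # requests per second, e.g. 10 for rate=10r/s
    burst: int = 0                 # how many excess requests may sit in the bucket
    nodelay: bool = False
    excess: float = 0.0            # excess requests currently in the bucket
    last: Optional[float] = None   # arrival time of the last accepted request

    def offer(self, t: float) -> Optional[float]:
        """Return when the request is forwarded upstream (ms), or None if rejected."""
        if self.last is None:
            excess = 0.0
        else:
            drained = self.rate * (t - self.last) / 1000  # leaks at `rate` per second
            excess = max(0.0, self.excess - drained + 1)
        if excess > self.burst:
            return None  # bucket full: rejected (503 by default)
        self.excess, self.last = excess, t
        if self.nodelay:
            return t  # forwarded at once, but the slot still drains at `rate`
        return t + excess * 1000 / self.rate  # wait until the queue ahead has drained


def run(nodelay: bool, n: int = 41) -> List[Optional[float]]:
    """Hypothetical scenario: n requests from one client arrive together at t=0 ms."""
    bucket = LeakyBucket(rate=10, burst=40, nodelay=nodelay)
    return [bucket.offer(0.0) for _ in range(n)]


delayed = run(nodelay=False)
immediate = run(nodelay=True)
print(delayed[20], delayed[40])      # 2000.0 4000.0
print(immediate[20], immediate[40])  # 0.0 0.0
print(run(nodelay=True, n=42)[41])   # None
```

In this model the 21st request (the 20th in the queue) waits 2 seconds without nodelay, which matches the article's quote. With nodelay it is forwarded at once, yet the bucket still fills up, so a 42nd simultaneous request is rejected in both modes.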

But there is also a cost.

Suppose several clients are queuing on the server, say clients A, B and C.

Without nodelay, the requests are served in an order similar to ABCABCABC.

With nodelay, the order is more likely to be AAABBBCCC.
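The ordering effect can be sketched the same way. This is again a simplified model with hypothetical names (`Bucket`, `backend_order`), and it assumes one specific traffic pattern: A, B and C each fire 3 requests at once, 1 ms apart, under 10r/s with burst=40. It only shows the order in which nginx would forward requests upstream, not how the backend itself schedules them.

```python
from typing import Dict, List, Optional, Tuple


class Bucket:
    """Simplified nginx limit_req bucket for one client (times in ms)."""

    def __init__(self, rate: float, burst: int, nodelay: bool) -> None:
        self.rate, self.burst, self.nodelay = rate, burst, nodelay
        self.excess = 0.0
        self.last: Optional[float] = None

    def offer(self, t: float) -> Optional[float]:
        """Return the forwarding time (ms), or None if the request is rejected."""
        excess = 0.0 if self.last is None else max(
            0.0, self.excess - self.rate * (t - self.last) / 1000 + 1)
        if excess > self.burst:
            return None  # bucket full: rejected
        self.excess, self.last = excess, t
        return t if self.nodelay else t + excess * 1000 / self.rate


def backend_order(nodelay: bool) -> str:
    # Hypothetical traffic: A fires 3 requests at t=0 ms, B at t=1 ms, C at t=2 ms.
    arrivals: List[Tuple[float, str]] = [(0, "A")] * 3 + [(1, "B")] * 3 + [(2, "C")] * 3
    buckets: Dict[str, Bucket] = {c: Bucket(10, 40, nodelay) for c in "ABC"}
    forwarded: List[Tuple[float, str]] = []
    for t, client in arrivals:
        when = buckets[client].offer(t)
        if when is not None:
            forwarded.append((when, client))
    # sort() is stable, so requests forwarded at the same ms keep their arrival order
    forwarded.sort(key=lambda item: item[0])
    return "".join(client for _, client in forwarded)


print(backend_order(nodelay=False))  # ABCABCABC
print(backend_order(nodelay=True))   # AAABBBCCC
```

Without nodelay each client's extra requests are released every 100 ms, so the three queues interleave; with nodelay each client's whole burst goes upstream as soon as it arrives.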

Let me sum up the article https://www.nginx.com/blog/rate-limiting-nginx/ here.

Above all, the most important configuration is x r/s.

1. x r/s only: excess requests are rejected immediately.
2. x r/s + burst: excess requests are queued.

   Compared with 1, the cost is that, on the client side, the queued requests take up the chances of later requests that would otherwise have been served.

   For example, compare 10r/s burst=20 with plain 10r/s: the 11th request would be rejected immediately under the latter, but under the former it is queued and will be served. The 11th request takes up the 21st request's chance.
3. x r/s + burst + nodelay: already explained above.
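The three modes can be compared with one function. This is a minimal sketch under the same simplified model (`limit_req` here is a hypothetical Python function, not the nginx directive's implementation), assuming 30 requests from one client arrive at the same instant with rate=10r/s. Because nginx tracks requests at millisecond granularity, plain 10r/s without burst means one request per 100 ms, so only the first of the simultaneous requests gets through.

```python
from typing import List, Optional


def limit_req(arrivals_ms: List[float], rate: float, burst: int = 0,
              nodelay: bool = False) -> List[Optional[float]]:
    """Simplified model of nginx limit_req for a single client.

    Returns, per request, the time (ms) it is forwarded upstream,
    or None if it is rejected.
    """
    excess, last = 0.0, None
    result: List[Optional[float]] = []
    for t in arrivals_ms:
        new = 0.0 if last is None else max(0.0, excess - rate * (t - last) / 1000 + 1)
        if new > burst:
            result.append(None)  # bucket overflow: rejected, state unchanged
            continue
        excess, last = new, t
        result.append(t if nodelay else t + new * 1000 / rate)
    return result


at_once = [0.0] * 30  # hypothetical: 30 requests arrive at the same instant
modes = [("x r/s only", {}),
         ("x r/s + burst", {"burst": 20}),
         ("x r/s + burst + nodelay", {"burst": 20, "nodelay": True})]
for label, kwargs in modes:
    out = limit_req(at_once, rate=10, **kwargs)
    served = [t for t in out if t is not None]
    print(f"{label}: served={len(served)}, rejected={out.count(None)}, "
          f"last forwarded at {max(served)} ms")
# x r/s only: served=1, rejected=29, last forwarded at 0.0 ms
# x r/s + burst: served=21, rejected=9, last forwarded at 2000.0 ms
# x r/s + burst + nodelay: served=21, rejected=9, last forwarded at 0.0 ms
```

burst decides how many requests are accepted; nodelay only decides whether the accepted ones wait.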

P.S. The Two-Stage Rate Limiting section of the article is very confusing. I don't understand it, but that doesn't seem to matter.

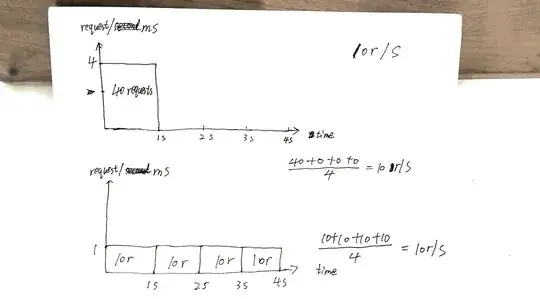

For example:

> With this configuration in place, a client that makes a continuous stream of requests at 8 r/s experiences the following behavior.

8 r/s? Seriously? The image shows 17 requests within 3 seconds, and 17 / 3 ≈ 5.7, not 8.