There has been some debate in the comments to Chopper3's answer that is not well informed because of some poorly understood aspects of Equallogic's networking requirements and multipathing behaviour.

First the VMware side:

For starters on the ESXi side the current recommendation, when using the iSCSI Software Initiator, from VMware (for ESX\ESXi 4.1) and Dell is that you should have a single physical Nic mapped to each VMkernel Port that will be used for iSCSI. The binding process that is now recommended enforces this. It requires that you have only one active physical nic and no standby nics for each VMkernel port. No bonding allowed. Now you can cheat this and go back afterwards and add a failover nic but the intention is that MPIO will handle the failover so this serves no useful purpose (at least when everything is working as intended by VMware).

The default multipathing policy will allow active, active connections to an Equallogic array using round robin.

Second the Equallogic side:

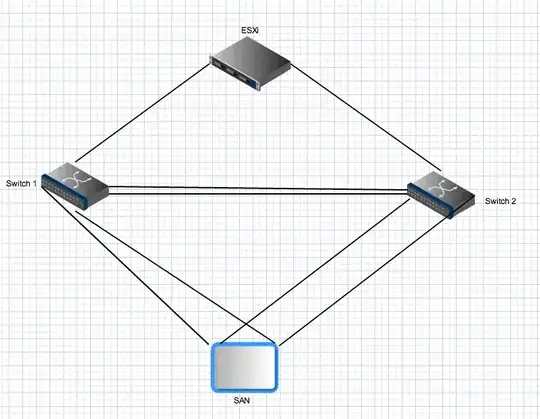

Equallogic arrays have dual controllers that act in an active\standby mode. For the PS4000 these have two Gigabit Nics on each controller. For the active controller both of these nics are active and can receive IO from the same source. The network configuration recommends that the array's nics should be connected to separate switches. From the server side you have multiple links that should also be distributed to separate switches. Now for the odd part - Equallogic arrays expect that all initiator ports can see all active ports on the arrays. This is one of the reasons you need a trunk between the two switches. That means that with a host with two VMkernel iSCSI ports and a single PS4000 there are 4 active paths between the initator and the target - two are "direct" and two traverse the ISL.

For the standby controller's connections the same rules apply but these nics will only become active after a controller failover and the same principles apply. After failover in this environment there will still be four active paths.

Third for more advanced multipathing:

Equallogic now have a Multipath Extension Module that plugs into the VMware Plugable Storage Architecture that provides intelligent load balancing (using Least queue depth, Round Robin or MRU) across VMkernel ports. This will not work if all vmkernel uplink nics are not able to connect to all active Equallogic ports. This also ensures that the number of paths actually used remains reasonable - in large Equallogic environments the number of valid paths between a host and an Equallogic Group can be very high because all target nics are active, and all source nics can see all target nics.

Fourth for larger Equallogic Environments:

As you scale up an Equallogic environment you add additional arrays into a shared group. All active ports on all member arrays in a group must be able to see all other active ports on all other arrays in the same group. This is a further reason why you need fat pipes providing inter switch connectiongs between all switches in your Equallogic iSCSI fabric. This scaling also dramatically increases the number of valid active paths between initiators and targets. With an Equallogic Group consisting of 3 PS6000 arrays (four nics per controller vs 2 for the PS4000), and an ESX host with two vmkernel ports, there will be 24 possible active paths for the MPIO stack to choose from.

Fifth Bonding\link aggregation and Inter Switch links in an Equallogic Environment:

All of the inter array and initator<->array connections are single point to point Gigabit connections (or 10Gig if you have a 10Gig array). There is no need for, and no benefit to be gained from, bonding on the ESX server side and you cannot bond the ports on the Equallogic arrays. The only area where link aggregation\bonding\whatever-you-want-to-call it is relevant in an Equallogic switched ethernet fabric is on the interswitch links. Those links need to able to carry concurrent streams that can equal the total number of active Equallogic ports in your environment - you may need a lot of aggregate bandwidth there even if each point to point link between array ports and iniatator ports is limited to 1gbps.

Finally:

In an Equallogic environment traffic from a host (initiator) to an array can and will traverse the interswitch link. Whether a particular path does so depends on the source and destination ip-address for that particular path but each source port can connect to each target port and at least one of those paths will require traversing the ISL. In smaller environments (like this one) all of those paths will be used and active. In larger environments only a subset of possible paths are used but the same distribution will happen. The aggregate iSCSI bandwidth available to a host (if properly configured) is the sum of all of its iSCSI vmkernel port bandwidth, even if you are connecting to a single array and a single volume. How efficient that may be is another issue and this answer is already far too long.