We are looking to move our infrastructure from our office to a colocation facility (colo).

Currently we run a rack-mount white-box server built from commodity hardware, with ESXi 4 as the hypervisor, hosting 9 VMs for internal development, DC, Exchange, etc.

We would like to use a SAN for storage, and have come up with a network design that uses the spare Ethernet port on the physical server to connect to a second server, which would act as the SAN.

The question is: is a single gigabit Ethernet port sufficient for this application? I have used fibre for this in the past, but never Ethernet.
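For a rough sense of scale, here is a back-of-envelope sketch of what one gigabit link could carry. The 90% efficiency figure and the even split across VMs are my own assumptions for illustration, not measurements, and the function name is made up for this example.

```python
def usable_mb_per_s(link_gbps: float, efficiency: float = 0.9) -> float:
    """Approximate usable throughput in MB/s for a link of the given speed.

    efficiency is an assumed allowance for protocol overhead, not a measured value.
    """
    raw_mb_per_s = link_gbps * 1000 / 8  # 1 Gbit/s is 125 MB/s before overhead
    return raw_mb_per_s * efficiency


VM_COUNT = 9  # number of VMs on the current ESXi host

total = usable_mb_per_s(1.0)
per_vm = total / VM_COUNT  # worst case: every VM doing storage I/O at once

print(f"usable: {total:.1f} MB/s, per VM if all are busy: {per_vm:.1f} MB/s")
```

This only covers sequential throughput. It says nothing about latency or IOPS, which may matter more for something like Exchange.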

These guys (http://www.datacore.com/) have a method of providing a SAN over Ethernet.

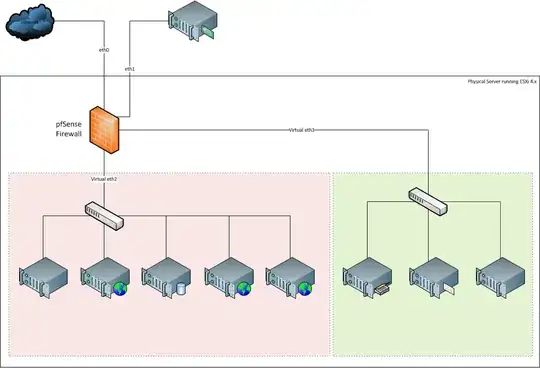

The proposed physical architecture is as follows:

With the virtual machines looking a bit like this (obviously the connection between pfSense and eth1 would be removed if the top server were a SAN):