We're getting a pair of new 8Gb switches for our fibre channel fabric. This is a Good Thing since we're running out of ports in our primary datacenter, and it'll allow us to have at least one 8Gb ISL running between our two datacenters.

Our two datacenters are about 3.2km apart as the fibre runs. We've been getting solid 4Gb service for a couple of years now, and I have high hopes it can sustain 8Gb as well.

I'm currently figuring out how to reconfigure our fabric to accept these new switches. Due to cost decisions a couple of years ago we are not running a fully separate double-loop fabric. The cost of full redundancy was seen as more expensive than the unlikely downtime of a switch failure. That decision was made before my time, and since then things haven't improved much.

I would like to take this opportunity to make our fabric more resilient in the face of a switch failure (or FabricOS upgrade).

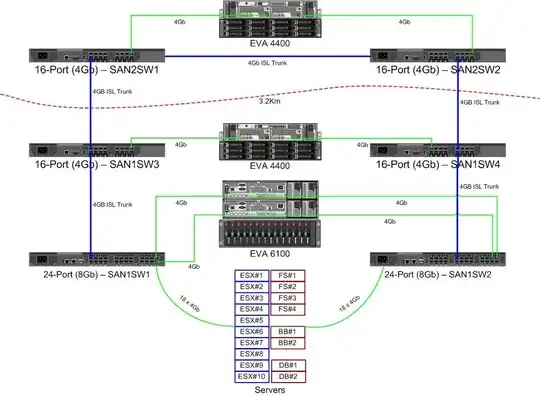

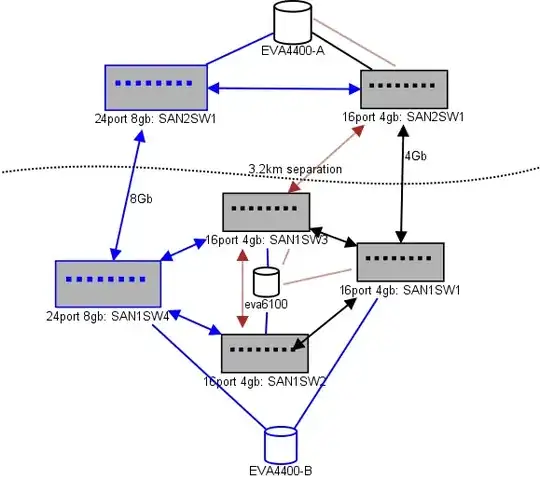

Here is a diagram of what I'm thinking for a lay-out. Blue items are new, red items are existing links that will be (re)moved.

(source: sysadmin1138.net)

The red arrowed line is the current ISL switch link, both ISLs are coming from the same switch. The EVA6100 is currently connected to both of the 16/4 switches that have an ISL. The new switches will allow us to have two switches in the remote DC some one of the long-range ISLs are moving to the new switch.

The advantage to this is that each switch is no more than 2 hops from another switch, and the two EVA4400's, which will be in an EVA-replication relationship, are 1 hop from each other. The EVA6100 in the chart is an older device that will eventually be replaced, probably with yet another EVA4400.

The bottom half of the chart is where most of our servers are, and I'm having some concerns about exact placement. What needs to go in there:

- 10 VMWare ESX4.1 hosts

- Accesses resources on the EVA6100

- 4 Windows Server 2008 servers in a one fail-over cluster (file-server cluster)

- Accesses resources on both the EVA6100 and the remote EVA4400

- 2 Windows Server 2008 servers in a second fail-over cluster (Blackboard content)

- Accesses resources on the EVA6100

- 2 MS-SQL database servers

- Accesses resources on the EVA6100, with nightly DB exports going to the EVA4400

- 1 LTO4 tape library with 2 LTO4 tape drives. Each drive gets its own fibre port.

- The backup servers (not in this list) spool to them

At the moment the ESX cluster can tolerate up to 3, maybe 4, hosts going down before we have to start shutting VMs down for space. Happily, everything has MPIO turned on.

The current 4Gb ISL links haven't come close to saturation that I've noticed. That may change with the two EVA4400's replicating, but at least one of the ISLs will be 8Gb. Looking at the performance I'm getting out of EVA4400-A I am very certain that even with replication traffic we will have a hard time crossing the 4Gb line.

The 4-node file-serving cluster can have two nodes on SAN1SW4 and two on SAN1SW1, as that'll put both storage arrays one hop away.

The 10 ESX nodes I'm somewhat head-scratchy over. Three on SAN1SW4, three on SAN1SW2, and four on SAN1SW1 is an option, and I'd be very interested to hear other opinions on layout. Most of these do have dual-port FC cards, so I can double-run a few nodes. Not all of them, but enough to allow a single switch to fail without killing everything.

The two MS-SQL boxes need to go on SAN1SW3 and SAN1SW2, as they need to be close to their primary storage and db-export performance is less important.

The LTO4 drives are currently on SW2 and 2 hops from their main streamer, so I already know how that works. Those can remain on SW2 and SW3.

I'd prefer not to make the bottom half of the chart a fully-connected topology as that would reduce our usable port-count from 66 to 62, and SAN1SW1 would be 25% ISLs. But if that's strongly recommended I can go that route.

Update: Some performance numbers that will probably be useful. I had them, I just spaced that they're useful for this kind of problem.

EVA4400-A in the above chart does the following:

- During the work-day:

- I/O ops average under 1000 with spikes to 4500 during file-server cluster ShadowCopy snapshots (lasts about 15-30 seconds).

- MB/s generally stays in the 10-30MB range, with spikes up to 70MB and 200MB during ShadowCopies.

- During the night (backups) is when it really pedals fast:

- I/O ops average around 1500, with spikes up to 5500 during DB backups.

- MB/s varies a lot, but runs about 100MB for several hours, and pumps an impressive 300MB/s for about 15 minutes during the SQL export process.

EVA6100 is a lot more busy, since it is the home to the ESX cluster, MSSQL, and an entire Exchange 2007 environment.

- During the day I/O ops average about 2000 with frequent spikes up to around 5000 (more database processes), and MB/s averaging between 20-50MB/s. Peak MB/s happens during ShadowCopy snapshots on the file-serving cluster (~240MB/s) and lasts for less than a minute.

- During the night the Exchange Online Defrag that runs from 1am to 5am pumps I/O Ops to the line at 7800 (close to flank speed for random access with this number of spindles) and 70MB/s.

I would appreciate any suggestions you may have.