I have an unusual problem with one of my servers. Disk I/O has been increasing steadily for the last couple of weeks. See this graph from Munin:

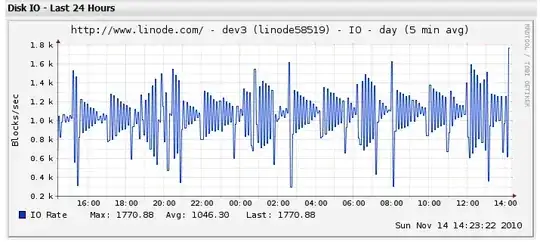

Linode's dashboard gives a more fine-grained picture of disk I/O. Here is the cyclical graph over a one-day interval. Note that even though it looks cyclical, the average disk I/O has been rising steadily over a period of weeks (see the graph above):

I ran iotop and saw that kjournald is the only process writing to disk (apart from the occasional rsyslogd write, but kjournald writes far more frequently). In the graphs above, the read component of I/O is practically zero.
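For anyone who wants to cross-check the write volume independently of Munin or iotop, here is a minimal sketch (not what produced the graphs above) that parses `/proc/diskstats` and reports write throughput per device. The function name `sectors_written` and the 10-second sampling interval are my own choices for illustration; it assumes the standard Linux field layout, where the tenth field is cumulative sectors written in 512-byte units.

```python
import time


def sectors_written(diskstats_text):
    """Map device name -> cumulative sectors written, given /proc/diskstats text."""
    result = {}
    for line in diskstats_text.splitlines():
        fields = line.split()
        if len(fields) < 10:
            continue  # skip blank or malformed lines
        # fields: major, minor, name, reads, reads merged, sectors read,
        # ms reading, writes, writes merged, sectors written, ...
        result[fields[2]] = int(fields[9])
    return result


def read_diskstats(path="/proc/diskstats"):
    with open(path) as f:
        return f.read()


if __name__ == "__main__":
    interval = 10  # seconds between samples (arbitrary choice)
    before = sectors_written(read_diskstats())
    time.sleep(interval)
    after = sectors_written(read_diskstats())
    for dev, count in sorted(after.items()):
        delta = count - before.get(dev, count)
        if delta > 0:
            # /proc/diskstats counts sectors in 512-byte units
            print(f"{dev}: {delta * 512 / interval:.0f} bytes/s written")
```

Running it a few times a day and comparing the numbers should show whether the write rate really grows, independent of the graphing tools.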

Why is kjournald writing even when no other process is writing? Why is the size of the writes getting larger by the day?

Another clue: free memory is also decreasing monotonically while "buffers" is increasing. See this graph:

PS: the server runs Apache only. Access logs are disabled, but error logs are enabled. It serves about 80 requests/second. We use Redis as a queue. The disk uses ext3.