OK, this was asked a while back, and I'm late to the party. Still, there is something to add here.

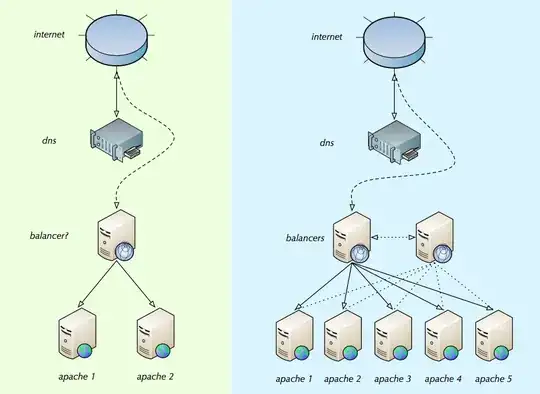

Jackie, you've pretty much nailed it. Your illustration shows how load balancing is handled on most smaller and midsized installations.

You should read the load balancing introduction by Willy Tarreau that Nakedible linked to. It is still valid, and it's a good introduction.

You need to consider how these fit your needs:

- TCP/IP-level load balancers (Linux Virtual Server et al.). Lowest per-connection overhead and highest speed, but they cannot "see" HTTP.

- HTTP-level load balancers (HAProxy, nginx, Apache 2.2, Pound, Microsoft ARR, and more). Higher overhead, but they can see HTTP, gzip-compress responses, terminate SSL, and do sticky-session load balancing.

- HTTP reverse proxies (Apache Traffic Server, Varnish, Squid). They can store cacheable objects (some web pages, CSS, JS, images) in RAM and serve them to subsequent clients without involving the backend webserver. They can often do some of the same things that L7 HTTP load balancers do.
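To make the reverse-proxy point concrete, here is a toy sketch of the idea (not how Varnish, Squid or Traffic Server are actually implemented). It assumes a hypothetical `backend` function that fetches a URL from the webapp server, a fixed TTL, and a crude "static files are cacheable" rule; real proxies decide cacheability from HTTP headers such as `Cache-Control`.

```python
import time
from typing import Callable, Dict, Tuple


class CachingProxy:
    """Toy in-memory cache in front of a backend (illustration only)."""

    def __init__(self, backend: Callable[[str], bytes], ttl_seconds: float = 60.0):
        self.backend = backend    # hypothetical: fetches a URL from the webapp server
        self.ttl = ttl_seconds    # assumed fixed freshness lifetime
        self.cache: Dict[str, Tuple[float, bytes]] = {}
        self.backend_hits = 0     # how often the backend actually had to work

    def get(self, url: str) -> bytes:
        now = time.monotonic()
        entry = self.cache.get(url)
        if entry is not None and now - entry[0] < self.ttl:
            return entry[1]       # served from RAM, backend not involved
        body = self.backend(url)
        self.backend_hits += 1
        if self._cacheable(url):
            self.cache[url] = (now, body)
        return body

    @staticmethod
    def _cacheable(url: str) -> bool:
        # Assumption for this sketch: static assets are cacheable, the rest is dynamic.
        return url.endswith((".css", ".js", ".png", ".jpg"))


# Usage: the stylesheet hits the backend once; the dynamic page hits it every time.
proxy = CachingProxy(lambda url: f"content of {url}".encode())
for path in ["/static/site.css", "/static/site.css", "/cart", "/cart"]:
    proxy.get(path)
print(proxy.backend_hits)  # 3
```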

> there is a second balancer as I am sure at some point the balancer would need help too.

Well, sure. But load balancing is simple, and a single load balancer can often handle a lot of traffic. I link to this article, which struck a nerve on the web, just as an example of the performance ballpark a single modern server can provide. Don't use multiple LBs before you need to. When you do, a common approach is IP-level load balancers (or DNS round robin) at the very front, forwarding to HTTP-level load balancers, which in turn forward to proxies and webapp servers.

> help on what the "balancer/s" should be and best practices on how to set them up.

The trouble spot is session state handling and, to some extent, behavior when a server fails. Setting up the load balancers themselves is comparatively straightforward.

If you're only using 2-4 backend webapp servers, static hashing on the client's source IP address can be viable. This avoids the need for shared session state among the webapp servers. Each webapp node sees 1/N of the overall traffic, and the client-to-server mapping is static in normal operation. It's not a good fit for larger installations, though.
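A minimal sketch of source-IP hashing, assuming a plain hash-modulo-N scheme (real balancers differ in the hash they use). The backend addresses are hypothetical, and SHA-256 is just a convenient stable hash, chosen here because Python's built-in `hash()` is salted per process for `str`/`bytes`. The usage part also shows why the mapping is only static "in normal operation": with modulo hashing, removing one of four nodes remaps most clients, not just the ones on the failed node. For a well-mixed hash, only values where `h % 4 == h % 3` stay put, which is 3 out of every 12, so you'd expect roughly three quarters to move.

```python
import hashlib
import ipaddress
from typing import List


def pick_backend(client_ip: str, backends: List[str]) -> str:
    """Map a client to a backend by hashing its source IP (static mapping)."""
    # hashlib instead of hash(): Python salts str/bytes hashes per process, so
    # hash() would produce a different mapping after every restart.
    packed = ipaddress.ip_address(client_ip).packed
    digest = hashlib.sha256(packed).digest()
    return backends[int.from_bytes(digest[:8], "big") % len(backends)]


# Usage with hypothetical backend and client addresses.
backends = ["10.0.0.11", "10.0.0.12", "10.0.0.13", "10.0.0.14"]
clients = [f"198.51.100.{i}" for i in range(1, 201)]
before = {c: pick_backend(c, backends) for c in clients}
# One node fails: with plain modulo hashing, clients on healthy nodes move too.
after = {c: pick_backend(c, backends[:3]) for c in clients}
moved = sum(before[c] != after[c] for c in clients)
print(f"{moved} of {len(clients)} clients moved to a different backend")
```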

The two best load balancing algorithms, in the sense that they behave well under high load and distribute load evenly, are round robin and true random load balancing. Both require that your web application has shared session state available on all webapp nodes, since consecutive requests from the same user can land on different servers. How this is done depends on the webapp tech stack, but there are generally standard solutions available.
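Both algorithms are tiny. The sketch below shows them side by side, with hypothetical backend names. Note that neither looks at who the client is, which is exactly why every node must be able to serve any user's session.

```python
import itertools
import random
import threading
from typing import List


class RoundRobin:
    """Hand out backends in a fixed rotation."""

    def __init__(self, backends: List[str]):
        self._cycle = itertools.cycle(backends)
        # Lock so concurrent request handlers can safely share one iterator.
        self._lock = threading.Lock()

    def pick(self) -> str:
        with self._lock:
            return next(self._cycle)


def pick_random(backends: List[str], rng: random.Random = random) -> str:
    """True random: each request is chosen independently of earlier ones."""
    return rng.choice(backends)


# Usage
rr = RoundRobin(["app1", "app2", "app3"])
print([rr.pick() for _ in range(6)])  # ['app1', 'app2', 'app3', 'app1', 'app2', 'app3']
print(pick_random(["app1", "app2", "app3"], random.Random(42)))
```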

If neither static hashing nor shared session state is a good fit for you, the usual choice is 'sticky session' load balancing with per-server session state. In most cases this works fine, and it is a fully viable choice.
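Sticky sessions are commonly implemented with a cookie that records which server the client was assigned to. This is a rough sketch of that idea with a hypothetical cookie name and backend names, not any particular product's behavior. It also shows the failure case mentioned earlier: if the pinned server is down, the client is re-pinned elsewhere and its per-server session is lost.

```python
import random
from typing import Dict, List, Optional, Set, Tuple

STICKY_COOKIE = "lb_backend"  # hypothetical cookie name chosen for this sketch


def route(cookies: Dict[str, str], backends: List[str],
          healthy: Set[str]) -> Tuple[str, Optional[str]]:
    """Pick a backend; return (backend, cookie value to set, or None if unchanged)."""
    pinned = cookies.get(STICKY_COOKIE)
    if pinned in backends and pinned in healthy:
        # Returning client whose server is up: keep it where its session lives.
        return pinned, None
    # New client, or its server is down (its per-server session is gone):
    # choose a healthy server and pin the client to it with a cookie.
    candidates = [b for b in backends if b in healthy]
    if not candidates:
        raise RuntimeError("no healthy backends")
    choice = random.choice(candidates)
    return choice, choice


# Usage: the first request gets pinned; follow-up requests stay on that server.
backends = ["app1", "app2", "app3"]
first, set_cookie = route({}, backends, healthy=set(backends))
again, _ = route({STICKY_COOKIE: set_cookie}, backends, healthy=set(backends))
print(first, again)  # same server twice
```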

> the balancer/s would see how many connections are on each apache instance (via some config list of internal IP's or external IPs) and distributes the connections equally

Yeah, some sites use this; it is usually called "least connections" load balancing. There are many names for the many different load balancing algorithms that exist. If you can pick round robin or random (or weighted round robin or weighted random), I would recommend doing so, for the reasons given above.
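For comparison, here is a sketch of least connections alongside the two weighted variants. The backend names, weights and connection counts are made up. The weighted round robin shown is the "smooth" variant, which interleaves the heavier server's turns instead of sending it a burst of consecutive requests; other weighted round robin schemes exist.

```python
import random
from typing import Dict


def least_connections(active: Dict[str, int]) -> str:
    """active maps backend -> number of currently open connections."""
    return min(active, key=active.get)


def weighted_random(weights: Dict[str, int], rng: random.Random = random) -> str:
    """Pick a backend with probability proportional to its weight."""
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


class SmoothWeightedRoundRobin:
    """Weighted round robin that spreads a heavy server's turns out evenly."""

    def __init__(self, weights: Dict[str, int]):
        self.weights = dict(weights)
        self.current = {name: 0 for name in weights}
        self.total = sum(weights.values())

    def pick(self) -> str:
        # Every backend earns its weight; the richest one is picked and pays the total.
        for name, w in self.weights.items():
            self.current[name] += w
        best = max(self.current, key=self.current.get)
        self.current[best] -= self.total
        return best


# Usage with hypothetical numbers
print(least_connections({"app1": 12, "app2": 3, "app3": 7}))  # app2
wrr = SmoothWeightedRoundRobin({"a": 5, "b": 1, "c": 1})
print([wrr.pick() for _ in range(7)])  # ['a', 'a', 'b', 'a', 'c', 'a', 'a']
```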

Last thing: don't forget that many vendors (F5, Cisco and others at the high end; e.g. Coyote Point and Kemp Technologies at more reasonable prices) offer mature load balancing appliances.